Bevy 0.13

Posted on February 17, 2024 by Bevy Contributors

Thanks to 198 contributors, 672 pull requests, community reviewers, and our generous sponsors, we're happy to announce the Bevy 0.13 release on crates.io!

For those who don't know, Bevy is a refreshingly simple data-driven game engine built in Rust. You can check out our Quick Start Guide to try it today. It's free and open source forever! You can grab the full source code on GitHub. Check out Bevy Assets for a collection of community-developed plugins, games, and learning resources. And to see what the engine has to offer hands-on, check out the entries in the latest Bevy Jam, including the winner That's a LOT of beeeeees.

To update an existing Bevy App or Plugin to Bevy 0.13, check out our 0.12 to 0.13 Migration Guide.

Since our last release a few months ago we've added a ton of new features, bug fixes, and quality of life tweaks, but here are some of the highlights:

- Lightmaps: A fast, popular baked global illumination technique for static geometry (baked externally in programs like The Lightmapper).

- Irradiance Volumes / Voxel Global Illumination: A baked form of global illumination that samples light at the centers of voxels within a cuboid (baked externally in programs like Blender).

- Approximate Indirect Specular Occlusion: Improved lighting realism by reducing specular light leaking via specular occlusion.

- Reflection Probes: A baked form of axis-aligned environment map that allows for realistic reflections for static geometry (baked externally in programs like Blender).

- Primitive shapes: Basic shapes are a core building block of both game engines and video games: we've added a polished, ready-to-use collection of them!

- System stepping: Completely pause and advance through your game frame-by-frame or system-by-system to interactively debug game logic, all while rendering continues to update.

- Dynamic queries: Refining queries from within systems is extremely expressive, and is the last big puzzle piece for runtime-defined types and third-party modding and scripting integration.

- Automatically inferred command flush points: Tired of reasoning about where to put apply_deferred and confused about why your commands weren't being applied? Us too! Now, Bevy's scheduler uses ordinary .before and .after constraints and inspects the system parameters to automatically infer (and deduplicate) synchronization points.

- Slicing, tiling and nine-patch 2D images: Ninepatch layout is a popular tool for smoothly scaling stylized tilesets and UIs. Now in Bevy!

- Camera-Driven UI: UI entity trees can now be selectively added to any camera, rather than being globally applied to all cameras, enabling things like split screen UIs!

- Camera Exposure: Realistic / "real world" control over camera exposure via EV100, f-stops, shutter speed, and ISO sensitivity. Lights have also been adjusted to make their units more realistic.

- Animation interpolation modes: Bevy now supports non-linear interpolation modes in exported glTF animations.

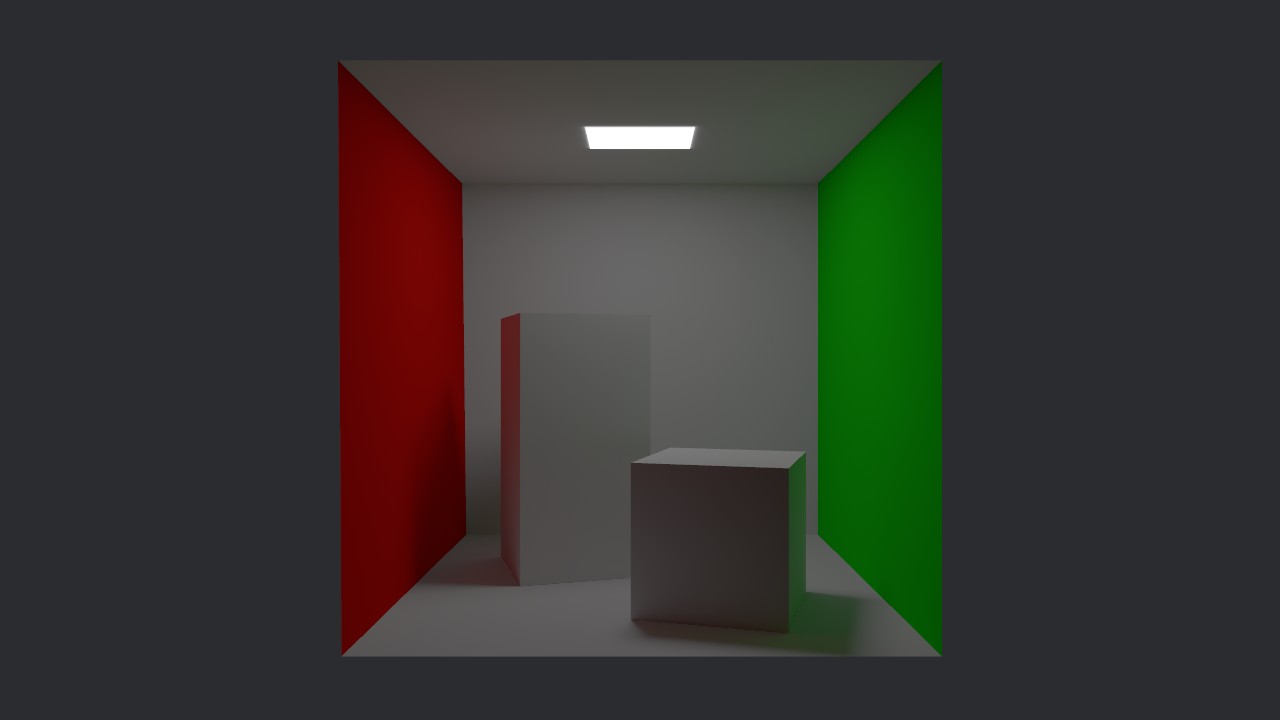

Initial Baked Lighting #

Computing lighting in real time is expensive, but for elements of a scene that never move (like rooms or terrain), we can get prettier lighting and shadows more cheaply by computing them ahead of time using global illumination, then storing the results in a "baked" form that never changes. Global illumination is a more realistic (and expensive) approach to lighting that often uses ray tracing. Unlike Bevy's default rendering, it takes light bouncing off of other objects into account, producing more realistic effects through the inclusion of indirect light.

Lightmaps #

Lightmaps are textures that store pre-computed global illumination results. They have been a mainstay of real-time graphics for decades. Bevy 0.13 adds initial support for rendering lightmaps computed in other programs, such as The Lightmapper. Ultimately we would like to add support for baking lightmaps directly in Bevy, but this first step unlocks lightmap workflows!

As the lightmaps example shows, just load in your baked lightmap image, and then insert a Lightmap component on the corresponding mesh.

Irradiance Volumes / Voxel Global Illumination #

Irradiance volumes (or voxel global illumination) are a technique for approximating indirect light by first dividing a scene into cubes (voxels), then sampling the amount of light present at the center of each of those voxels. This light is then added to objects within that space as they move through it, changing the ambient light level on those objects appropriately.

We've chosen to use the ambient cubes algorithm for this, based on Half Life 2. This allows us to match Blender's Eevee renderer, giving users a simple and free path to creating nice-looking irradiance volumes for their own scenes.
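
To make the ambient cube idea concrete, here is a minimal standalone sketch of how such a cube is commonly evaluated: each voxel stores six colors (one per axis direction), and a surface blends them using the squared components of its normal. This is plain Rust with no Bevy dependency. The `AmbientCube` type and `evaluate` method are hypothetical names for illustration and are not Bevy's API or its shader code.

```rust
/// Six RGB colors, one per axis direction: +X, -X, +Y, -Y, +Z, -Z.
/// (Hypothetical type for illustration; not part of Bevy's API.)
#[derive(Clone, Copy)]
pub struct AmbientCube {
    pub faces: [[f32; 3]; 6],
}

impl AmbientCube {
    /// Approximate the incoming light for a surface with unit normal `n`.
    /// Each axis contributes the color of the face the normal points toward,
    /// weighted by the squared normal component, so the weights sum to 1.
    pub fn evaluate(&self, n: [f32; 3]) -> [f32; 3] {
        let mut out = [0.0f32; 3];
        for axis in 0..3 {
            let weight = n[axis] * n[axis];
            // Even indices hold the positive face, odd indices the negative one.
            let face = if n[axis] >= 0.0 { 2 * axis } else { 2 * axis + 1 };
            for c in 0..3 {
                out[c] += weight * self.faces[face][c];
            }
        }
        out
    }
}
```

A normal pointing straight up picks only the +Y color, while a diagonal normal blends the faces it leans toward.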

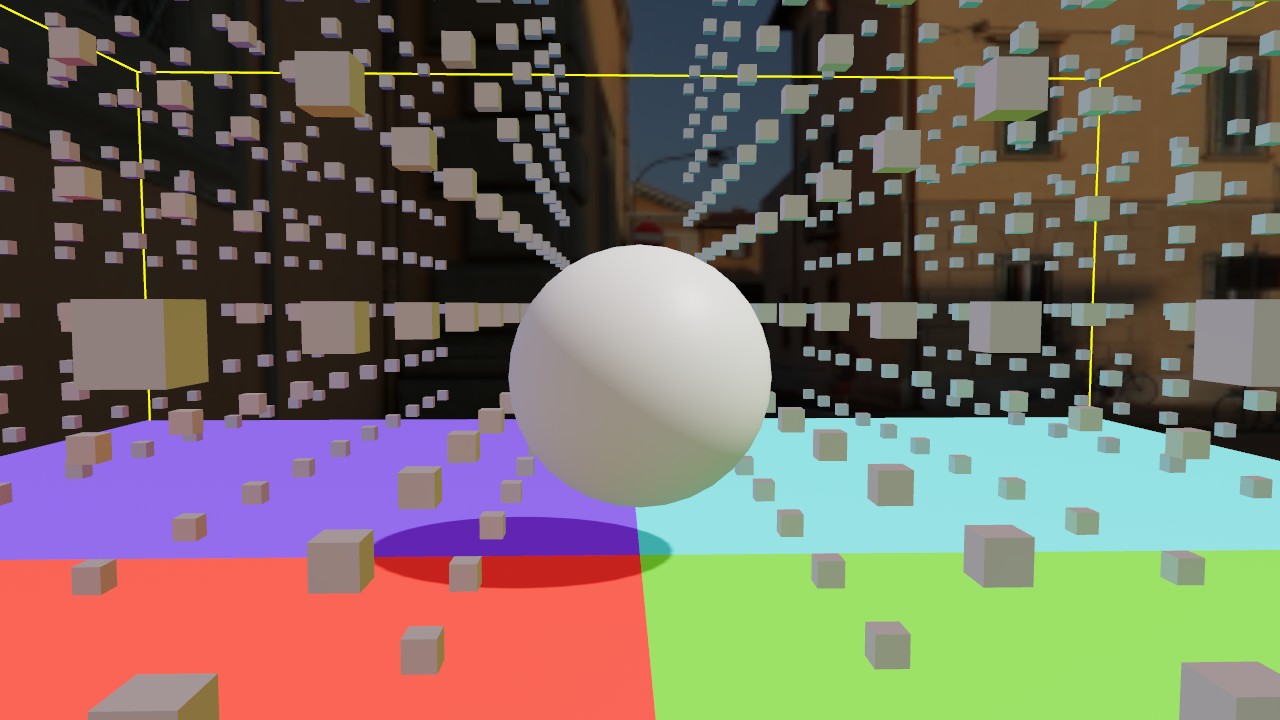

Notice how this sphere subtly picks up the colors of the environment as it moves around, thanks to irradiance volumes:

For now, you need to use external tools such as Blender to bake irradiance volumes, but in the future we would like to support baking irradiance volumes directly in Bevy!

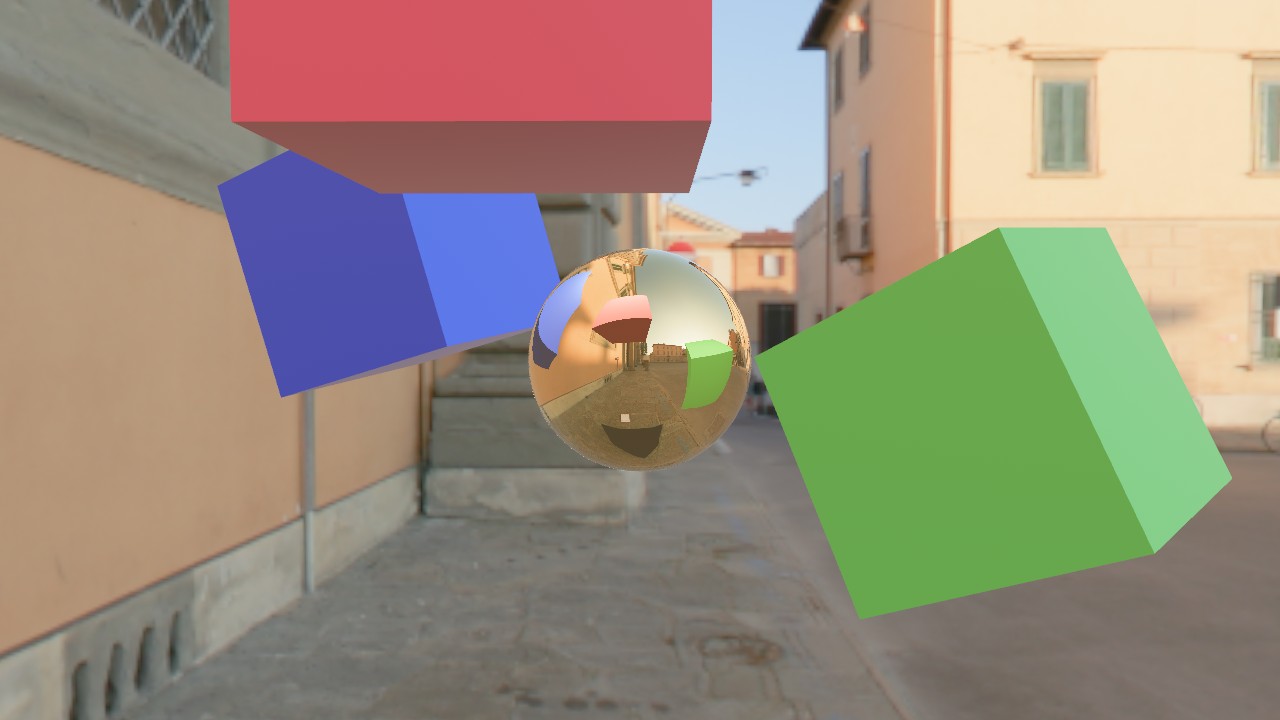

Minimal Reflection Probes #

Environment maps are 2D textures used to simulate lighting, reflection, and skyboxes in a 3D scene. Reflection probes generalize environment maps to allow for multiple environment maps in the same scene, each of which has its own axis-aligned bounding box. This is a standard feature of physically-based renderers and was inspired by the corresponding feature in Blender's Eevee renderer.

In the reflection probes PR, we've added basic support for these, laying the groundwork for pretty, high-performance reflections in Bevy games. Like with the baked global illumination work discussed above, these must currently be precomputed externally, then imported into Bevy. As discussed in the PR, there are quite a few caveats: WebGL2 support is effectively non-existent, sharp and sudden transitions will be observed because there's no blending, and all cubemaps in the world of a given type (diffuse or specular) must have the same size, format, and mipmap count.

Approximate Indirect Specular Occlusion #

Bevy's current PBR renderer over-brightens the image, especially at grazing angles where the fresnel effect tends to make surfaces behave like mirrors. This over-brightening happens because the surfaces must reflect something, but without path traced or screen-space reflections, the renderer has to guess what is being reflected. The best guess it can make is to sample the environment cube map, even if light would've hit something else before reaching the environment light. This artifact, where light occlusion is ignored, is called specular light leaking.

Consider a car tire; though the rubber might be shiny, you wouldn't expect it to have bright specular highlights inside a wheel well, because the car itself is blocking (occluding) the light that would otherwise cause these reflections. Fully checking for occlusion can be computationally expensive.

Bevy 0.13 adds support for Approximate Indirect Specular Occlusion, which uses our existing Screen Space Ambient Occlusion to approximate specular occlusion. This can run efficiently in real time while still producing reasonably high quality results.

In the future, this could be further improved with screen space reflections (SSR). However, conventional wisdom is that you should use specular occlusion alongside SSR, because SSR still suffers from light leaking artifacts.
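
As a rough illustration of how an ambient occlusion value can be turned into a specular occlusion factor, the sketch below uses a widely cited approximation from Lagarde and de Rousiers' Frostbite PBR course notes. It is standalone Rust rather than shader code, the function name is hypothetical, and whether Bevy's shader uses exactly this form is an assumption here.

```rust
/// Approximate specular occlusion from an ambient occlusion term.
/// (Hypothetical helper; an assumption about the technique, not Bevy's API.)
///
/// * `n_dot_v`: cosine of the angle between normal and view direction, in [0, 1].
/// * `ao`: ambient occlusion, where 0 is fully occluded and 1 is unoccluded.
/// * `roughness`: surface roughness in [0, 1].
pub fn specular_occlusion(n_dot_v: f32, ao: f32, roughness: f32) -> f32 {
    // Rough surfaces get a small exponent, flattening the view dependence.
    let exponent = (-16.0 * roughness - 1.0).exp2();
    ((n_dot_v + ao).powf(exponent) - 1.0 + ao).clamp(0.0, 1.0)
}
```

Unoccluded surfaces (`ao = 1`) keep their full specular contribution, and fully occluded ones (`ao = 0`) lose it, which is the behavior the car tire example calls for.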

Primitive Shapes #

Geometric shapes are used all across game development, from primitive mesh shapes and debug gizmos to physics colliders and raycasting. Despite being so commonly used across several domains, Bevy hasn't really had any general-purpose shape representations.

This is changing in Bevy 0.13 with the introduction of first-party primitive shapes! They are lightweight geometric primitives designed for maximal interoperability and reusability, allowing Bevy and third-party plugins to use the same set of basic shapes and increase cohesion within the ecosystem. See the original RFC for more details.

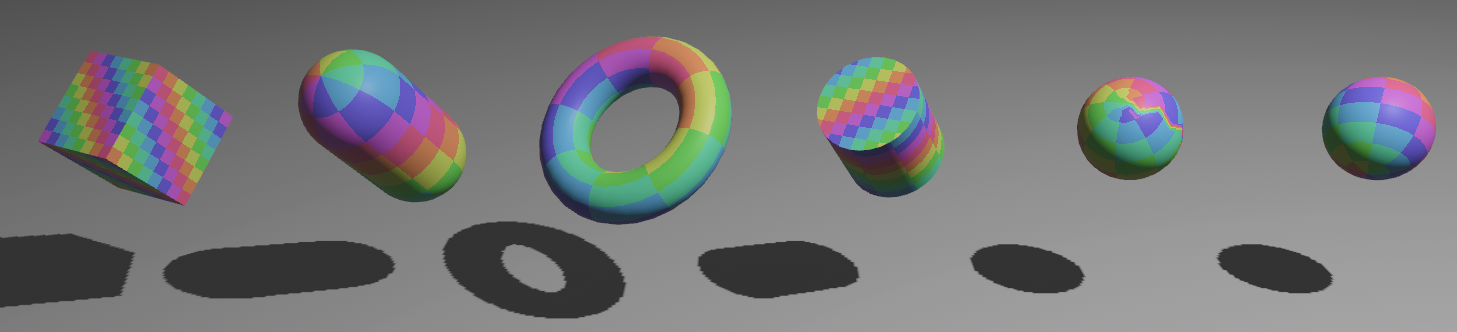

The built-in collection of primitives is already quite sizeable:

More primitives will be added in future releases.

Some use cases for primitive shapes include meshing, gizmos, bounding volumes, colliders, and ray casting functionality. Several of these have landed in 0.13 already!

Rendering #

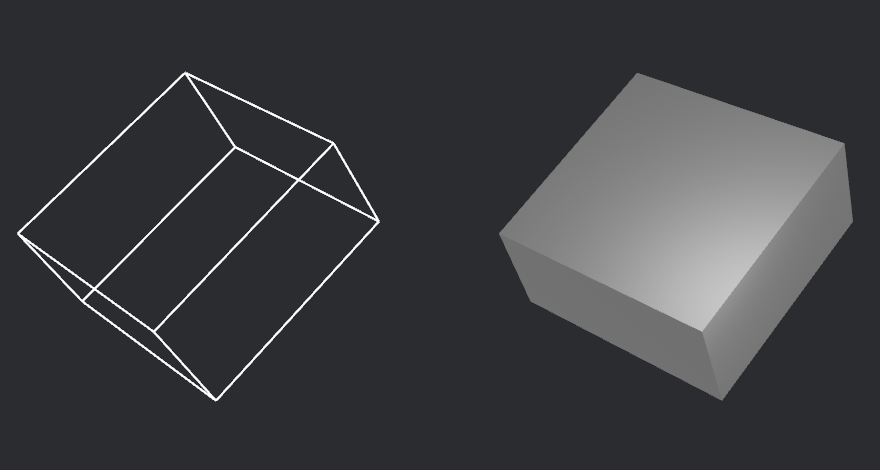

Primitive shapes can be rendered using both meshes and gizmos. In this section, we'll take a closer look at the new APIs.

Below, you can see a cuboid and a torus rendered using meshes and gizmos. You can check out all primitives that can be rendered in the new Rendering Primitives example.

Meshing #

Previous versions of Bevy have had types like Quad, Box, and UVSphere for creating meshes from basic shapes. These have been deprecated in favor of a builder-like API using the new geometric primitives.

Primitives that support meshing implement the Meshable trait. For some shapes, the mesh method returns a Mesh directly:

let before = Mesh::from(Quad::new(Vec2::new(2.0, 1.0)));

let after = Rectangle::new(2.0, 1.0).mesh(); // Mesh::from also works

For most primitives however, it returns a builder for optional configuration:

// Create a circle mesh with a specified vertex count

let before = Mesh::from(Circle {

radius: 1.0,

vertices: 64,

});

let after = Circle::new(1.0).mesh().resolution(64).build();

Below are a few more examples of meshing with the new primitives.

// Icosphere

let before = meshes.add(

Mesh::try_from(Icosphere {

radius: 2.0,

subdivisions: 8,

})

.unwrap()

);

let after = meshes.add(Sphere::new(2.0).mesh().ico(8).unwrap());

// Cuboid

// (notice how Assets::add now also handles mesh conversion automatically)

let before = meshes.add(Mesh::from(shape::Box::new(2.0, 1.0, 1.0)));

let after = meshes.add(Cuboid::new(2.0, 1.0, 1.0));

// Plane

let before = meshes.add(Mesh::from(Plane::from_size(5.0)));

let after = meshes.add(Plane3d::default().mesh().size(5.0, 5.0));

With the addition of the primitives, meshing is also supported for more shapes, like Ellipse, Triangle2d, and Capsule2d. However, note that meshing is not yet implemented for all primitives, such as Polygon and Cone.

Below you can see some meshes in the 2d_shapes and 3d_shapes examples.

Some default values for mesh shape dimensions have also been changed to be more consistent.

Gizmos #

Primitives can also be rendered with Gizmos. There are two new generic methods:

- gizmos.primitive_2d(primitive, position, angle, color)

- gizmos.primitive_3d(primitive, position, rotation, color)

Some primitives can have additional configuration options similar to existing Gizmos drawing methods. For example, calling primitive_3d with a Sphere returns a SphereBuilder, which offers a segments method to control the level of detail of the sphere.

let sphere = Sphere { radius };

gizmos

.primitive_3d(sphere, center, rotation, color)

.segments(segments);

Bounding Volumes #

In game development, spatial checks have several valuable use cases, such as getting all entities that are in the camera's view frustum or near the player, or finding pairs of physics objects that might be intersecting. To speed up such checks, bounding volumes are used to approximate more complex shapes.

Bevy 0.13 adds some new publicly available bounding volumes: Aabb2d, Aabb3d, BoundingCircle, and BoundingSphere. These can be created manually, or generated from primitive shapes.

Each bounding volume implements the BoundingVolume trait, providing some general functionality and helpers. The IntersectsVolume trait can be used to test for intersections with these volumes. This trait is implemented for bounding volumes themselves, so you can test for intersections between them. This is supported between all existing bounding volume types, but only those in the same dimension.

Here is an example of how bounding volumes are constructed, and how an intersection test is performed:

// We create an axis-aligned bounding box that is centered at position

let position = Vec2::new(100., 50.);

let half_size = Vec2::splat(20.);

let aabb = Aabb2d::new(position, half_size);

// We create a bounding circle that is centered at position

let position = Vec2::new(80., 70.);

let radius = 30.;

let bounding_circle = BoundingCircle::new(position, radius);

// We check if the volumes are intersecting

let intersects = bounding_circle.intersects(&aabb);
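
For intuition about what a test like this has to compute, here is a standalone sketch of the usual circle-versus-box check: clamp the circle's center to the box to find the closest point, then compare the squared distance with the squared radius. The function name and array-based signature are hypothetical and independent of Bevy's types.

```rust
/// Returns true if a circle overlaps a 2D axis-aligned box.
/// The box is described by its `min` and `max` corners.
/// (Hypothetical helper for illustration; not Bevy's implementation.)
pub fn circle_intersects_aabb(
    center: [f32; 2],
    radius: f32,
    min: [f32; 2],
    max: [f32; 2],
) -> bool {
    // Closest point on (or inside) the box to the circle's center.
    let closest = [
        center[0].clamp(min[0], max[0]),
        center[1].clamp(min[1], max[1]),
    ];
    let dx = center[0] - closest[0];
    let dy = center[1] - closest[1];
    // Compare squared lengths to avoid a square root.
    dx * dx + dy * dy <= radius * radius
}
```

With the values from the example above, the box spans (80, 30) to (120, 70) and the circle is centered at (80, 70), which lies on the box's corner, so the two intersect.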

There are also two traits for the generation of bounding volumes: Bounded2d and Bounded3d. These are implemented for the new primitive shapes, so you can easily compute bounding volumes for them:

// We create a primitive, a hexagon in this case

let hexagon = RegularPolygon::new(50., 6);

let translation = Vec2::new(50., 200.);

let rotation = PI / 2.; // Rotation in radians

// Now we can get an Aabb2d or BoundingCircle from this primitive.

// These methods are part of the Bounded2d trait.

let aabb = hexagon.aabb_2d(translation, rotation);

let circle = hexagon.bounding_circle(translation, rotation);

Ray Casting and Volume Casting #

The bounding volumes also support basic ray casting and volume casting. Ray casting tests if a bounding volume intersects with a given ray, cast from an origin in a direction, up to a maximum distance. Volume casts work similarly, but function as if moving a volume along the ray.

This functionality is provided through the new RayCast2d, RayCast3d, AabbCast2d, AabbCast3d, BoundingCircleCast, and BoundingSphereCast types. They can be used to check for intersections against bounding volumes, and to compute the distance from the origin of the cast to the point of intersection.
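
The distance computation for a ray against an axis-aligned box is typically done with the "slab" method, sketched below in standalone Rust. The function name and signature are hypothetical, and this is an illustration of the general technique rather than Bevy's code.

```rust
/// Distance along a 2D ray to an axis-aligned box, or `None` on a miss.
/// `dir` is assumed to be unit length; `max_dist` limits the cast.
/// (Hypothetical helper for illustration; not Bevy's implementation.)
pub fn ray_aabb_distance(
    origin: [f32; 2],
    dir: [f32; 2],
    max_dist: f32,
    min: [f32; 2],
    max: [f32; 2],
) -> Option<f32> {
    let mut t_enter = 0.0f32;
    let mut t_exit = max_dist;
    for axis in 0..2 {
        if dir[axis] == 0.0 {
            // Parallel to this slab: the origin must already lie inside it.
            if origin[axis] < min[axis] || origin[axis] > max[axis] {
                return None;
            }
        } else {
            let t1 = (min[axis] - origin[axis]) / dir[axis];
            let t2 = (max[axis] - origin[axis]) / dir[axis];
            // Narrow the interval in which the ray is inside every slab.
            t_enter = t_enter.max(t1.min(t2));
            t_exit = t_exit.min(t1.max(t2));
        }
    }
    if t_enter <= t_exit { Some(t_enter) } else { None }
}
```

A ray that starts inside the box reports a distance of zero, and a box beyond `max_dist` or behind the origin is reported as a miss.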

Below, you can see ray casting, volume casting, and intersection tests in action:

To make it easier to reason about ray casts in different dimensions, the old Ray type has also been split into Ray2d and Ray3d. The new Direction2d and Direction3d types are used to ensure that the ray direction remains normalized, providing a type-level guarantee that the vector is always unit-length. These are already in use in some other APIs as well, such as for some primitives and gizmo methods.

System Stepping #

Bevy 0.13 adds support for System Stepping, which adds debugger-style execution control for systems.

The Stepping resource controls which systems within a schedule execute each frame, and provides step, break, and continue functionality to enable live debugging.

let mut stepping = Stepping::new();

You add the schedules you want to step through to the Stepping resource. The systems in these schedules can be thought of as the "stepping frame". Systems in the "stepping frame" won't run unless a relevant step or continue action occurs. Schedules that are not added will run on every update, even while stepping. This enables core functionality like rendering to continue working.

stepping.add_schedule(Update);

stepping.add_schedule(FixedUpdate);

Stepping is disabled by default, even when the resource is inserted. To enable it in apps, feature flags, dev consoles and obscure hotkeys all work great.

#[cfg(feature = "my_stepping_flag")]

stepping.enable();

Finally, you add the Stepping resource to the ECS World.

app.insert_resource(stepping);

System Step & Continue Frame #

The "step frame" action runs the system at the stepping cursor, and advances the cursor during the next render frame. This is useful to see individual changes made by systems, and see the state of the world prior to executing a system.

stepping.step_frame()

The "continue frame" action will execute systems starting from the stepping cursor to the end of the stepping frame during the next frame. It may stop before the end of the stepping frame if it encounters a system with a breakpoint. This is useful for advancing quickly through an entire frame, getting to the start of the next frame, or in combination with breakpoints.

stepping.continue_frame()

This video demonstrates these actions on the breakout example with a custom egui interface. The stepping cursor can be seen moving through the systems list as we click the step button. When the continue button is clicked, you can see the game progress one stepping frame for each click.
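
If the cursor semantics are hard to picture, the following toy model may help. It is a standalone sketch of "step" and "continue" over an ordered list of system names, including the early stop at a breakpoint described above. `StepModel` and all of its methods are hypothetical, and the exact breakpoint rule (a breakpoint on the system at the cursor does not block it) is an assumption; Bevy's real Stepping resource works on schedules and is considerably more involved.

```rust
/// Toy model of a stepping cursor over an ordered list of systems.
/// (Hypothetical type for illustration; not Bevy's `Stepping` resource.)
pub struct StepModel {
    systems: Vec<&'static str>,
    breakpoints: Vec<bool>,
    cursor: usize,
}

impl StepModel {
    pub fn new(systems: Vec<&'static str>) -> Self {
        let breakpoints = vec![false; systems.len()];
        Self { systems, breakpoints, cursor: 0 }
    }

    pub fn set_breakpoint(&mut self, name: &str) {
        if let Some(i) = self.systems.iter().position(|s| *s == name) {
            self.breakpoints[i] = true;
        }
    }

    /// "Step": run only the system at the cursor, then advance (wrapping).
    pub fn step_frame(&mut self) -> Vec<&'static str> {
        if self.systems.is_empty() {
            return Vec::new();
        }
        let ran = vec![self.systems[self.cursor]];
        self.cursor = (self.cursor + 1) % self.systems.len();
        ran
    }

    /// "Continue": run from the cursor to the end of the stepping frame,
    /// stopping early in front of a later system that has a breakpoint.
    pub fn continue_frame(&mut self) -> Vec<&'static str> {
        let start = self.cursor;
        let mut ran = Vec::new();
        let mut i = start;
        while i < self.systems.len() {
            if i != start && self.breakpoints[i] {
                break;
            }
            ran.push(self.systems[i]);
            i += 1;
        }
        // Wrap to the start of the next stepping frame when we reach the end.
        self.cursor = if i >= self.systems.len() { 0 } else { i };
        ran
    }
}
```

Each call returns the names of the systems that would run in that frame, which makes it easy to trace how the cursor moves.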

Breakpoints #

When a schedule grows to a certain point, it can take a long time to step through every system in the schedule just to see the effects of a few systems. In this case, stepping provides system breakpoints.

This video illustrates how a breakpoint on check_for_collisions() behaves with "step" and "continue" actions:

Disabling Systems During Stepping #

During debugging, it can be helpful to disable systems to narrow down the source of the problem. Stepping::never_run() and Stepping::never_run_node() can be used to disable systems while stepping is enabled.

Excluding Systems from Stepping #

It may be necessary to ensure some systems still run while stepping is enabled. While best-practice is to have them in a schedule that has not been added to the Stepping resource, it is possible to configure systems to always run while stepping is enabled. This is primarily useful for event & input handling systems.

Systems can be configured to always run by calling Stepping::always_run(), or Stepping::always_run_node(). When a system is configured to always run, it will run each rendering frame even when stepping is enabled.

Limitations #

There are some limitations in this initial implementation of stepping:

- Systems that read events likely will not step properly: Because frames still advance normally while stepping is enabled, events can be cleared before a stepped system can read them. The best approach here is to configure event-based systems to always run, or put them in a schedule not added to Stepping. "Continue" with breakpoints may also work in this scenario.

- Conditional systems may not run as expected when stepping: Similar to event-based systems, if the run condition is true for only a short time, the system may not run when stepped.

Detailed Examples #

- Text-based stepping example

- Non-interactive Bevy UI example stepping plugin used in the breakout example

- Interactive egui stepping plugin used in demo videos

Camera Exposure #

In the real world, the brightness of an image captured by a camera is determined by its exposure: the amount of light that the camera's sensor or film incorporates. This is controlled by several mechanics of the camera:

- Aperture: Measured in f-stops, the aperture opens and closes to control how much light is allowed into the camera's sensor or film by physically blocking off light from specific angles, similar to the pupil of an eye.

- Shutter Speed: How long the camera's shutter is open, which is the duration of time that the camera's sensor or film is exposed to light.

- ISO Sensitivity: How sensitive the camera's sensor or film is to light. A higher value indicates a higher sensitivity to light.

Each of these plays a role in how much light the final image receives. They can be combined into a final EV number (exposure value), such as the semi-standard EV100 (the exposure value for ISO 100). Higher EV100 numbers mean that more light is required to get the same result. For example, a sunny day scene might require an EV100 of about 15, whereas a dimly lit indoor scene might require an EV100 of about 7.

In Bevy 0.13, you can now configure the EV100 on a per-camera basis using the new Exposure component. You can set it directly using the Exposure::ev100 field, or you can use the new PhysicalCameraParameters struct to calculate an ev100 using "real world" camera settings like f-stops, shutter speed, and ISO sensitivity.
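
For reference, the standard photographic relationship between these settings and EV100 is EV100 = log2(N² / t) - log2(S / 100), where N is the f-number, t the shutter time in seconds, and S the ISO sensitivity. The standalone sketch below computes it; the function and parameter names are chosen for illustration and are not a claim about Bevy's exact implementation.

```rust
/// EV100 from physical camera settings, using the standard formula
/// EV100 = log2(N^2 / t) - log2(S / 100).
/// (Hypothetical helper for illustration; names are assumptions.)
pub fn ev100(aperture_f_stops: f32, shutter_speed_s: f32, sensitivity_iso: f32) -> f32 {
    (aperture_f_stops * aperture_f_stops * 100.0 / (shutter_speed_s * sensitivity_iso)).log2()
}
```

For example, f/16 at 1/100 s and ISO 100 gives roughly 14.6, close to the sunny-day figure mentioned above, and doubling the ISO lowers the result by exactly one stop.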

This is important because Bevy's "physically based" renderer (PBR) is intentionally grounded in reality. Our goal is for people to be able to use real-world units in their lights and materials and have them behave as close to reality as possible.


Note that prior versions of Bevy hard-coded a static EV100 for some of its light types. In Bevy 0.13 it is configurable and consistent across all light types. We have also bumped the default EV100 to 9.7, which is a number we chose to best match Blender's default exposure. It also happens to be a nice "middle ground" value that sits somewhere between indoor lighting and overcast outdoor lighting.

You may notice that point lights now require significantly higher intensity values (in lumens). These (sometimes) million-lumen values might feel exorbitant. Just reassure yourself that (1) it actually requires a lot of light to meaningfully register in an overcast outdoor environment and (2) Blender exports lights on these scales (and we are calibrated to be as close as possible to them).

Camera-Driven UI #

Historically, Bevy's UI elements have been scaled and positioned in the context of the primary window, regardless of the camera settings. This approach made some UI experiences like split-screen multiplayer difficult to implement, and others such as having UI in multiple windows impossible.

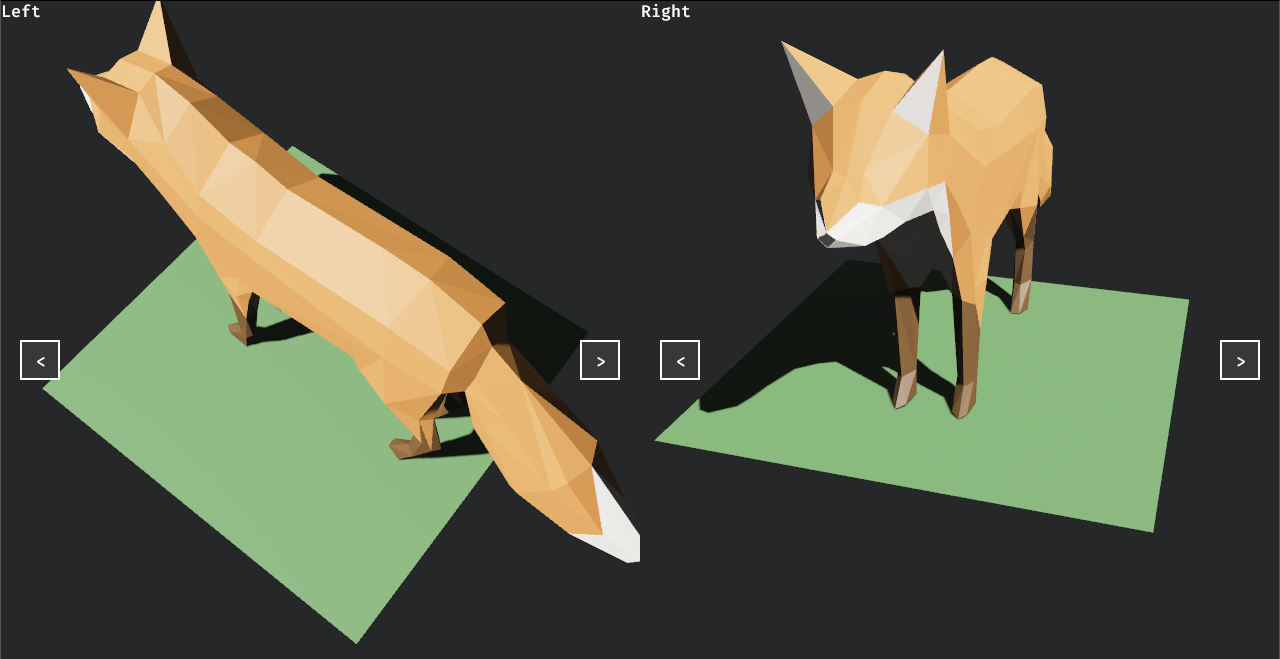

Bevy 0.13 introduces Camera-Driven UI. Each camera can now have its own UI root, rendering according to its viewport, scale factor, and a target which can be a secondary window or even a texture.

This change unlocks a variety of new UI experiences, including split-screen multiplayer, UI in multiple windows, displaying non-interactive UI in a 3D world, and more.

If there is one camera in the world, you don't need to do anything; your UI will be displayed in that camera's viewport.

commands.spawn(Camera3dBundle {

// Camera can have custom viewport, target, etc.

});

commands.spawn(NodeBundle {

// UI will be rendered to the singular camera's viewport

});

When more control is desirable, or there are multiple cameras, we introduce the TargetCamera component. This component can be added to a root UI node to specify which camera it should be rendered to.

// For split-screen multiplayer, we set up 2 cameras and 2 UI roots

let left_camera = commands.spawn(Camera3dBundle {

// Viewport is set to left half of the screen

}).id();

commands

.spawn((

TargetCamera(left_camera),

NodeBundle {

//...

}

));

let right_camera = commands.spawn(Camera3dBundle {

// Viewport is set to right half of the screen

}).id();

commands

.spawn((

TargetCamera(right_camera),

NodeBundle {

//...

}

));

With this change, we also removed the UiCameraConfig component. If you were using it to hide UI nodes, you can achieve the same outcome by configuring a Visibility component on the root node.

commands.spawn(Camera3dBundle::default());

commands.spawn(NodeBundle {

visibility: Visibility::Hidden, // UI will be hidden

// ...

});

Texture Slicing and Tiling #

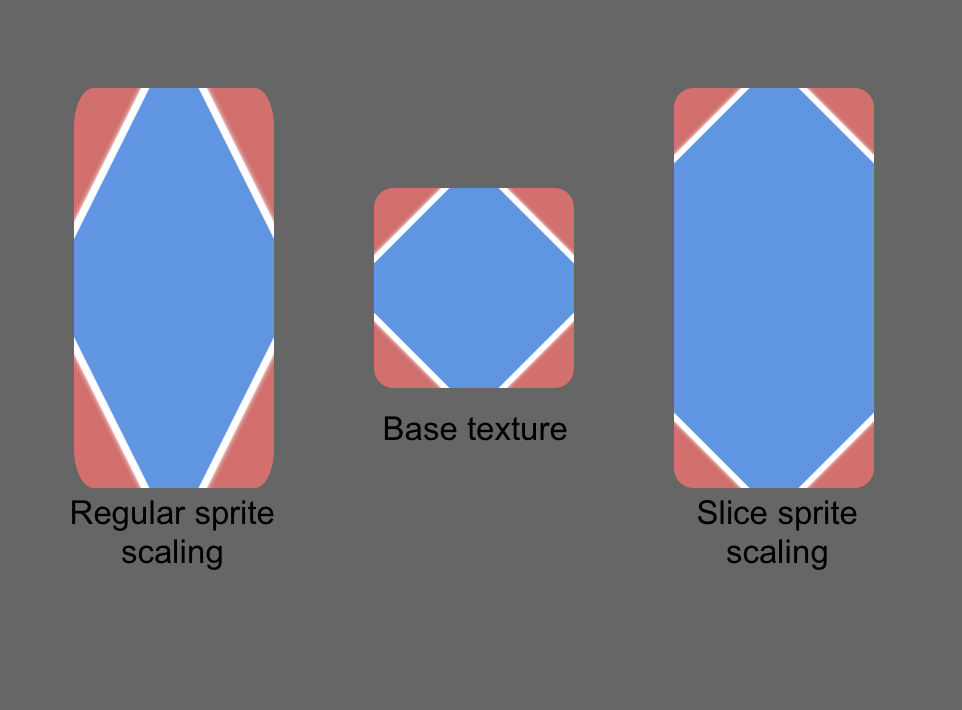

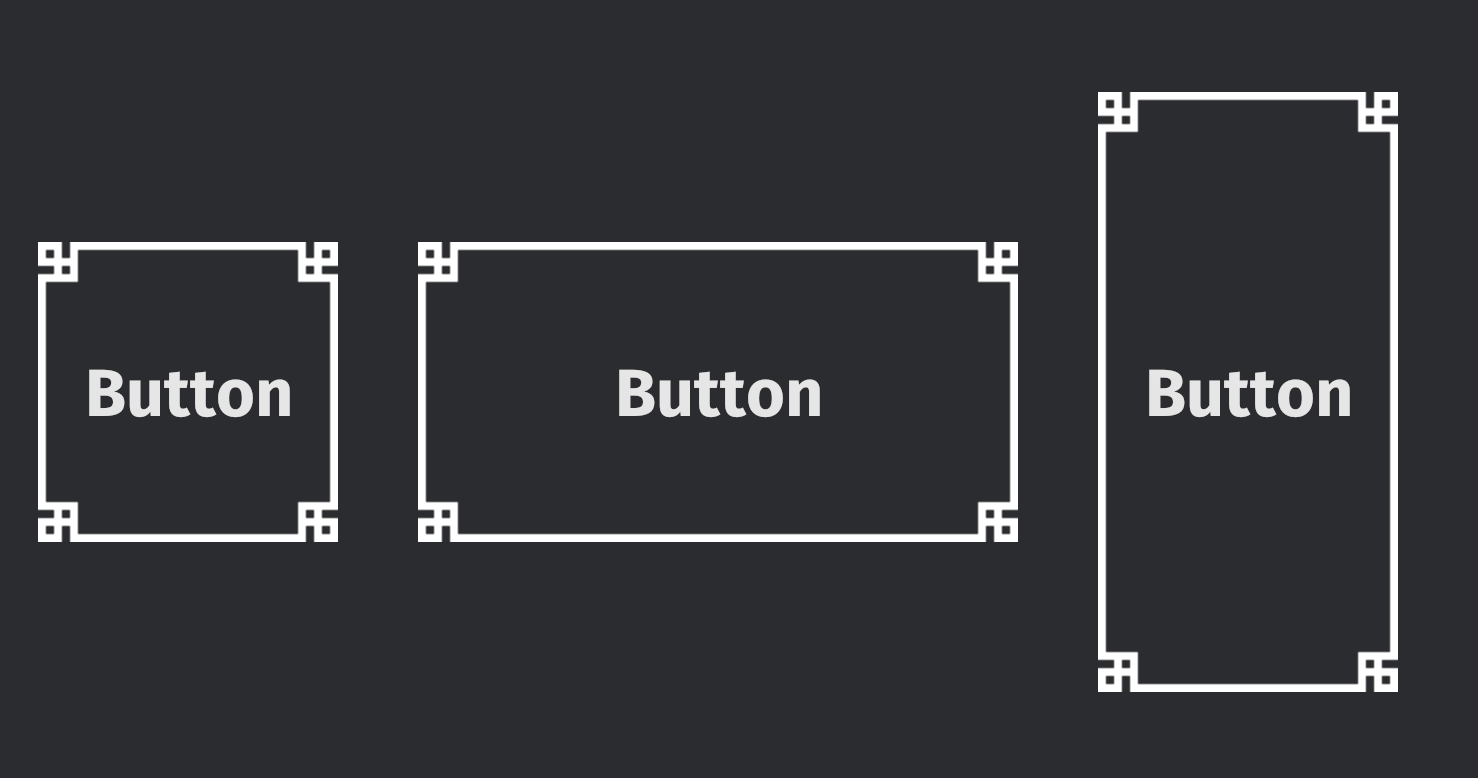

3D rendering gets a lot of love, but 2D features matter too! We're pleased to add CPU-based slicing and tiling to both bevy_sprite and bevy_ui in Bevy 0.13!

This behavior is controlled by a new optional component: ImageScaleMode.

9 slicing #

Adding ImageScaleMode::Sliced to an entity with a sprite or UI bundle enables 9 slicing, keeping the image proportions during resizes, avoiding stretching of the texture.

This is very useful for UI, allowing your pretty textures to look right even as the size of your element changes.

commands.spawn((

SpriteSheetBundle::default(),

ImageScaleMode::Sliced(TextureSlicer {

// The image borders are 20 pixels in every direction

border: BorderRect::square(20.0),

// we don't stretch the corners more than their actual size (20px)

max_corner_scale: 1.0,

..default()

}),

));
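
To see why the corners stay crisp, it helps to look at the layout math along a single axis: the two borders keep their pixel size and only the center span absorbs the change in target size. The sketch below is a standalone illustration with a hypothetical `slice_axis` function. The rule for shrinking borders when the target is too small is an assumption made for this example and may differ from what Bevy's slicer does.

```rust
/// Split one axis of a nine-sliced image into three destination spans.
/// Returns the (start, length) of the leading border, the stretched center,
/// and the trailing border when drawn `target` pixels long on that axis.
/// (Hypothetical helper for illustration; not Bevy's `TextureSlicer`.)
pub fn slice_axis(target: f32, border_start: f32, border_end: f32) -> [(f32, f32); 3] {
    // Assumption: if the borders alone exceed the target, shrink them evenly.
    let total = border_start + border_end;
    let scale = if total > target && total > 0.0 { target / total } else { 1.0 };
    let start = border_start * scale;
    let end = border_end * scale;
    // Only the center changes size as the target grows or shrinks.
    let center = (target - start - end).max(0.0);
    [(0.0, start), (start, center), (start + center, end)]
}
```

With the 20 pixel borders from the example above, a 200 pixel wide element gets a 160 pixel center between two untouched 20 pixel borders. Applying the same function to the vertical axis yields the full 3x3 grid.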

Tiling #

Adding ImageScaleMode::Tiled { .. } to your 2D sprite entities enables texture tiling: repeating the image until its entire area is filled. This is commonly used for backgrounds and surfaces.

commands.spawn((

SpriteSheetBundle::default(),

ImageScaleMode::Tiled {

// The image will repeat horizontally

tile_x: true,

// The image will repeat vertically

tile_y: true,

// The texture will repeat if the drawing rect is larger than the image size

stretch_value: 1.0,

},

));

Dynamic Queries #

In Bevy ECS, queries use a type-powered DSL. The full type of the query (what component to access, which filter to use) must be specified at compile time.

Sometimes we can't know what data the query wants to access at compile time. Some scenarios just cannot be done with static queries:

- Defining queries in scripting languages like Lua or JavaScript.

- Defining new components from a scripting language and querying them.

- Adding a runtime filter to entity inspectors like bevy-inspector-egui.

- Adding a Quake-style console to modify or query components from a prompt at runtime.

- Creating an editor with remote capabilities.

Dynamic queries make these all possible. And these are only the plans we've heard about so far!

The standard way of defining a Query is by using it as a system parameter:

fn take_damage(mut player_health: Query<(Entity, &mut Health), With<Player>>) {

// ...

}

This won't change. And for most (if not all) gameplay use cases, you will continue to happily use the delightfully simple Query API.

However, consider this situation: as a game or mod developer, I want to list entities with a specific component through a text prompt, similar to how the Quake console works. What would that look like?

#[derive(Resource)]

struct UserQuery(String);

// user_query is entered as a text prompt by the user when the game is running.

// In a system, it's quickly apparent that we can't use `Query`.

fn list_entities_system(user_query: Res<UserQuery>, query: Query<FIXME, With<FIXME>>) {}

// Even when using the more advanced `World` API, we are stuck.

fn list_entities(user_query: String, world: &mut World) {

// FIXME: what type goes here?

let query = world.query::<FIXME>();

}

It's impossible to choose a type based on the value of user_query! QueryBuilder solves this problem.

fn list_entities(

user_query: String,

type_registry: &TypeRegistry,

world: &mut World,

) -> Option<()> {

let name = user_query.split(' ').next()?;

let type_id = type_registry.get_with_short_type_path(name)?.type_id();

let component_id = world.components().get_id(type_id)?;

let mut query = QueryBuilder::<FilteredEntityRef>::new(world)

.ref_id(component_id)

.build();

for entity_ref in query.iter(world) {

let ptr = entity_ref.get_by_id(component_id);

// Convert `ptr` into a `&dyn Reflect` and use it.

}

Some(())

}

It is still an error-prone, complex, and unsafe API, but it makes something that was previously impossible possible. We expect third-party crates to provide convenient wrappers around the QueryBuilder API, some of which will undoubtedly make their way upstream.
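
The core idea (resolve a name to a type id at runtime, then use that id to find matching entities) can be shown without any engine at all. The sketch below is a standalone toy built on `std::any::TypeId`. `ToyRegistry`, `ToyWorld`, and this `list_entities` are hypothetical stand-ins and bear no relation to how Bevy stores components or builds queries.

```rust
use std::any::{Any, TypeId};
use std::collections::HashMap;

/// Stand-in for a type registry: maps a short type name to a runtime type id.
#[derive(Default)]
pub struct ToyRegistry {
    by_name: HashMap<&'static str, TypeId>,
}

impl ToyRegistry {
    pub fn register<T: Any>(&mut self, name: &'static str) {
        self.by_name.insert(name, TypeId::of::<T>());
    }
}

/// Stand-in for a world: one column of type-erased components per type id.
#[derive(Default)]
pub struct ToyWorld {
    columns: HashMap<TypeId, HashMap<u32, Box<dyn Any>>>,
}

impl ToyWorld {
    pub fn insert<T: Any>(&mut self, entity: u32, component: T) {
        self.columns
            .entry(TypeId::of::<T>())
            .or_default()
            .insert(entity, Box::new(component));
    }
}

/// Resolve the first word of `user_query` to a type id at runtime, then
/// list every entity that has that component, in ascending id order.
pub fn list_entities(
    user_query: &str,
    registry: &ToyRegistry,
    world: &ToyWorld,
) -> Option<Vec<u32>> {
    let name = user_query.split(' ').next()?;
    let type_id = registry.by_name.get(name)?;
    let mut entities: Vec<u32> = world
        .columns
        .get(type_id)
        .map(|column| column.keys().copied().collect())
        .unwrap_or_default();
    entities.sort_unstable();
    Some(entities)
}
```

Nothing in `list_entities` names a component type at compile time, which is exactly the property that a static Query cannot offer.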

Query Transmutation #

Have you ever wanted to pass a query to a function, but instead of having a Query<&Transform> you have a Query<(&Transform, &Velocity), With<Enemy>>? In Bevy 0.13 you can, thanks to the new QueryLens and Query::transmute_lens() method.

Query transmutes allow you to change a query into different query types as long as the components accessed are a subset of the original query. If you do try to access data that is not in the original query, this method will panic.

fn reusable_function(lens: &mut QueryLens<&Transform>) {

let query = lens.query();

// do something with the query...

}

// We can use the function in a system that takes the exact query.

fn system_1(mut query: Query<&Transform>) {

reusable_function(&mut query.as_query_lens());

}

// We can also use it with a query that does not match exactly

// by transmuting it.

fn system_2(mut query: Query<(&mut Transform, &Velocity), With<Enemy>>) {

let mut lens = query.transmute_lens::<&Transform>();

reusable_function(&mut lens);

}

Note that the QueryLens will still iterate over the same entities as the original Query it is derived from. A QueryLens<&Transform> taken from a Query<(&Transform, &Velocity)> will only include the Transform of entities with both Transform and Velocity components.

Besides removing parameters, you can also convert them, in limited ways, to different smart pointer types. One of the more useful conversions is changing a &mut to a &. See the documentation for more details.

One thing to take into consideration is that transmutation is not free. It works by creating a new state and copying cached data inside the original query. It's not an expensive operation, but you should avoid doing it inside a hot loop.

WorldQuery Trait Split #

A Query has two type parameters: one for the data to be fetched, and a second optional one for the filters.

In previous versions of Bevy both parameters simply required WorldQuery: there was nothing stopping you from using types intended as filters in the data position (or vice versa).

Apart from making the type signature of the Query items more complicated (see example below), this usually worked fine, as most filters had the same behavior in either position.

Unfortunately, this was not the case for Changed and Added, which had different (and undocumented) behavior in the data position. This could lead to bugs in user code.

To allow us to prevent this type of bug at compile time, the WorldQuery trait has been replaced by two traits: QueryData and QueryFilter. The data parameter of a Query must now be QueryData and the filter parameter must be QueryFilter.

Most user code should be unaffected or easy to migrate.

// Probably a subtle bug: `With` filter in the data position - will not compile in 0.13

fn my_system(query: Query<(Entity, With<ComponentA>)>)

{

// The type signature of the query items is `(Entity, ())`, which is usable but unwieldy

for (entity, _) in query.iter(){

}

}

// Idiomatic, compiles in both 0.12 and 0.13

fn my_system(query: Query<Entity, With<ComponentA>>)

{

for entity in query.iter(){

}

}

Automatically Insert apply_deferred Systems #

When writing gameplay code, you might commonly have one system that wants to immediately see the effects of commands queued in another system. Before Bevy 0.13, you would have to manually insert an apply_deferred system between the two, a special system which causes those commands to be applied when encountered. Bevy now detects when a system with commands is ordered relative to other systems and inserts the apply_deferred for you.

// Before 0.13

app.add_systems(

Update,

(

system_with_commands,

apply_deferred,

another_system,

).chain()

);

// After 0.13

app.add_systems(

Update,

(

system_with_commands,

another_system,

).chain()

);

This resolves a common beginner footgun: if two systems are ordered, shouldn't the second always see the results of the first?

Automatically inserted apply_deferred systems are optimized by automatically merging them if possible. In most cases, it is recommended to remove all manually inserted apply_deferred systems, as allowing Bevy to insert and merge these systems as needed will usually be both faster and involve less boilerplate.

// This will only add one apply_deferred system.

app.add_systems(

Update,

(

(system_1_with_commands, system_2).chain(),

(system_3_with_commands, system_4).chain(),

)

);
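
One way to picture why the two chains above can share a single sync point is to count, for every system, how many command flushes must have happened before it may run. Systems that need the same number of flushes can wait on the same sync point. The standalone sketch below illustrates that idea. `count_sync_points` is a hypothetical function, and Bevy's real scheduler operates on its dependency graph with more rules than shown here.

```rust
/// Count the sync points needed for a set of ordered systems.
///
/// * `has_deferred[i]` is true if system `i` queues commands.
/// * `edges` holds `(before, after)` ordering constraints, assumed acyclic.
///
/// (Hypothetical helper for illustration; not Bevy's scheduler.)
pub fn count_sync_points(has_deferred: &[bool], edges: &[(usize, usize)]) -> usize {
    let n = has_deferred.len();
    // distance[i] = number of sync points that must run before system i.
    let mut distance = vec![0usize; n];
    // Relax every edge repeatedly; n passes suffice for an acyclic graph.
    for _ in 0..n {
        for &(before, after) in edges {
            let needed = distance[before] + usize::from(has_deferred[before]);
            if needed > distance[after] {
                distance[after] = needed;
            }
        }
    }
    // Systems at the same distance share one sync point.
    distance.into_iter().max().unwrap_or(0)
}
```

For the example above (two independent chains, each starting with a system that has commands), every second system sits at distance 1, so one sync point is enough. A single chain in which two consecutive systems both queue commands would need two.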

If this new behavior does not work for you, please consult the migration guide. There are several new APIs that allow you to opt-out.

More Flexible One-Shot Systems #

In Bevy 0.12, we introduced one-shot systems, a handy way to call systems on demand without having to add them to a schedule. The initial implementation had some limitations in regard to what systems could and could not be used as one-shot systems. In Bevy 0.13, these limitations have been resolved.

One-shot systems now support inputs and outputs.

fn increment_sys(In(increment_by): In<i32>, mut counter: ResMut<Counter>) -> i32 {

counter.0 += increment_by;

counter.0

}

let mut world = World::new();

let id = world.register_system(increment_sys);

world.insert_resource(Counter(1));

let count_one = world.run_system_with_input(id, 5).unwrap(); // increment counter by 5 and return 6

let count_two = world.run_system_with_input(id, 2).unwrap(); // increment counter by 2 and return 8

Running a system now returns the system output as Ok(output). Note that output cannot be returned when calling one-shot systems through commands, because of their deferred nature.

Exclusive systems can now be registered as one-shot systems:

world.register_system(|world: &mut World| { /* do anything */ });

Boxed systems can now be registered with register_boxed_system.

These improvements round out one-shot systems significantly: they should now work just like any other Bevy system.

wgpu 0.19 Upgrade and Rendering Performance Improvements #

In Bevy 0.13 we upgraded from wgpu 0.17 to wgpu 0.19, which includes the long awaited wgpu arcanization that allows us to compile shaders asynchronously to avoid shader compilation stutters and multithread draw call creation for better performance in CPU-bound scenes.

Due to changes in wgpu 0.19, we've added a new webgpu feature to Bevy that is now required when doing WebAssembly builds targeting WebGPU. Disabling the webgl2 feature is no longer required when targeting WebGPU, but the new webgpu feature currently overrides the webgl2 feature when enabled. Library authors, please do not enable the webgpu feature by default. In the future we plan on allowing you to target both WebGL2 and WebGPU in the same WebAssembly binary, but we aren't quite there yet.

We've swapped the material and mesh bind groups, so that mesh data is now in bind group 1, and material data is in bind group 2. This greatly improved our draw call batching when combined with changing the sorting functions for the opaque passes to sort by pipeline and mesh. Previously we were sorting them by distance from the camera. These batching improvements mean we're doing fewer draw calls, which improves CPU performance, especially in larger scenes. We've also removed the get_instance_index function in shaders, as it was only required to work around an upstream bug that has been fixed in wgpu 0.19. For other shader or rendering changes, please see the migration guide and wgpu's changelog.

Many small changes both to Bevy and wgpu summed up to make a modest but measurable difference in our performance on realistic 3D scenes! We ran some quick tests on both Bevy 0.12 and Bevy 0.13 on the same machine on four complex scenes: Bistro, Sponza, San Miguel and Hidden Alley.

As you can see, these scenes are substantially more detailed than most video game environments, but that screenshot was being rendered in Bevy at better than 60 FPS at 1440p resolution! Between Bevy 0.12 and Bevy 0.13 we saw frame times decrease by about 5-10% across the scenes tested. Nice work!

Unload Rendering Assets from RAM #

Meshes and the textures used to define their materials take up a ton of memory: in many games, memory usage is the biggest limitation on the resolution and polygon count of the game! Moreover, transferring that data from system RAM (used by the CPU) to the VRAM (used by the GPU) can be a real performance bottleneck.

Bevy 0.13 adds the ability to unload this data from system RAM, once it has been successfully transferred to VRAM. To configure this behavior for your asset, set the RenderAssetUsages field to specify whether to retain the data in the main (CPU) world, the render (GPU) world, or both.

This behavior is currently off by default for most asset types as it has some caveats (given that the asset becomes unavailable to logic on the CPU), but we strongly recommend enabling it for your assets whenever possible for significant memory usage wins (and we will likely enable it by default in the future).

Texture atlases and font atlases now only extract data that's actually in use to VRAM, rather than wasting work sending all possible images or characters to VRAM every frame. Neat!

Better Batching Through Smarter Sorting #

One of the core techniques used to speed up rendering is to draw many similar objects together at the same time. In this case, Bevy was already using a technique called "batching", which allows us to combine multiple similar operations, reducing the number of expensive draw calls (instructions to the GPU) that are being made.

However, our strategy for defining these batches was far from optimal. Previously, we were sorting by distance to the camera, and then checking if multiple of the same meshes were adjacent to each other in that sorted list. In realistic scenes, this is unlikely to find many candidates for merging!

In Bevy 0.13, we first sort by pipeline (effectively the type of material being used), and then by mesh identity. This strategy results in better batching, improving overall FPS by double-digit percentages on the realistic scene tested!
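The effect of the sort key can be shown with a small, engine-free sketch. `DrawItem`, `count_batches` and the sample scene are hypothetical and only model the idea that adjacent items with the same pipeline and mesh can share a batch; Bevy's real batching logic is more involved.

```rust
/// One draw request. The ids are made up for illustration.
#[derive(Clone, Copy, Debug, PartialEq)]
struct DrawItem {
    pipeline: u32,
    mesh: u32,
    distance: f32,
}

/// Counts batches: a new batch starts whenever the (pipeline, mesh) pair
/// differs from the previous item in the list.
fn count_batches(items: &[DrawItem]) -> usize {
    let mut batches = 0;
    let mut previous: Option<(u32, u32)> = None;
    for item in items {
        let key = (item.pipeline, item.mesh);
        if previous != Some(key) {
            batches += 1;
            previous = Some(key);
        }
    }
    batches
}

/// Old strategy: order by distance from the camera.
fn sort_by_distance(items: &mut [DrawItem]) {
    items.sort_by(|a, b| a.distance.total_cmp(&b.distance));
}

/// New strategy: order by pipeline first, then by mesh.
fn sort_by_pipeline_then_mesh(items: &mut [DrawItem]) {
    items.sort_by_key(|item| (item.pipeline, item.mesh));
}

/// Four objects alternating between two meshes as they get farther away.
fn sample_scene() -> Vec<DrawItem> {
    vec![
        DrawItem { pipeline: 0, mesh: 1, distance: 1.0 },
        DrawItem { pipeline: 0, mesh: 2, distance: 2.0 },
        DrawItem { pipeline: 0, mesh: 1, distance: 3.0 },
        DrawItem { pipeline: 0, mesh: 2, distance: 4.0 },
    ]
}
```

In this contrived scene, distance sorting leaves every item in its own batch, while sorting by pipeline and mesh groups the four items into two batches.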

Animation Interpolation Methods #

Generally, animations are defined by their keyframes: snapshots of the position (and other state) of objects at moments along a timeline. But what happens between those keyframes? Game engines need to interpolate between them, smoothly transitioning from one state to the next.

The simplest interpolation method is linear: the animated object just moves an equal distance towards the next keyframe every unit of time. But this isn't always the desired effect! Both stop-motion-style and more carefully smoothed animations have their place.

Bevy now supports both step and cubic spline interpolation in animations. Most of the time, this will just be parsed correctly from the glTF files, but when setting VariableCurve manually, there's a new Interpolation field to set.
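As a rough illustration of how the three methods differ, here is a sketch on plain `f32` values. The function names are hypothetical and this is not Bevy's implementation; the cubic case uses the Hermite form that glTF specifies for its cubic spline interpolation, and assumes the tangents have already been scaled by the time between the two keyframes.

```rust
/// Step: hold the previous keyframe's value until the next keyframe is reached.
fn step(a: f32, b: f32, t: f32) -> f32 {
    if t < 1.0 { a } else { b }
}

/// Linear: move at a constant rate from `a` to `b`.
fn linear(a: f32, b: f32, t: f32) -> f32 {
    a + (b - a) * t
}

/// Cubic Hermite spline segment between keyframe values `a` and `b`.
/// `out_tangent_a` and `in_tangent_b` are assumed to be pre-scaled by the
/// duration of the segment.
fn cubic_spline(a: f32, out_tangent_a: f32, b: f32, in_tangent_b: f32, t: f32) -> f32 {
    let t2 = t * t;
    let t3 = t2 * t;
    (2.0 * t3 - 3.0 * t2 + 1.0) * a
        + (t3 - 2.0 * t2 + t) * out_tangent_a
        + (-2.0 * t3 + 3.0 * t2) * b
        + (t3 - t2) * in_tangent_b
}
```

With zero tangents, the cubic curve starts and ends at the keyframe values but eases in and out, so a quarter of the way through the segment it has covered less distance than the linear version.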

Animatable Trait #

When you think of "animation", you're probably imagining moving objects through space: translating them back and forth, rotating them, maybe even squashing and stretching them. But in modern game development, animation is a powerful shared set of tools and concepts for "changing things over time". Transforms are just the beginning: colors, particle effects, opacity and even boolean values like visibility can all be animated!

In Bevy 0.13, we've taken the first step towards this vision, with the Animatable trait.

/// An animatable value type.

pub trait Animatable: Reflect + Sized + Send + Sync + 'static {

/// Interpolates between `a` and `b` with an interpolation factor of `time`.

///

/// The `time` parameter here may not be clamped to the range `[0.0, 1.0]`.

fn interpolate(a: &Self, b: &Self, time: f32) -> Self;

/// Blends one or more values together.

///

/// Implementors should return a default value when no inputs are provided here.

fn blend(inputs: impl Iterator<Item = BlendInput<Self>>) -> Self;

/// Post-processes the value using resources in the [`World`].

/// Most animatable types do not need to implement this.

fn post_process(&mut self, _world: &World) {}

}

This is the first step towards animation blending and an asset-driven animation graph which is essential for shipping large scale 3D games in Bevy. But for now, this is just a building block. We've implemented this for a few key types (Transform, f32 and glam's Vec types) and published the trait. Slot it into your games and crates, and team up with other contributors to help bevy_animation become just as pleasant and featureful as the rest of the engine.

Extensionless Asset Support #

In prior versions of Bevy, the default way to choose an AssetLoader for a particular asset was entirely based on file extensions. The recent addition of .meta files allowed for specifying more granular loading behavior, but file extensions were still required. In Bevy 0.13, the asset type can now be used to infer the AssetLoader.

// Uses AudioSettingsAssetLoader

let audio = asset_server.load("data/audio.json");

// Uses GraphicsSettingsAssetLoader

let graphics = asset_server.load("data/graphics.json");

This is possible because every AssetLoader is required to declare what type of asset it loads, not just the extensions it supports. Since the load method on AssetServer was already generic over the type of asset to return, this information is already available to the AssetServer.

// The above example with types shown

let audio: Handle<AudioSettings> = asset_server.load::<AudioSettings>("data/audio.json");

let graphics: Handle<GraphicsSettings> = asset_server.load::<GraphicsSettings>("data/graphics.json");

Now we can also use it to choose the AssetLoader itself.

When loading an asset, the loader is chosen by checking (in order):

- The asset meta file

- The type of Handle<A> to return

- The file extension

// This will be inferred from context to be a glTF asset, ignoring the file extension

let gltf_handle = asset_server.load("models/cube/cube.gltf");

// This still relies on file extension due to the label

let cube_handle = asset_server.load("models/cube/cube.gltf#Mesh0/Primitive0");

// ^^^^^^^^^^^^^^^^^

// | Asset path label
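The lookup order listed above can be sketched as a simple fallback chain. This is an engine-free illustration, not the `AssetServer`'s actual code: `choose_loader` and `extension_of` are hypothetical helpers, and loaders are represented by plain names.

```rust
use std::path::Path;

/// Picks a loader name using the documented priority:
/// meta file first, then the requested asset type, then the file extension.
/// Each argument is the loader (if any) that the corresponding source names.
fn choose_loader<'a>(
    from_meta: Option<&'a str>,
    from_asset_type: Option<&'a str>,
    from_extension: Option<&'a str>,
) -> Option<&'a str> {
    from_meta.or(from_asset_type).or(from_extension)
}

/// Returns the extension of a path without the leading dot, if it has one.
fn extension_of(path: &str) -> Option<&str> {
    Path::new(path).extension()?.to_str()
}
```

A path such as `LICENSE` has no extension at all, so in this model a loader can only be found through the meta file or the requested asset type.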

File Extensions Are Now Optional #

Since the asset type can be used to infer the loader, neither the file to be loaded nor the AssetLoader need to have file extensions.

pub trait AssetLoader: Send + Sync + 'static {

/* snip */

/// Returns a list of extensions supported by this [`AssetLoader`], without the preceding dot.

fn extensions(&self) -> &[&str] {

// A default implementation is now provided

&[]

}

}

Previously, an asset loader with no extensions was very cumbersome to use. Now, they can be used just as easily as any other loader. Likewise, if a file is missing its extension, Bevy can now choose the appropriate loader.

let license = asset_server.load::<Text>("LICENSE");

Appropriate file extensions are still recommended for good project management, but this is now a recommendation rather than a hard requirement.

Multiple Asset Loaders With The Same Asset #

Now, a single path can be used by multiple asset handles as long as they are distinct asset types.

// Load the sound effect for playback

let bang = asset_server.load::<AudioSource>("sound/bang.ogg");

// Load the raw bytes of the same sound effect (e.g., to send over the network)

let bang_blob = asset_server.load::<Blob>("sound/bang.ogg");

// Returns the bang handle since it was already loaded

let bang_again = asset_server.load::<AudioSource>("sound/bang.ogg");

Note that the above example uses turbofish syntax for clarity. In practice, it's not required, since the type of asset loaded can usually be inferred at the call site.

#[derive(Resource)]

struct SoundEffects {

bang: Handle<AudioSource>,

bang_blob: Handle<Blob>,

}

fn setup(mut effects: ResMut<SoundEffects>, asset_server: Res<AssetServer>) {

effects.bang = asset_server.load("sound/bang.ogg");

effects.bang_blob = asset_server.load("sound/bang.ogg");

}

The custom_asset example has been updated to demonstrate these new features.

Texture Atlas Rework #

Texture atlases efficiently combine multiple images into a single larger texture called an atlas.

Bevy 0.13 significantly reworks them to reduce boilerplate and make them more data-oriented. Say goodbye to TextureAtlasSprite and UiTextureAtlasImage components (and their corresponding Bundle types). Texture atlasing is now enabled by adding a single additional component to normal sprite and image entities: TextureAtlas.

Why? #

Texture atlases (sometimes called sprite sheets) simply draw a custom section of a given texture. This is still Sprite-like or Image-like behavior, we're just drawing a subset. The new TextureAtlas component embraces that by storing:

- a Handle<TextureAtlasLayout>, an asset mapping an index to a Rect section of a texture

- a usize index defining which section Rect of the layout we want to display
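To illustrate the "layout plus index" split, here is a small sketch that builds a grid layout and looks up a section by index. `Rect`, `grid_layout` and `section` are hypothetical stand-ins defined for this example; they are not the `TextureAtlasLayout` API.

```rust
/// A rectangular section of a texture, in pixels.
#[derive(Clone, Copy, Debug, PartialEq)]
struct Rect {
    min: (u32, u32),
    max: (u32, u32),
}

/// Builds a row-major grid of equally sized tiles.
/// The position of each rect in the returned list is its atlas index.
fn grid_layout(tile_width: u32, tile_height: u32, columns: u32, rows: u32) -> Vec<Rect> {
    let mut rects = Vec::with_capacity((columns * rows) as usize);
    for row in 0..rows {
        for column in 0..columns {
            let min = (column * tile_width, row * tile_height);
            let max = (min.0 + tile_width, min.1 + tile_height);
            rects.push(Rect { min, max });
        }
    }
    rects
}

/// Resolves an index to the section it refers to, if the index is in range.
fn section(layout: &[Rect], index: usize) -> Option<Rect> {
    layout.get(index).copied()
}
```

Because the layout is shared data and the index is a single number, switching which sprite of a sheet is displayed only requires changing the index.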

Light RenderLayers #

RenderLayers are Bevy's answer to quickly hiding and showing entities en masse by filtering what a Camera can see ... great for things like customizing the first-person view of what a character is holding (or making sure vampires don't show up in your mirrors!).

RenderLayers now play nice with lights, fixing a serious limitation to make sure this awesome feature can shine appropriately!

Bind Group Layout Entries #

We added a new API, inspired by the bind group entries API from 0.12, to declare bind group layouts. This new API is based on using built-in functions to define bind group layout resources and automatically set the index based on its position.

Here's a short example of how declaring a new layout looks:

let layout = render_device.create_bind_group_layout(

"post_process_bind_group_layout",

&BindGroupLayoutEntries::sequential(

ShaderStages::FRAGMENT,

(

texture_2d_f32(),

sampler(SamplerBindingType::Filtering),

uniform_buffer::<PostProcessingSettings>(false),

),

),

);

Type-Safe Labels for the RenderGraph #

Bevy uses Rust's type system extensively when defining labels, letting developers lean on tooling to catch typos and ease refactors. But this didn't apply to Bevy's render graph. In the render graph, hard-coded—and potentially overlapping—strings were used to define nodes and sub-graphs.

// Before 0.13

impl MyRenderNode {

pub const NAME: &'static str = "my_render_node";

}

In Bevy 0.13, we're using a more robust way to name render nodes and render graphs with the help of the type-safe label pattern already used by bevy_ecs.

// After 0.13

#[derive(Debug, Hash, PartialEq, Eq, Clone, RenderLabel)]

pub struct PrettyFeature;

With those, the long paths for const-values become shorter and cleaner:

// Before 0.13

render_app

.add_render_graph_node::<ViewNodeRunner<PrettyFeatureNode>>(

core_3d::graph::NAME,

PrettyFeatureNode::NAME,

)

.add_render_graph_edges(

core_3d::graph::NAME,

&[

core_3d::graph::node::TONEMAPPING,

PrettyFeatureNode::NAME,

core_3d::graph::node::END_MAIN_PASS_POST_PROCESSING,

],

);

// After 0.13

use bevy::core_pipeline::core_3d::graph::{Node3d, Core3d};

render_app

.add_render_graph_node::<ViewNodeRunner<PrettyFeatureNode>>(

Core3d,

PrettyFeature,

)

.add_render_graph_edges(

Core3d,

(

Node3d::Tonemapping,

PrettyFeature,

Node3d::EndMainPassPostProcessing,

),

);

When you need dynamic labels for render nodes, those can still be achieved via e.g. tuple structs:

#[derive(Debug, Hash, PartialEq, Eq, Clone, RenderLabel)]

pub struct MyDynamicLabel(&'static str);

This is particularly nice because we don't have to store strings here: we can use integers, custom enums or any other hashable type.
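The collision problem with string labels, and how types avoid it, can be sketched without any engine code. Everything below is hypothetical: `plugin_a`, `plugin_b`, `register_by_name` and `register_by_type` only model the idea, and this simplified version keys purely on the type, ignoring any value stored inside a dynamic label.

```rust
use std::any::TypeId;
use std::collections::HashSet;

/// Two unrelated plugins that both chose the name `Blur`.
mod plugin_a {
    #[derive(Debug, Clone, PartialEq, Eq, Hash)]
    pub struct Blur;
}

mod plugin_b {
    #[derive(Debug, Clone, PartialEq, Eq, Hash)]
    pub struct Blur;
}

/// String labels: returns `false` if the name was already taken.
fn register_by_name(graph: &mut HashSet<&'static str>, name: &'static str) -> bool {
    graph.insert(name)
}

/// Typed labels: identity comes from the type itself, so two types with the
/// same name in different modules never collide.
fn register_by_type<L: 'static>(graph: &mut HashSet<TypeId>, _label: L) -> bool {
    graph.insert(TypeId::of::<L>())
}
```

Registering `"blur"` twice by name fails the second time, while `plugin_a::Blur` and `plugin_b::Blur` both register successfully because the compiler treats them as distinct types.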

Winit Upgrade #

Through the heroic efforts of our contributors and reviewers, Bevy is now upgraded to use winit 0.29. winit is our windowing library: it abstracts over all the different operating systems and input devices that end users might have, and provides a uniform API to enable a write-once run-anywhere experience. While this brings with it the usual litany of valuable bug fixes and stability improvements, the critical change revolves around how KeyCode is handled.

Previously, KeyCode represented the logical meaning of a key on a keyboard: pressing the same button on the same keyboard when swapping between QWERTY and AZERTY keyboard layouts would give a different result! Now, KeyCode represents the physical location of the key. Lovers of WASD games know that this is a much better default for games. For most Bevy developers, you can leave your existing code untouched and simply benefit from better default keybindings for users on non-QWERTY keyboards or layouts. If you need information about the logical keys pressed, use the ReceivedCharacter event.
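The difference between physical and logical keys can be illustrated with a tiny model. `PhysicalKey`, `Layout`, `logical_char` and `movement` are hypothetical names for this sketch and do not mirror Bevy's or winit's types; only the four WASD positions are modelled.

```rust
/// Key positions, named after the character they produce on a US QWERTY layout.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
enum PhysicalKey {
    KeyW,
    KeyA,
    KeyS,
    KeyD,
}

#[derive(Clone, Copy)]
enum Layout {
    Qwerty,
    Azerty,
}

/// The character the operating system reports for a key position.
fn logical_char(layout: Layout, key: PhysicalKey) -> char {
    match (layout, key) {
        // AZERTY swaps A with Q and W with Z relative to QWERTY.
        (Layout::Azerty, PhysicalKey::KeyW) => 'z',
        (Layout::Azerty, PhysicalKey::KeyA) => 'q',
        (_, PhysicalKey::KeyW) => 'w',
        (_, PhysicalKey::KeyA) => 'a',
        (_, PhysicalKey::KeyS) => 's',
        (_, PhysicalKey::KeyD) => 'd',
    }
}

/// Movement bound to key positions works the same on every layout.
fn movement(key: PhysicalKey) -> (i32, i32) {
    match key {
        PhysicalKey::KeyW => (0, 1),
        PhysicalKey::KeyA => (-1, 0),
        PhysicalKey::KeyS => (0, -1),
        PhysicalKey::KeyD => (1, 0),
    }
}
```

Bindings expressed in terms of key positions keep the familiar cluster under the player's left hand, whereas bindings on the characters `w`, `a`, `s`, `d` would scatter across the keyboard on an AZERTY layout.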

Multiple Gizmo Configurations #

Gizmos let you quickly draw shapes using an immediate mode API. Here is how you use them:

// Bevy 0.12.1

fn set_gizmo_width(mut config: ResMut<GizmoConfig>) {

// Set the line width of every gizmo with this global configuration resource.

config.line_width = 5.0;

}

fn draw_circles(mut gizmos: Gizmos) {

// Draw two circles with a 5-pixel outline

gizmos.circle_2d(vec2(100., 0.), 120., Color::NAVY);

gizmos.circle_2d(vec2(-100., 0.), 120., Color::ORANGE);

}

Add a Gizmos system param and simply call a few methods. Cool!

Gizmos are also great for crate authors, who can use the same API. For example, the oxidized_navigation navmesh library uses gizmos for its debug overlay. Neat!

However, there is only one global configuration. Therefore, a dependency could very well affect the game's gizmos. It could even make them completely unusable.

Not so great. How to solve this? Gizmo groups.

Now, Gizmos comes with an optional parameter. By default, it uses a global configuration:

fn draw_circles(mut default_gizmos: Gizmos) {

default_gizmos.circle_2d(vec2(100., 0.), 120., Color::NAVY);

}

But with a GizmoConfigGroup parameter, Gizmos can choose a distinct configuration:

fn draw_circles(

mut default_gizmos: Gizmos,

// this uses the distinct NavigationGroup config

mut navigation_gizmos: Gizmos<NavigationGroup>,

) {

// Two circles with different outline width

default_gizmos.circle_2d(vec2(100., 0.), 120., Color::NAVY);

navigation_gizmos.circle_2d(vec2(-100., 0.), 120., Color::ORANGE);

}

Create your own gizmo config group by deriving GizmoConfigGroup, and registering it to the App:

#[derive(Default, Reflect, GizmoConfigGroup)]

pub struct NavigationGroup;

impl Plugin for NavigationPlugin {

fn build(&self, app: &mut App) {

app

.init_gizmo_group::<NavigationGroup>();

// ... rest of plugin initialization.

}

}

And this is how you set the configuration of gizmo groups to different values:

// Bevy 0.13.0

fn set_gizmo_width(mut config_store: ResMut<GizmoConfigStore>) {

let config = config_store.config_mut::<DefaultGizmoConfigGroup>().0;

config.line_width = 20.0;

let navigation_config = config_store.config_mut::<NavigationGroup>().0;

navigation_config.line_width = 10.0;

}

Now, the navigation gizmos have a fully separate configuration and don't conflict with the game's gizmos.

Not only that, but the game dev can integrate and toggle the navigation gizmos with their own debug tools however they wish. Be it a hotkey, a debug overlay UI button, or an RPC call. The world is your oyster.
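One way to picture how a marker type selects a separate configuration is a store keyed by type. The sketch below is a simplified, hypothetical model (`Config`, `ConfigStore`, `DefaultGroup` and the default values are all made up for illustration); it is not how `GizmoConfigStore` is implemented.

```rust
use std::any::TypeId;
use std::collections::HashMap;

/// Per-group settings. The default values here are arbitrary.
#[derive(Clone, Debug, PartialEq)]
struct Config {
    line_width: f32,
    enabled: bool,
}

impl Default for Config {
    fn default() -> Self {
        Config { line_width: 2.0, enabled: true }
    }
}

/// Holds one `Config` per marker type.
#[derive(Default)]
struct ConfigStore {
    configs: HashMap<TypeId, Config>,
}

impl ConfigStore {
    /// Mutable access to the config for group `G`, created on first use.
    fn config_mut<G: 'static>(&mut self) -> &mut Config {
        self.configs.entry(TypeId::of::<G>()).or_default()
    }

    /// A copy of the config for group `G`, or the default if none was set.
    fn config<G: 'static>(&self) -> Config {
        self.configs
            .get(&TypeId::of::<G>())
            .cloned()
            .unwrap_or_default()
    }
}

/// Marker types: they carry no data and only select a config.
struct DefaultGroup;
struct NavigationGroup;
```

Since each marker type maps to its own entry, a library can disable or restyle its own group without touching the settings the game uses for its default gizmos.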

glTF Extensions #

glTF is a popular standardized open file format, used to store and share 3D models and scenes between different programs. The trouble with standards though is that you eventually want to customize it, just a little, to better meet your needs. Khronos Group, in their wisdom, foresaw this and defined a standardized way to customize the format called extensions.

Extensions can be readily exported from other tools (like Blender), and contain all sorts of other useful information: from bleeding edge physically-based material information like anisotropy to performance hints like how to instance meshes.

Because Bevy parses loaded glTFs into our own entity-based hierarchy of objects, getting access to this information when you want to do new rendering things can be hard! With the changes by CorneliusCornbread, you can configure the loader to store a raw copy of the glTF file itself with your loaded asset, allowing you to parse and reprocess this information however you please.

Asset Transformers #

Asset processing, at its core, involves implementing the Process trait, which takes some byte data representing an asset, transforms it, and then returns the processed byte data. However, implementing the Process trait by hand is somewhat involved, and so a generic LoadAndSave<L: AssetLoader, S: AssetSaver> Process implementation was written to make asset processing more ergonomic.

Using the LoadAndSave Process implementation, the previous Asset processing pipeline had four stages:

- An AssetReader reads some asset source (filesystem, http, etc) and gets the byte data of an asset.

- An AssetLoader reads the byte data and converts it to a Bevy Asset.

- An AssetSaver takes a Bevy Asset, processes it, and then converts it back into byte data.

- An AssetWriter then writes the asset byte data back to the asset source.

AssetSavers were responsible for both transforming an asset and converting it into byte data. However, this posed a bit of an issue for code reusability. Every time you wanted to transform some asset, such as an image, you would need to rewrite the portion that converts the asset to byte data. To solve this, AssetSavers are now solely responsible for converting an asset into byte data, and AssetTransformers which are responsible for transforming an asset were introduced. A new LoadTransformAndSave<L: AssetLoader, T: AssetTransformer, S: AssetSaver> Process implementation was added to utilize the new AssetTransformers.

The new asset processing pipeline, using the LoadTransformAndSave Process implementation, has five stages:

- An AssetReader reads some asset source (filesystem, http, etc) and gets the byte data of an asset.

- An AssetLoader reads the byte data and converts it to a Bevy Asset.

- An AssetTransformer takes an asset and transforms it in some way.

- An AssetSaver takes a Bevy Asset and converts it back into byte data.

- An AssetWriter then writes the asset byte data back to the asset source.

In addition to having better code reusability, this change encourages writing AssetSavers for various common asset types, which could be used to add runtime asset saving functionality to the AssetServer.

The previous LoadAndSave Process implementation still exists, as there are some cases where an asset transformation step is unnecessary, such as when saving assets into a compressed format.

See the Asset Processing Example for a more detailed look into how to use LoadTransformAndSave to process a custom asset.
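The separation of responsibilities in the middle three stages can be sketched with three small traits. This is a deliberately simplified, synchronous model with hypothetical names (`Loader`, `Transformer`, `Saver`, `load_transform_and_save`); Bevy's real traits are asynchronous, carry settings and error types, and allow the transformer to change the asset type.

```rust
/// Turns raw bytes into an in-memory asset.
trait Loader {
    type Asset;
    fn load(&self, bytes: &[u8]) -> Self::Asset;
}

/// Changes an asset without caring how it is stored on disk.
trait Transformer {
    type Asset;
    fn transform(&self, asset: Self::Asset) -> Self::Asset;
}

/// Turns an asset back into bytes without changing its meaning.
trait Saver {
    type Asset;
    fn save(&self, asset: &Self::Asset) -> Vec<u8>;
}

/// Runs the load, transform and save stages in order.
fn load_transform_and_save<L, T, S>(loader: &L, transformer: &T, saver: &S, bytes: &[u8]) -> Vec<u8>
where
    L: Loader,
    T: Transformer<Asset = L::Asset>,
    S: Saver<Asset = L::Asset>,
{
    let asset = loader.load(bytes);
    let asset = transformer.transform(asset);
    saver.save(&asset)
}

/// Example stages operating on text.
struct TextLoader;
struct Uppercase;
struct TextSaver;

impl Loader for TextLoader {
    type Asset = String;
    fn load(&self, bytes: &[u8]) -> String {
        String::from_utf8_lossy(bytes).into_owned()
    }
}

impl Transformer for Uppercase {
    type Asset = String;
    fn transform(&self, asset: String) -> String {
        asset.to_uppercase()
    }
}

impl Saver for TextSaver {
    type Asset = String;
    fn save(&self, asset: &String) -> Vec<u8> {
        asset.as_bytes().to_vec()
    }
}
```

In this model `TextSaver` can be reused with any other text transformer, which is the reusability benefit the split is meant to provide.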

Entity Optimizations #

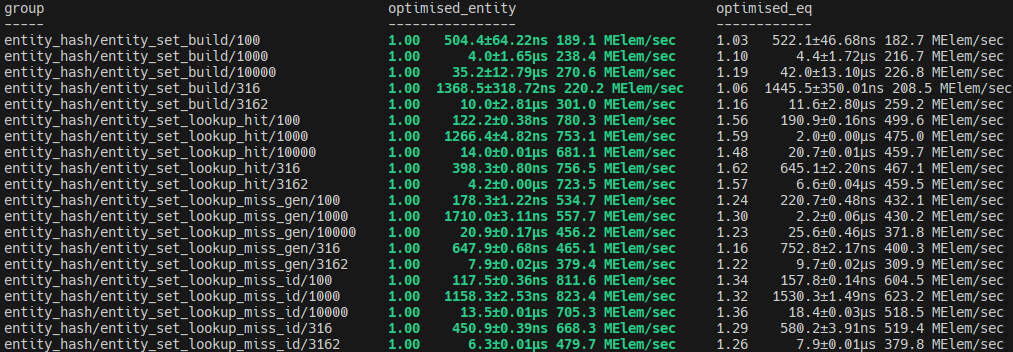

Entity (Bevy's 64-bit unique identifier for entities) received several changes this cycle, laying some more groundwork for relations alongside related, and nice to have, performance optimizations. The work here involved a lot of deep-diving into compiler codegen/assembly output, along with running lots of benchmarks and tests to ensure the changes didn't cause breakages or major problems. Although the work here was dealing with mostly safe code, there were lots of underlying assumptions being changed that could have impacted code elsewhere. This was the most "micro-optimization" oriented set of changes in Bevy 0.13.

- #9797: created a unified identifier type, paving the path for us to use the same fast, complex code in both our Entity type and the much-awaited relations

- #9907: allowed us to store Option<Entity> in the same number of bits as Entity, by changing the layout of our Entity type to reserve exactly one u64 value for the None variant

- #10519: swapped us to a manually crafted PartialEq and Hash implementation for Entity to improve speed and save instructions in our hot loops

- #10558: combined the approach of #9907 and #10519 to optimize Entity's layout further, and optimized our PartialOrd and Ord implementations!

- #10648: further optimized our entity hashing, changing how we multiply in the hash to save one precious assembly instruction in the optimized compiler output

Full credit is also due to the authors who pursued similar work in #2372 and #3788: while their work was not ultimately merged, it was an incredibly valuable inspiration and source of prior art to base these more recent changes on.

The above results show from where we started (optimised_eq being the first PR that introduced the benchmarks) to where we are now with all the optimizations in place (optimised_entity). There are improvements across the board, with clear performance benefits that should impact multiple areas of the codebase, not just when hashing entities.

There are a ton of crunchy, well-explained details in the linked PRs, including some fascinating assembly output analysis. If that interests you, open some new tabs in the background!
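The general technique behind storing an `Option` in the same number of bits is a "niche": a bit pattern the type promises never to use. Below is a minimal sketch of that idea, assuming a hypothetical `EntityId` with a non-zero generation field; Bevy's actual field layout may differ, and the size results reflect how current rustc lays out these types.

```rust
use std::mem::size_of;
use std::num::NonZeroU32;

/// Hypothetical identifier whose generation can never be zero.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
struct EntityId {
    index: u32,
    generation: NonZeroU32,
}

impl EntityId {
    /// Returns `None` if `generation` is zero, the reserved value.
    fn new(index: u32, generation: u32) -> Option<Self> {
        Some(EntityId {
            index,
            generation: NonZeroU32::new(generation)?,
        })
    }

    /// Packs the id into 64 bits: generation in the high half, index in the low half.
    fn to_bits(self) -> u64 {
        (u64::from(self.generation.get()) << 32) | u64::from(self.index)
    }
}

/// The same data without a reserved value, for comparison.
#[allow(dead_code)]
struct PlainId {
    index: u32,
    generation: u32,
}

/// True when wrapping the id in `Option` costs no extra space.
fn option_is_free() -> bool {
    size_of::<Option<EntityId>>() == size_of::<EntityId>()
}

/// True when `Option<PlainId>` needs extra space for a discriminant.
fn plain_option_is_larger() -> bool {
    size_of::<Option<PlainId>>() > size_of::<PlainId>()
}
```

The compiler can use the forbidden zero generation to represent `None`, so the optional form needs no separate discriminant, while the plain version has to grow to make room for one.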

Porting Query::for_each to QueryIter::fold override #

Previously, getting the full performance out of iterating over queries required Query::for_each, which let the compiler apply auto-vectorization and internal iteration optimizations. However, this isn't idiomatic Rust, and because it is not an iterator method, you can't use it in an iterator chain. It is possible to get the same benefits for some iterator methods, which is what #6773 by @james7132 set out to achieve. By providing an override to QueryIter::fold, it was possible to port the iteration strategies of Query::for_each so that Query::iter and co could achieve the same gains. Not every iterator method currently benefits from this, as some require overriding QueryIter::try_fold, which is currently still a nightly-only optimisation. The Rust standard library uses this same approach.

This deduplicated code in a few areas, such as no longer requiring both Query::for_each and Query::for_each_mut, as one just needs to call Query::iter or Query::iter_mut instead. So code like:

fn some_system(mut q_transform: Query<&mut Transform, With<Npc>>) {

q_transform.for_each_mut(|transform| {

// Do something...

});

}

Becomes:

fn some_system(mut q_transform: Query<&mut Transform, With<Npc>>) {

q_transform.iter_mut().for_each(|transform| {

// Do something...

});

}

The assembly output was compared as well between what was on main branch versus the PR, with no tangible differences being seen between the old Query::for_each and the new QueryIter::for_each() output, validating the approach and ensuring the internal iteration optimizations were being applied.

As a plus, the same internal iteration optimizations in Query::par_for_each now reuse code from for_each, deduplicating code there as well and enabling users to make use of par_iter().for_each(). As a whole, this means there's no longer any need for Query::for_each, Query::for_each_mut, Query::par_for_each, or Query::par_for_each_mut, so these methods have been deprecated for 0.13 and will be removed in 0.14.
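The technique of overriding `fold` can be shown on a small custom iterator. The sketch below is not Bevy's `QueryIter`; `ChunkedIter` and its helpers are hypothetical, with a list of `Vec`s standing in for the separate storage blocks a query walks over. It relies on the fact that the standard library's default `Iterator::for_each` is implemented in terms of `fold`.

```rust
use std::cell::Cell;

/// Iterates over values stored in several contiguous chunks.
struct ChunkedIter<'a> {
    chunks: &'a [Vec<i32>],
    chunk: usize,
    offset: usize,
    /// Counts how often the specialised `fold` ran (for demonstration only).
    fold_calls: &'a Cell<u32>,
}

impl<'a> Iterator for ChunkedIter<'a> {
    type Item = i32;

    // External iteration: every call re-checks which chunk we are in.
    fn next(&mut self) -> Option<i32> {
        while self.chunk < self.chunks.len() {
            if let Some(value) = self.chunks[self.chunk].get(self.offset) {
                self.offset += 1;
                return Some(*value);
            }
            self.chunk += 1;
            self.offset = 0;
        }
        None
    }

    // Internal iteration: one tight loop per chunk, starting from wherever
    // `next` left off.
    fn fold<B, F>(self, init: B, mut f: F) -> B
    where
        F: FnMut(B, i32) -> B,
    {
        self.fold_calls.set(self.fold_calls.get() + 1);
        let mut acc = init;
        for (i, chunk) in self.chunks.iter().enumerate().skip(self.chunk) {
            let start = if i == self.chunk { self.offset } else { 0 };
            for &value in &chunk[start..] {
                acc = f(acc, value);
            }
        }
        acc
    }
}

fn chunked<'a>(chunks: &'a [Vec<i32>], fold_calls: &'a Cell<u32>) -> ChunkedIter<'a> {
    ChunkedIter { chunks, chunk: 0, offset: 0, fold_calls }
}

/// Sums via `for_each`, which is routed through the `fold` override.
fn sum_with_for_each(chunks: &[Vec<i32>], fold_calls: &Cell<u32>) -> i32 {
    let mut total = 0;
    chunked(chunks, fold_calls).for_each(|value| total += value);
    total
}

/// Sums via repeated `next` calls, which never touches `fold`.
fn sum_with_next(chunks: &[Vec<i32>], fold_calls: &Cell<u32>) -> i32 {
    let mut iter = chunked(chunks, fold_calls);
    let mut total = 0;
    while let Some(value) = iter.next() {
        total += value;
    }
    total
}
```

Both helpers produce the same sum, but only the `for_each` path goes through the per-chunk loops, which is the shape of code that compilers tend to optimize well.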

Reducing TableRow as Casting #

Not all improvements in our ECS internals were focused on performance. Some small changes were made to improve type safety and tidy up some of the codebase to have less as casting being done on various call sites for TableRow. The problem with as casting is that in some cases, the cast will fail by truncating the value silently, which could then cause havoc by accessing the wrong row and so forth. #10811 by @bushrat011899 was put forward to clean up the API around TableRow, providing convenience methods backed by asserts to ensure the casting operations could never fail, or if they did, they'd panic correctly.

Naturally, adding asserts in potentially hot codepaths was cause for some concern, necessitating considerable benchmarking efforts to confirm whether there were regressions and how large they were. With careful placement of the new asserts, the detected regression for these cases was in the region of 0.1%, well within noise. But the benefit was a less error-prone API and more robust code. With a complex unsafe codebase like bevy_ecs, every little bit helps.
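The hazard with `as` casts, and the assert-backed alternative, can be sketched in a few lines. `Row` and its constructors are hypothetical names for this illustration and are not the actual `TableRow` API.

```rust
/// Hypothetical row index stored in 32 bits.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct Row(u32);

impl Row {
    /// Unchecked: an `as` cast silently keeps only the low 32 bits.
    fn from_u64_truncating(index: u64) -> Self {
        Row(index as u32)
    }

    /// Checked: panics rather than pointing at the wrong row.
    fn from_u64(index: u64) -> Self {
        assert!(
            index <= u64::from(u32::MAX),
            "row index {index} does not fit in u32"
        );
        Row(index as u32)
    }

    /// Fallible variant for callers that want to handle the error themselves.
    fn try_from_u64(index: u64) -> Option<Self> {
        u32::try_from(index).ok().map(Row)
    }
}
```

An index just past `u32::MAX` wraps around to a small, valid-looking row in the truncating version, which is precisely the kind of silent failure the asserts are there to rule out.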

Events Live Longer #

Events are a useful tool for passing data into systems and between systems.

Internally, Bevy events are double-buffered, so a given event will be silently dropped once the buffers have swapped twice. The Events<T> resource is set up this way so events are dropped after a predictable amount of time, preventing their queues from growing forever and causing a memory leak.
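A double-buffered queue of this kind can be modelled in a few lines. The `Events` type below is a hypothetical, simplified stand-in written for this illustration, not Bevy's `Events<T>`; it only shows why an event survives one buffer swap and disappears on the second.

```rust
/// Minimal double-buffered event queue.
struct Events<T> {
    older: Vec<T>,
    newer: Vec<T>,
}

impl<T> Events<T> {
    fn new() -> Self {
        Events { older: Vec::new(), newer: Vec::new() }
    }

    /// New events always go into the "newer" buffer.
    fn send(&mut self, event: T) {
        self.newer.push(event);
    }

    /// Swap step: "newer" becomes "older", and whatever was in "older"
    /// is dropped.
    fn update(&mut self) {
        self.older = std::mem::take(&mut self.newer);
    }

    /// Readers see both buffers, oldest events first.
    fn iter(&self) -> impl Iterator<Item = &T> {
        self.older.iter().chain(self.newer.iter())
    }

    fn len(&self) -> usize {
        self.older.len() + self.newer.len()
    }
}
```

How often `update` is called therefore determines how long readers have to observe an event, which is why the swap cadence matters so much for systems that do not run every frame.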

Before 0.12.1, event queues were swapped every update (i.e. every frame). That was an issue for games with logic in FixedUpdate since it meant events would normally disappear before systems in the next FixedUpdate could read them.

Bevy 0.12.1 changed the swap cadence to "every update that runs FixedUpdate one or more times" (only if the TimePlugin is installed). This change did resolve the original problem, but it then caused problems in the other direction. Users were surprised to learn some of their systems with run_if conditions would iterate much older events than expected. (In hindsight, we should have considered it a breaking change and postponed it until this release.) The change also introduced a bug (fixed in this release) where only one type of event was being dropped.

One proposed future solution to this lingering but unintended coupling between Update and FixedUpdate is to use event timestamps to change the default range of events visible by EventReader<T>. That way systems in Update would skip any events older than a frame while systems in FixedUpdate could still see them.

For now, the <=0.12.0 behavior can be recovered by simply removing the EventUpdateSignal resource.

fn main() {

let mut app = App::new();

app.add_plugins(DefaultPlugins);

/* ... */

// If this resource is absent, events are dropped the same way as <=0.12.0.

app.world.remove_resource::<EventUpdateSignal>();

/* ... */

app.run();

}

What's Next? #

We have plenty of work in progress! Some of this will likely land in Bevy 0.14.

Check out the Bevy 0.14 Milestone for an up-to-date list of current work that contributors are focusing on for Bevy 0.14.

More Editor Experimentation #

Led by the brilliant JMS55, we've opened up a free-form playground to define and answer key questions about the design of the bevy_editor: not through discussion, but through concrete prototyping. Should we use an in-process editor (less robust to game crashes) or an external one (more complex)? Should we ship an editor binary (great for non-programmers) or embed it in the game itself (very hackable)? Let's find out by doing!

There are some incredible mockups, functional prototypes and third-party editor-adjacent projects out there. Some highlights:

- A Bevy-branded editor UI mockup by @!!&Amy on Discord, imagining what the UX for an ECS-based editor could look like

- bevy_animation_graph: a fully-functional asset-driven animation graph crate with its own node-based editor for Bevy

- space_editor: a polished Bevy-native third-party scene editor that you can use today!

- Blender_bevy_components_workflow: an impressively functional ecosystem of tools that lets you use Blender as a seamless level and scene editor for your games today.

- @coreh's experiment on a reflection-powered remote protocol, coupled with an interactive web-based editor, allows devs to inspect and control their Bevy games from other processes, languages and even devices! Try it out live!

It's really exciting to see this progress, and we're keen to channel that energy and experience into official first-party efforts.

bevy_dev_tools #

The secret to smooth game development is great tooling. It's time to give Bevy developers the tools they need to inspect, debug and profile their games as part of the first-party experience. From FPS meters to system stepping to a first-party equivalent of the fantastic bevy-inspector-egui: giving these a home in Bevy itself helps us polish them, points new users in the right direction, and allows us to use them in the bevy_editor itself.

A New Scene Format #

Scenes are Bevy's general-purpose answer to serializing ECS data to disk: tracking entities, components, and resources for both saving games and loading premade levels. However, the existing .ron-based scene format is hard to hand-author, overly verbose, and brittle; changes to your code (or that of your dependencies!) rapidly invalidate saved scenes. Cart has been cooking up a revised scene format with tight IDE and code integration that tackles these problems and makes authoring content (including UI!) in Bevy a joy, whether you're writing code, writing scene files, or generating them from a GUI.

bevy_ui Improvements #

bevy_ui has its fair share of problems and limitations, both mundane and architectural; however, there are tangible things we can do, and are doing, to improve this: an improved scene format offers an end to the boilerplate when defining layouts, rounded corners just need a little love from reviewers, and the powerful and beloved object picking from bevy_mod_picking is slated to be upstreamed for both UI and gameplay alike. A spectacular array of third-party UI solutions exists today, and learning from those and committing to a core architecture for UI logic and reactivity is a top priority.

Meshlet Rendering #

Meshlet rendering splits meshes into clusters of triangles called meshlets, which brings many efficiency gains. During the 0.13 development cycle, we made a lot of progress on this feature. We implemented a GPU-driven meshlet renderer that can scale to much more triangle-dense scenes, with a much lower CPU load. Memory usage, however, is very high, and we haven't implemented LODs or compression yet. Instead of releasing it half-baked, we're going to continue to iterate, and are very excited to (hopefully) bring you this feature in a future release.
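To make the idea of "clusters of triangles" concrete, here is a minimal sketch that splits a triangle-list index buffer into fixed-size groups. It is not Bevy's meshlet implementation: the `Meshlet` struct, the `build_meshlets` function, and the naive "chunk in index-buffer order" strategy are all assumptions chosen for illustration. A real clusterizer would typically group triangles by spatial locality and vertex reuse rather than by their order in the buffer.

```rust
/// A meshlet: a small cluster of triangles, stored as a range into the
/// mesh's index buffer. (Hypothetical type, for illustration only.)
#[derive(Debug, Clone, PartialEq)]
struct Meshlet {
    /// Offset of the first index belonging to this meshlet.
    first_index: usize,
    /// Number of triangles in this meshlet.
    triangle_count: usize,
}

/// Splits a triangle-list index buffer into meshlets of at most
/// `max_triangles` triangles each, walking the buffer in order.
/// (Hypothetical helper; naive chunking, no spatial clustering.)
fn build_meshlets(indices: &[u32], max_triangles: usize) -> Vec<Meshlet> {
    assert!(max_triangles > 0, "max_triangles must be non-zero");
    assert!(indices.len() % 3 == 0, "expected a triangle list");

    let triangle_total = indices.len() / 3;
    let mut meshlets = Vec::new();
    let mut first_triangle = 0;

    while first_triangle < triangle_total {
        // The last meshlet may hold fewer triangles than the maximum.
        let triangle_count = max_triangles.min(triangle_total - first_triangle);
        meshlets.push(Meshlet {
            first_index: first_triangle * 3,
            triangle_count,
        });
        first_triangle += triangle_count;
    }

    meshlets
}

fn main() {
    // A triangle list with 5 triangles (15 indices).
    let indices: Vec<u32> = (0..15).collect();

    // Cluster into meshlets of at most 2 triangles each.
    for meshlet in build_meshlets(&indices, 2) {
        println!("{meshlet:?}");
    }
}
```

Because each cluster is just a range of indices, a renderer can treat it as an independent unit of work, for example when culling. That independence is the general property meshlet-style renderers rely on.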

The Steady March Towards Relations #

Entity-entity relations, the ability to track and manage connections between entities directly in the ECS, have been one of the most requested ECS features for years now. Following the trail blazed by flecs, the mad scientists over in #ecs-dev are steadily reshaping our internals, experimenting with external implementations, and shipping the general purpose building blocks (like dynamic queries or lifecycle hooks) needed to build a fast, robust and ergonomic solution.

Support Bevy #

Sponsorships help make our work on Bevy sustainable. If you believe in Bevy's mission, consider sponsoring us ... every bit helps!

Contributors #

Bevy is made by a large group of people. A huge thanks to the 198 contributors that made this release (and associated docs) possible! In random order:

- @ickk

- @orph3usLyre

- @tygyh

- @nicopap

- @NiseVoid

- @pcwalton

- @homersimpsons

- @Henriquelay

- @Vrixyz

- @GuillaumeGomez

- @porkbrain

- @Leinnan

- @IceSentry

- @superdump

- @solis-lumine-vorago

- @garychia

- @tbillington

- @Nilirad

- @JMS55

- @kirusfg

- @KirmesBude

- @maueroats

- @mamekoro

- @NiklasEi

- @SIGSTACKFAULT

- @Olle-Lukowski

- @bushrat011899

- @cbournhonesque-sc

- @daxpedda

- @Testare

- @johnbchron

- @BlackPhlox

- @MrGVSV

- @Kanabenki

- @SpecificProtagonist

- @rosefromthedead

- @thepackett

- @wgxer

- @mintlu8

- @AngelOnFira

- @ArthurBrussee

- @viridia

- @GabeeeM

- @Elabajaba

- @brianreavis

- @dmlary

- @akimakinai

- @VitalyAnkh

- @komadori

- @extrawurst

- @NoahShomette

- @valentinegb

- @coreh

- @kristoff3r

- @wackbyte

- @BD103

- @stepancheg

- @bogdiw

- @doup

- @janhohenheim

- @ekropotin

- @thmsgntz

- @alice-i-cecile

- @tychedelia

- @soqb

- @taizu-jin

- @kidrigger

- @fuchsnj

- @TimJentzsch

- @MinerSebas

- @RomainMazB

- @cBournhonesque

- @tripokey

- @cart

- @pablo-lua

- @cuppar

- @TheTacBanana

- @AxiomaticSemantics

- @rparrett

- @richardhozak

- @afonsolage

- @conways-glider

- @ItsDoot

- @MarkusTheOrt

- @DavJCosby

- @thebluefish

- @DGriffin91

- @Shatur

- @MiniaczQ

- @killercup

- @Ixentus

- @hecksmosis

- @nvdaz

- @james-j-obrien

- @seabassjh

- @lee-orr

- @Waridley

- @wainwrightmark

- @robtfm

- @asuratos

- @Ato2207

- @DasLixou

- @SludgePhD

- @torsteingrindvik

- @jakobhellermann

- @fantasyRqg

- @johanhelsing

- @re0312

- @ickshonpe

- @BorisBoutillier

- @lkolbly

- @Friz64

- @rodolphito

- @TheBlckbird

- @HeyZoos

- @nxsaken

- @UkoeHB

- @GitGhillie

- @ibotha

- @ManevilleF

- @andristarr

- @josfeenstra

- @maniwani

- @Trashtalk217

- @benfrankel

- @notverymoe

- @simbleau

- @aevyrie

- @Dig-Doug

- @IQuick143

- @shanecelis

- @mnmaita

- @Braymatter

- @LeshaInc

- @esensar

- @Adamkob12

- @Kees-van-Beilen

- @davidasberg

- @andriyDev

- @hankjordan

- @Jondolf

- @SET001

- @hxYuki

- @matiqo15

- @capt-glorypants

- @hymm

- @HugoPeters1024

- @RyanSpaker

- @bardt

- @tguichaoua

- @SkiFire13

- @st0rmbtw

- @Davier

- @mockersf

- @antoniacobaeus

- @ameknite

- @Pixelstormer

- @bonsairobo

- @matthew-gries

- @NthTensor

- @tjamaan

- @Architector4

- @JoJoJet

- @TrialDragon

- @Gadzev

- @eltociear

- @scottmcm

- @james7132

- @CorneliusCornbread

- @Aztro-dev

- @doonv

- @Malax

- @atornity

- @Bluefinger

- @kayhhh

- @irate-devil

- @AlexOkafor

- @kettle11

- @davidepaci

- @NathanSWard

- @nfagerlund

- @anarelion

- @laundmo

- @nelsontkq

- @jeliag

- @13ros27

- @Nathan-Fenner

- @softmoth

- @xNapha

- @asafigan

- @nothendev

- @SuperSamus

- @devnev

- @RobWalt

- @ThePuzzledDev

- @rafalh

- @dubrowgn

- @Aceeri

Full Changelog #

The changes mentioned above are only the most appealing, highest impact changes that we've made this cycle. Innumerable bug fixes, documentation changes and API usability tweaks made it in too. For a complete list of changes, check out the PRs listed below.

A-Rendering + A-Windowing #

- Allow prepare_windows to run off main thread.

- Allow prepare_windows to run off main thread on all platforms

- don't run create_surfaces system if not needed

- fix create_surfaces system ordering

A-Animation + A-Reflection #

A-Assets #

- Don't .unwrap() in AssetPath::try_parse

- feat: Debug implemented for AssetMode

- Remove rogue : from embedded_asset! docs

- use tree syntax to explain bevy_rock file structure

- Make AssetLoader/Saver Error type bounds compatible with anyhow::Error

- Fix untyped labeled asset loading

- Add load_untyped to LoadContext

- fix example custom_asset_reader on wasm

- ReadAssetBytesError::Io exposes failing path

- Added Method to Allow Pipelined Asset Loading

- Add missing asset load error logs for load_folder and load_untyped

- Fix wasm builds with file_watcher enabled

- Do not panic when failing to create assets folder (#10613)

- Use handles for queued scenes in SceneSpawner

- Fix file_watcher feature hanging indefinitely

- derive asset for enums

- Ensure consistency between Un/Typed AssetId and Handle

- Fix Asset Loading Bug

- remove double-hashing of typeid for handle

- AssetMetaMode

- Fix GLTF scene dependencies and make full scene renders predictable

- Print precise and correct watch warnings (and only when necessary)

- Allow removing and reloading assets with live handles

- Add GltfLoaderSettings

- Refactor process_handle_drop_internal() in bevy_asset

- fix base64 padding when loading a gltf file

- assets should be kept on CPU by default

- Don't auto create assets folder

- Use impl Into<A> for Assets::add

- Add reserve_handle to Assets.

- Better error message on incorrect asset label

- GLTF extension support

- Fix embedded watcher to work with external crates

- Added AssetLoadFailedEvent, UntypedAssetLoadFailedEvent

- auto create imported asset folder if needed

- Fix minor typo

- Include asset path in get_meta_path panic message

- Fix documentation for AssetReader::is_directory function

- AssetSaver and AssetTransformer split

- AssetPath source parse fix

- Allow TextureAtlasBuilder in AssetLoader

- Add a getter for asset watching status on AssetServer

- Make SavedAsset::get_labeled accept &str as label

- Added Support for Extension-less Assets

- Fix embedded asset path manipulation

- Fix AssetTransformer breaking LabeledAssets

- Put asset_events behind a run condition

- Use Asset Path Extension for AssetLoader Disambiguation

A-Core + A-App #

A-Accessibility #

A-Transform #

A-ECS + A-Hierarchy #

A-Text #

- Rename TextAlignment to JustifyText.

- Subtract 1 from text positions to account for glyph texture padding.

A-Assets + A-UI #

A-Utils + A-Time #

A-Rendering + A-Assets #

- Fix shader import hot reloading on windows

- Unload render assets from RAM

- mipmap levels can be 0 and they should be interpreted as 1

A-Physics #

A-ECS + A-Editor + A-App + A-Diagnostics #

A-Reflection + A-Scenes #

A-Audio + A-Windowing #

A-Build-System + A-Meta #

A-ECS + A-Time #

- Wait until FixedUpdate can see events before dropping them

- Add First/Pre/Post/Last schedules to the Fixed timestep

- Add run conditions for executing a system after a delay

- Add paused run condition

A-Meta #

- Add "update screenshots" to release checklist

- Remove references to specific projects from the readme

- Fix broken link between files

- [doc] Fix typo in CONTRIBUTING.md

- Remove unused namespace declarations

- Add docs link to root Cargo.toml

- Migrate third party plugins guidelines to the book

- Run markdownlint

- Improve config_fast_builds.toml

- Use -Z threads=0 option in config_fast_builds.toml

- CONTRIBUTING.md: Mention splitting complex PRs

A-Time #

- docs: use read instead of deprecated iter

- Rename Time::<Fixed>::overstep_percentage() and Time::<Fixed>::overstep_percentage_f64()

- Rename Timer::{percent,percent_left} to Timer::{fraction,fraction_remaining}

- Document how to configure FixedUpdate

- Add discard_overstep function to Time<Fixed>

A-Assets + A-Reflection #

A-Diagnostics + A-Utils #

A-Windowing + A-App #

A-ECS + A-Scenes #

A-Hierarchy #

- bevy_hierarchy: add some docs

- Make bevy_app and reflect opt-out for bevy_hierarchy.

- Add bevy_hierarchy Crate and plugin documentation

- Rename "AddChild" to "PushChild"

- Inline trivial methods in bevy_hierarchy

A-ECS + A-App #

A-Transform + A-Math #

A-UI + A-Text #

A-Input #

- Make ButtonSettings.is_pressed/released public

- Rename Input to ButtonInput

- Add method to check if all inputs are pressed

- Add window entity to TouchInput events

- Extend Touches with clear and reset methods

- Add logical key data to KeyboardInput

- Derive Ord for GamepadButtonType.

- Add delta to CursorMoved event

A-Rendering + A-Diagnostics #

A-Rendering #

- Fix bevy_pbr shader function name

- Implement Clone for VisibilityBundle and SpatialBundle

- Reexport wgpu::Maintain

- Use a consistent scale factor and resolution in stress tests

- Ignore inactive cameras

- Add shader_material_2d example

- More inactive camera checks

- Fix post processing example to only run effect on camera with settings component

- Make sure added image assets are checked in camera_system

- Ensure ExtendedMaterial works with reflection (to enable bevy_egui_inspector integration)

- Explicit color conversion methods

- Re-export wgpu BufferAsyncError

- Improve shader_material example

- Non uniform transmission samples

- Explain how AmbientLight is inserted and configured

- Add wgpu_pass method to TrackedRenderPass

- Add a depth_bias to Material2d

- Use as_image_copy where possible

- impl From<Color> for ClearColorConfig

- Ensure instance_index push constant is always used in prepass.wgsl

- Bind group layout entries

- prepass vertex shader always outputs world position

- Swap material and mesh bind groups

- try_insert Aabbs

- Fix prepass binding issues causing crashes when not all prepass bindings are used

- Fix binding group in custom_material_2d.wgsl

- Normalize only nonzero normals for mikktspace normal maps

- light renderlayers

- Explain how RegularPolygon mesh is generated

- Fix Mesh2d normals on webgl

- Update to wgpu 0.18

- Fix typo in docs for ViewVisibility

- Add docs to bevy_sprite a little

- Fix BindingType import warning

- Update texture_atlas example with different padding and sampling

- Update AABB when Sprite component changes in calculate_bounds_2d()

- OrthographicProjection.scaling_mode is not just for resize

- Derive