Bevy 0.14

Posted on July 4, 2024 by Bevy Contributors

Thanks to 256 contributors, 993 pull requests, community reviewers, and our generous donors, we're happy to announce the Bevy 0.14 release on crates.io!

For those who don't know, Bevy is a refreshingly simple data-driven game engine built in Rust. You can check out our Quick Start Guide to try it today. It's free and open source forever! You can grab the full source code on GitHub. Check out Bevy Assets for a collection of community-developed plugins, games, and learning resources.

To update an existing Bevy App or Plugin to Bevy 0.14, check out our 0.13 to 0.14 Migration Guide.

Since our last release a few months ago, we've added a ton of new features, bug fixes, and quality-of-life tweaks, but here are some of the highlights:

- Virtual Geometry: Preprocess meshes into "meshlets", enabling efficient rendering of huge amounts of geometry

- Sharp Screen Space Reflections: Approximate real-time raymarched screen-space reflections

- Depth of Field: Cause objects at specific depths to go "out of focus", mimicking the behavior of physical lenses

- Per-Object Motion Blur: Blur objects moving fast relative to the camera

- Volumetric Fog / Lighting: Simulates fog in 3D space, enabling lights to produce beautiful "god rays"

- Filmic Color Grading: Fine tune tonemapping in your game with a complete set of filmic color grading tools

- PBR Anisotropy: Improve rendering of surfaces whose roughness varies along the tangent and bitangent directions of a mesh, such as brushed metal and hair

- Auto Exposure: Configure cameras to dynamically adjust their exposure based on what they are looking at

- PCF for Point Lights: Smooth out point light shadows, improving their quality

- Animation blending: Our new low-level animation graph adds support for animation blending, and sets the stage for first- and third-party graphical, asset-driven animation tools.

- ECS Hooks and Observers: Automatically (and immediately) respond to arbitrary events, such as component addition and removal

- Better colors: type-safe colors make it clear which color space you're operating in, and offer an awesome array of useful methods.

- Computed states and substates: Modeling complex app state is a breeze with these type-safe extensions to our States abstraction.

- Rounded corners: Rounding off one of bevy_ui's roughest edges, you can now procedurally set the corner radius on your UI elements.

For the first time, Bevy 0.14 was prepared using a release candidate process to help ensure that you can upgrade right away with peace of mind. We've worked closely with both plugin authors and ordinary users to catch critical bugs, round the rough corners off our new features, and refine the migration guide. As we prepared fixes, we shipped new release candidates on crates.io, letting core ecosystem crates update and listening closely for show-stopping problems. Thank you so much to everyone who helped out: these efforts are a vital step towards making Bevy something that teams large and small can trust to work reliably.

Virtual Geometry (Experimental)

- Authors: @JMS55, @atlv24, @zeux, @ricky26

- PR #10164

After several months of hard work, we're super excited to bring you the experimental release of a new virtual geometry feature!

This new rendering feature works much like Unreal Engine 5's Nanite renderer. You can take a very high-poly mesh, preprocess it to generate a MeshletMesh during build time, and then at runtime render huge amounts of geometry: much more than Bevy's standard renderer can support. No explicit LODs are needed; it's all automatic, and near seamless.

This feature is still a WIP, and comes with several constraints compared to Bevy's standard renderer, so be sure to read the docs and report any bugs you encounter. We still have a lot left to do, so look forward to more performance improvements (and associated breaking changes) in future releases!

Note that this feature does not use GPU "mesh shaders", so older GPUs are compatible for now. However, they are not recommended, and are likely to become unsupported in the near future.

In addition to the user guide below, check out:

Users wanting to use virtual geometry should enable the meshlet cargo feature to render meshlets at runtime, and the meshlet_processor cargo feature to preprocess meshes at build time into the special meshlet-specific format (MeshletMesh) that the meshlet renderer uses.

Enabling the meshlet feature unlocks a new module: bevy::pbr::experimental::meshlet.

First, add MeshletPlugin to your app:

```rust
app.add_plugins(MeshletPlugin);
```

Next, preprocess your Mesh into a MeshletMesh. Currently, this needs to be done manually via MeshletMesh::from_mesh() (again, you need the meshlet_processor feature enabled). This step is fairly slow, so it should be done once ahead of time and the result saved to an asset file. Note that there are limitations on the types of meshes and materials supported, so make sure to read the docs.
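MeshletMesh::from_mesh() handles the clustering for you. To give a feel for what "preprocessing into meshlets" involves, here is a deliberately naive sketch in plain Rust (not Bevy's code): it walks a triangle index buffer in order and starts a new cluster whenever a vertex or triangle budget would be exceeded. The `Meshlet` type, the `build_meshlets` function, and the limits are hypothetical; production meshlet builders also group triangles by spatial locality and compute culling bounds, which this sketch skips.

```rust
use std::collections::HashMap;

/// One cluster of triangles: a small local vertex list plus triangles that
/// index into it. This is the general shape of a "meshlet", not Bevy's type.
#[derive(Debug, Default, Clone, PartialEq)]
pub struct Meshlet {
    /// Indices into the original mesh's vertex buffer.
    pub vertices: Vec<u32>,
    /// Triangles as triples of *local* indices into `vertices`.
    pub triangles: Vec<[u8; 3]>,
}

/// Naive greedy clustering: walk the index buffer in order and close the
/// current meshlet whenever the next triangle would exceed either limit.
/// `max_vertices` must be in 3..=256 so local indices fit in a `u8`.
pub fn build_meshlets(indices: &[u32], max_vertices: usize, max_triangles: usize) -> Vec<Meshlet> {
    assert!((3..=256).contains(&max_vertices) && max_triangles >= 1);
    let mut meshlets = Vec::new();
    let mut current = Meshlet::default();
    // Global vertex index -> local slot in `current`.
    let mut local: HashMap<u32, u8> = HashMap::new();

    for tri in indices.chunks_exact(3) {
        // Conservative count of the vertices this triangle would add.
        let new_vertices = tri.iter().filter(|v| !local.contains_key(*v)).count();
        if current.vertices.len() + new_vertices > max_vertices
            || current.triangles.len() == max_triangles
        {
            // Budget exceeded: finish this meshlet and start a fresh one.
            meshlets.push(std::mem::take(&mut current));
            local.clear();
        }
        let mut local_tri = [0u8; 3];
        for (slot, &v) in local_tri.iter_mut().zip(tri) {
            // Reuse the vertex if this meshlet already has it, else append it.
            *slot = *local.entry(v).or_insert_with(|| {
                current.vertices.push(v);
                (current.vertices.len() - 1) as u8
            });
        }
        current.triangles.push(local_tri);
    }
    if !current.triangles.is_empty() {
        meshlets.push(current);
    }
    meshlets
}
```

Each meshlet stores small local indices (`u8` here) into its own vertex list, which is part of what keeps this kind of format compact.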

Automatic glTF/scene conversion via Bevy's asset preprocessing system is planned, but unfortunately did not make the cut in time for this release. For now, you'll have to come up with your own asset conversion and management system. If you come up with a good system, let us know!

Now, spawn your entities. In the same vein as MaterialMeshBundle, there's a MaterialMeshletMeshBundle, which uses a MeshletMesh instead of the typical Mesh.

```rust
commands.spawn(MaterialMeshletMeshBundle {
    meshlet_mesh: meshlet_mesh_handle.clone(),
    material: material_handle.clone(),
    transform,
    ..default()
});
```

Lastly, a note on materials. Meshlet entities use the same Material trait as regular mesh entities; however, the standard material methods are not used. Instead, there are 3 new methods: meshlet_mesh_fragment_shader, meshlet_mesh_prepass_fragment_shader, and meshlet_mesh_deferred_fragment_shader. Forward, forward with prepasses, and deferred rendering are all supported.

Notice however that there is no access to vertex shaders. Meshlet rendering uses a hardcoded vertex shader that cannot be changed.

The actual fragment shader code for meshlet materials is mostly the same as the fragment shader code for regular mesh entities. The key difference is that instead of this:

```wgsl
@fragment
fn fragment(vertex_output: VertexOutput) -> @location(0) vec4<f32> {
    // ...
}
```

You should use this:

```wgsl
#import bevy_pbr::meshlet_visibility_buffer_resolve::resolve_vertex_output

@fragment
fn fragment(@builtin(position) frag_coord: vec4<f32>) -> @location(0) vec4<f32> {
    let vertex_output = resolve_vertex_output(frag_coord);
    // ...
}
```

Sharp Screen-Space Reflections

- Authors: @pcwalton

- PR #13418


Screen-space reflections (SSR) approximate real-time reflections by raymarching through the depth buffer and copying samples from the final rendered frame. Our initial implementation is relatively minimal, to provide a flexible base to build on, though it is based on the production-quality raymarching code by Tomasz Stachowiak, one of the creators of the indie darling Bevy game Tiny Glade. Because the implementation is minimal, there are a few caveats to bear in mind:

- Currently, this feature is built on top of the deferred renderer and is only supported in that mode. Forward screen-space reflections are possible, albeit uncommon (though e.g. Doom Eternal uses them); however, they require tracing from the previous frame, which would add complexity. This patch leaves the door open to implementing SSR in the forward rendering path but doesn't itself include such an implementation.

- Screen-space reflections aren't supported in WebGL 2, because they require sampling from the depth buffer, which naga can't do because of a bug (sampler2DShadow is incorrectly generated instead of sampler2D; this is the same reason why depth of field is disabled on that platform).

- No temporal filtering or blurring is performed at all. For this reason, SSR currently only operates on very low-roughness / smooth surfaces.

- We don't perform acceleration via the hierarchical Z-buffer and reflections are traced at full resolution. As a result, you may notice performance issues depending on your scene and hardware.

To add screen-space reflections to a camera, insert the ScreenSpaceReflectionsSettings component. Along with ScreenSpaceReflectionsSettings, the DepthPrepass and DeferredPrepass components must also be present for the reflections to show up. Conveniently, the ScreenSpaceReflectionsBundle bundles these all up for you! While ScreenSpaceReflectionsSettings comes with sensible defaults, it also contains several settings that artists can tweak.
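To make the raymarching idea concrete, here is a simplified sketch in plain Rust, not Bevy's shader: step a reflected ray through screen space and stop at the first pixel where the ray has just passed behind the surface stored in the depth buffer. The `DepthBuffer` layout (linear view depth, larger = farther), the fixed per-step direction, and the `thickness` heuristic for rejecting hits far behind thin objects are all assumptions for illustration. In line with the hierarchical Z-buffer caveat, it marches at full resolution, one step at a time.

```rust
/// Hypothetical linear depth buffer: `depth[y * width + x]` is the view-space
/// distance from the camera (larger = farther away).
pub struct DepthBuffer {
    pub width: usize,
    pub height: usize,
    pub depth: Vec<f32>,
}

impl DepthBuffer {
    fn at(&self, x: usize, y: usize) -> f32 {
        self.depth[y * self.width + x]
    }
}

/// Marches a reflected ray through screen space. `origin` is (x, y, depth) of
/// the reflecting pixel and `dir` is the per-step change in (x, y, depth).
/// Returns the first pixel where the ray is behind the stored surface by at
/// most `thickness`: the pixel whose color would be copied into the reflection.
pub fn raymarch(
    buf: &DepthBuffer,
    origin: [f32; 3],
    dir: [f32; 3],
    max_steps: u32,
    thickness: f32,
) -> Option<(usize, usize)> {
    let [mut x, mut y, mut z] = origin;
    for _ in 0..max_steps {
        x += dir[0];
        y += dir[1];
        z += dir[2];
        // Once the ray leaves the screen there is no data left to reflect.
        if x < 0.0 || y < 0.0 || x >= buf.width as f32 || y >= buf.height as f32 {
            return None;
        }
        let (px, py) = (x as usize, y as usize);
        let scene_depth = buf.at(px, py);
        // Hit: the ray is now behind the visible surface, but not too far behind.
        if z >= scene_depth && z - scene_depth <= thickness {
            return Some((px, py));
        }
    }
    None
}
```

A `None` result means the ray left the screen or ran out of steps, which is the classic failure case of any screen-space technique.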

Volumetric Fog and Volumetric Lighting (light shafts / god rays)

- Authors: @pcwalton

- PR #13057

Not all fog is created equal. Bevy's existing implementation covers distance fog, which is fast, simple, and not particularly realistic.

In Bevy 0.14, this is supplemented with volumetric fog, based on volumetric lighting, which simulates fog using actual 3D space, rather than simply distance from the camera. As you might expect, this is both prettier and more computationally expensive!

In particular, this allows for the creation of stunningly beautiful "god rays" (more properly, crepuscular rays) shining through the fog.


Bevy's algorithm, which is implemented as a postprocessing effect, is a combination of the techniques described in Scratchapixel and Alexandre Pestana's blog post. It uses raymarching (ported to WGSL by h3r2tic) in screen space, transformed into shadow map space for sampling and combined with physically-based modeling of absorption and scattering. Bevy employs the widely-used Henyey-Greenstein phase function to model asymmetry; this essentially allows light shafts to fade into and out of existence as the user views them.
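The Henyey-Greenstein phase function has a compact closed form, p(theta) = (1 - g^2) / (4*pi*(1 + g^2 - 2*g*cos(theta))^(3/2)), where the asymmetry parameter g > 0 favors forward scattering and g = 0 scatters light equally in all directions. A plain-Rust sketch of it, plus the Beer-Lambert transmittance that models absorption along a ray, might look like this; the function names are ours, and Bevy's WGSL may organize the math differently.

```rust
use std::f32::consts::PI;

/// Henyey-Greenstein phase function: the fraction of light (per steradian)
/// scattered toward a direction at angle theta from the incoming light,
/// given `cos_theta`. `g` in (-1, 1) sets the asymmetry.
pub fn henyey_greenstein(cos_theta: f32, g: f32) -> f32 {
    let g2 = g * g;
    let denom = 1.0 + g2 - 2.0 * g * cos_theta;
    // denom^(3/2) written as denom * sqrt(denom).
    (1.0 - g2) / (4.0 * PI * denom * denom.sqrt())
}

/// Beer-Lambert transmittance: the fraction of light that survives travelling
/// `distance` through a medium with extinction coefficient `sigma`
/// (absorption plus out-scattering).
pub fn transmittance(sigma: f32, distance: f32) -> f32 {
    (-sigma * distance).exp()
}
```

In a raymarcher, each step would add incoming light times the phase function times the scattering coefficient, attenuated by the transmittance back toward the camera.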

To add volumetric fog to a scene, add VolumetricFogSettings to the camera, and add VolumetricLight to directional lights that you wish to be volumetric. VolumetricFogSettings has numerous settings that allow you to define the accuracy of the simulation, as well as the look of the fog. Currently, only interaction with directional lights that have shadow maps is supported. Note that the overhead of the effect scales directly with the number of directional lights in use, so apply VolumetricLight sparingly for the best results.

Try it hands on with our volumetric_fog example.

Per-Object Motion Blur

- Authors: @aevyrie, @torsteingrindvik

- PR #9924

We've added a post-processing effect that blurs fast-moving objects in the direction of motion. Our implementation uses motion vectors, which means it works with Bevy's built-in PBR materials, skinned meshes, or anything else that writes motion vectors and depth. The effect is used to convey high-speed motion, which can otherwise look like flickering or teleporting when the image is perfectly sharp.

Blur scales with the motion of objects relative to the camera. If the camera is tracking a fast-moving object, like a vehicle, the vehicle will remain sharp, while stationary objects will be blurred. Conversely, if the camera is pointing at a stationary object, and a fast-moving vehicle moves through the frame, only the fast-moving object will be blurred.

The implementation is configured with camera shutter angle, which corresponds to how long the virtual shutter is open during a frame. In practice, this means the effect scales with framerate, so users running at high refresh rates aren't subjected to over-blurring.
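The shutter-angle arithmetic is easy to check by hand. In this hypothetical helper (not a Bevy API), the virtual shutter is open for `shutter_angle / 360` of each frame, so the streak length is the distance an object travels during that slice of the frame. Doubling the framerate halves the per-frame motion and therefore the blur.

```rust
/// Length in pixels of the blur streak for an object moving at
/// `speed_px_per_s` across the screen, when the shutter is open for
/// `shutter_angle_deg / 360` of each frame (360 = the whole frame).
pub fn blur_length_px(speed_px_per_s: f32, frames_per_s: f32, shutter_angle_deg: f32) -> f32 {
    let frame_time = 1.0 / frames_per_s;
    let exposure_time = frame_time * (shutter_angle_deg / 360.0);
    speed_px_per_s * exposure_time
}
```

For example, an object crossing the screen at 1200 px/s with a 180° shutter smears across about 10 px at 60 FPS, but only about 5 px at 120 FPS.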

You can enable motion blur by adding MotionBlurBundle to your camera entity, as shown in our motion blur example.

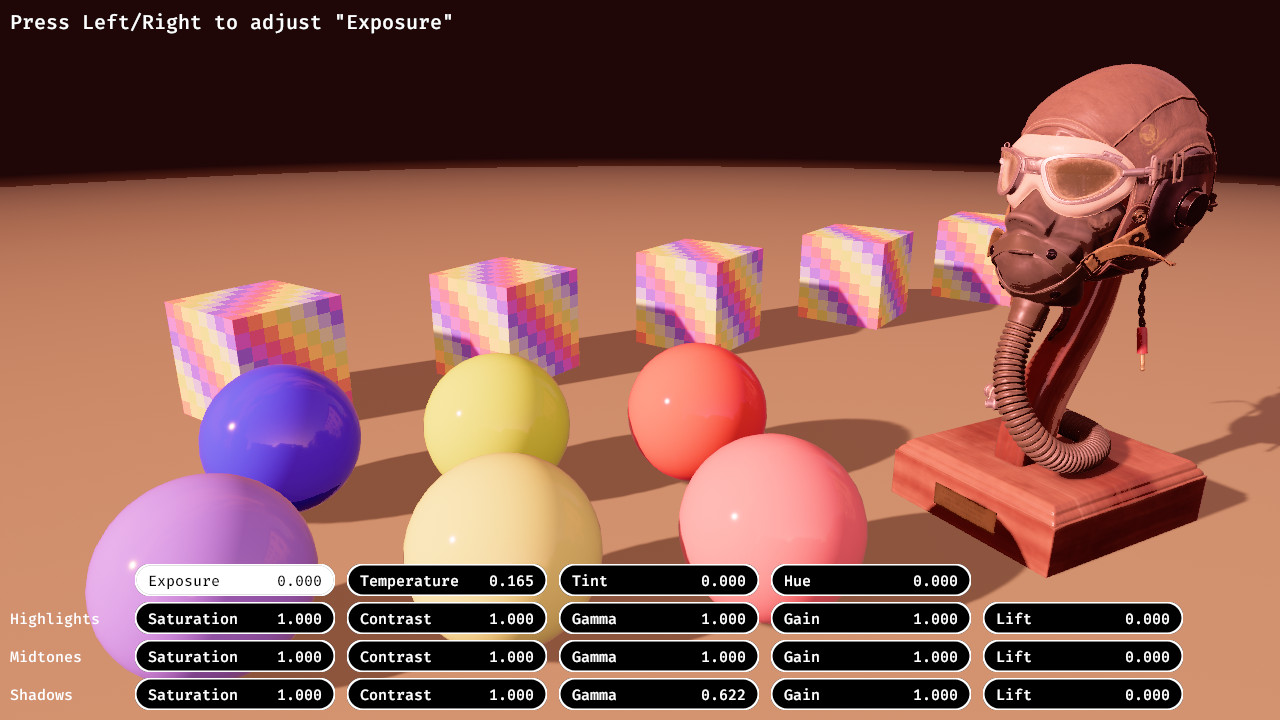

Filmic Color Grading

- Authors: @pcwalton

- PR #13121

Artists want to get exactly the right look for their game, and color plays a huge role.

To support this, Bevy's existing tonemapping tools have been extended to include a complete set of filmic color grading tools. In addition to a base tonemap, you can now configure:

- White point adjustment. This is inspired by Unity's implementation of the feature, but simplified and optimized. Temperature and tint control the adjustments to the x and y chromaticity values of CIE 1931. Following Unity, the adjustments are made relative to the D65 standard illuminant in the LMS color space.

- Hue rotation: converts the RGB value to HSV, alters the hue, and converts back.

- Color correction: allows the gamma, gain, and lift values to be adjusted according to the standard ASC CDL combined function. This can be done separately for shadows, midtones, and highlights. To avoid abrupt color changes, a small crossfade is used between the different sections of the image.
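The hue-rotation step described above can be sketched in plain Rust with the textbook RGB/HSV conversions. This illustrates the technique under that assumption; it is not Bevy's shader code, and the function names are hypothetical.

```rust
/// Converts RGB in [0, 1] to [hue in degrees, saturation, value].
pub fn rgb_to_hsv([r, g, b]: [f32; 3]) -> [f32; 3] {
    let max = r.max(g).max(b);
    let min = r.min(g).min(b);
    let delta = max - min;
    let hue = if delta == 0.0 {
        0.0 // Grey: hue is undefined, pick 0.
    } else if max == r {
        60.0 * ((g - b) / delta).rem_euclid(6.0)
    } else if max == g {
        60.0 * ((b - r) / delta + 2.0)
    } else {
        60.0 * ((r - g) / delta + 4.0)
    };
    let saturation = if max == 0.0 { 0.0 } else { delta / max };
    [hue, saturation, max]
}

/// Converts [hue in degrees, saturation, value] back to RGB.
pub fn hsv_to_rgb([h, s, v]: [f32; 3]) -> [f32; 3] {
    let c = v * s; // Chroma.
    let h6 = h.rem_euclid(360.0) / 60.0; // Which of the 6 hue sectors.
    let x = c * (1.0 - (h6.rem_euclid(2.0) - 1.0).abs());
    let (r, g, b) = match h6 as u32 {
        0 => (c, x, 0.0),
        1 => (x, c, 0.0),
        2 => (0.0, c, x),
        3 => (0.0, x, c),
        4 => (x, 0.0, c),
        _ => (c, 0.0, x),
    };
    let m = v - c;
    [r + m, g + m, b + m]
}

/// Hue rotation: RGB -> HSV, shift the hue, HSV -> RGB.
pub fn rotate_hue(rgb: [f32; 3], degrees: f32) -> [f32; 3] {
    let [h, s, v] = rgb_to_hsv(rgb);
    hsv_to_rgb([h + degrees, s, v])
}
```

Rotating pure red by 120° yields pure green, and by 240° pure blue; saturation and value are untouched.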

We've followed Blender's implementation as closely as possible to ensure that what you see in your modelling software matches what you see in the game.

We've provided a new color_grading example, with a shiny GUI to change all the color grading settings. Perfect for copy-pasting into your own game's dev tools and playing with the settings! Note that these settings can all be changed at runtime, giving artists control over the exact mood of the scene and letting you shift it dynamically based on weather or time of day.

Auto Exposure

- Authors: @Kurble, @alice-i-cecile

- PR #12792

Since Bevy 0.13, you can configure the EV100 of a camera, which allows you to adjust the exposure of the camera in a physically based way. This also allows you to dynamically change the exposure values for various effects. However, this is a manual process and requires you to adjust the exposure values yourself.

Bevy 0.14 introduces Auto Exposure, which automatically adjusts the exposure of your camera based on the brightness of the scene. This can be useful when you want to create the feeling of a very high dynamic range, since your eyes also adjust to large changes in brightness. Note that this is not a replacement for hand-tuning the exposure values, rather an additional tool that you can use to create dramatic effects when brightness changes rapidly. Check out this video recorded from the example to see it in action!

Bevy's Auto Exposure is implemented by making a histogram of the scene's brightness in a post processing step. The exposure is then adjusted based on the average of the histogram. Because the histogram is calculated using a compute shader, Auto Exposure is not available on WebGL. It's also not enabled by default, so you need to add the AutoExposurePlugin to your app.

Auto Exposure is controlled by the AutoExposureSettings component, which you can add to your camera entity. You can configure a few things:

- A relative range of F-stops that the exposure can change by.

- The speed at which the exposure changes.

- An optional metering mask, which allows you to, for example, give more weight to the center of the image.

- An optional histogram filter, which allows you to ignore very bright or very dark pixels.
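Bevy builds the histogram in a compute shader; the CPU-side sketch below only illustrates the idea under stated assumptions. It buckets log2 luminance into 64 bins over a fixed [-8, 8] range, averages only the pixels between the low and high filter fractions (the histogram filter), and moves the exposure toward that average by at most `speed * dt` stops per frame. The bin count, range, function names, and the convention that a brighter average means a higher EV are all hypothetical; the metering mask is omitted.

```rust
const BINS: usize = 64;
const MIN_LOG2: f32 = -8.0;
const MAX_LOG2: f32 = 8.0;

/// Histogram of log2 luminance, averaged over only the pixels whose rank lies
/// between `filter.0` and `filter.1` (fractions of the pixel count).
pub fn average_log_luminance(luminance: &[f32], filter: (f32, f32)) -> f32 {
    let mut histogram = [0u32; BINS];
    let bin_width = (MAX_LOG2 - MIN_LOG2) / BINS as f32;
    for &l in luminance {
        let log = l.max(1e-6).log2().clamp(MIN_LOG2, MAX_LOG2);
        let bin = (((log - MIN_LOG2) / bin_width) as usize).min(BINS - 1);
        histogram[bin] += 1;
    }
    let total = luminance.len() as f32;
    let (low, high) = (filter.0 * total, filter.1 * total);
    let (mut seen, mut sum, mut weight) = (0.0f32, 0.0f32, 0.0f32);
    for (i, &count) in histogram.iter().enumerate() {
        // Portion of this bin's pixels that falls inside [low, high].
        let count = count as f32;
        let start = seen.max(low);
        let end = (seen + count).min(high);
        seen += count;
        if end > start {
            let bin_center = MIN_LOG2 + (i as f32 + 0.5) * bin_width;
            sum += bin_center * (end - start);
            weight += end - start;
        }
    }
    if weight > 0.0 { sum / weight } else { 0.0 }
}

/// Moves `current_ev` toward the (range-clamped) target, limited to
/// `speed` stops per second so that adaptation looks gradual.
pub fn step_exposure(current_ev: f32, average_log2: f32, ev_range: (f32, f32), speed: f32, dt: f32) -> f32 {
    let target = average_log2.clamp(ev_range.0, ev_range.1);
    let max_step = speed * dt;
    current_ev + (target - current_ev).clamp(-max_step, max_step)
}
```

Filtering out the brightest tenth of the pixels is what keeps a few specular highlights or a visible sun from darkening the whole image.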

Fast Depth of Field

- Authors: @pcwalton, @alice-i-cecile, @Kurble

- PR #13009

In rendering, depth of field is an effect that mimics the limitations of physical lenses. By virtue of the way light works, lenses (like that of the human eye or a film camera) can only focus on objects that are within a specific range (depth) from them, causing all others to be blurry and out of focus.

Bevy now ships with this effect, implemented as a post-processing shader. There are two options available: a fast Gaussian blur or a more physically accurate hexagonal bokeh technique. The bokeh blur is generally more aesthetically pleasing than the Gaussian blur, as it simulates the effect of a camera more accurately. The shape of the bokeh circles is determined by the number of blades of the aperture. In our case, we use a hexagon, which is usually considered specific to lower-quality cameras.


The blur amount is generally specified by the f-number, which is combined with the focal length (computed from the film size and field of view) to determine the size of the aperture. By default, we simulate standard cinematic cameras with an f/1 f-number and a film size corresponding to the classic Super 35 film format. The developer can customize these values as desired.
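Under the standard thin-lens model, these quantities connect as follows. This plain-Rust sketch (function names hypothetical, not Bevy's implementation) computes the focal length from the sensor height and vertical field of view, then the diameter of the blur disc (the "circle of confusion") for an object at a given distance. Halving the f-number doubles the aperture diameter and therefore the blur.

```rust
/// Focal length (meters) for a sensor of height `sensor_height` (meters)
/// that covers a vertical field of view `fov_y` (radians).
pub fn focal_length(sensor_height: f32, fov_y: f32) -> f32 {
    0.5 * sensor_height / (0.5 * fov_y).tan()
}

/// Thin-lens circle of confusion (diameter on the sensor, meters) for an
/// object at `object_distance` when the lens is focused at `focus_distance`.
/// The aperture diameter is `focal_length / f_number`.
pub fn circle_of_confusion(focal_length: f32, f_number: f32, focus_distance: f32, object_distance: f32) -> f32 {
    let aperture = focal_length / f_number;
    aperture * focal_length * (object_distance - focus_distance).abs()
        / (object_distance * (focus_distance - focal_length))
}
```

Objects exactly at the focus distance get a zero-sized circle of confusion and stay sharp; the blur grows as they move away from that plane.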

To see how to use this new API, please check out the dedicated depth_of_field example.

PBR Anisotropy

- Authors: @pcwalton

- PR #13450

Anisotropic materials change based on the axis of motion, such as how wood behaves very differently when working with versus against the grain. But in the context of physically-based rendering, anisotropy refers specifically to a feature that allows roughness to vary along the tangent and bitangent directions of a mesh. In effect, this causes the specular light to stretch out into lines instead of a round lobe. This is useful for modeling brushed metal, hair, and similar surfaces. Support for anisotropy is a common feature in major game and graphics engines; Unity, Unreal, Godot, three.js, and Blender all support it to varying degrees.


Two new parameters have been added to StandardMaterial: anisotropy_strength and anisotropy_rotation. Anisotropy strength, which ranges from 0 to 1, represents how much the roughness differs between the tangent and the bitangent of the mesh. In effect, it controls how stretched the specular highlight is. Anisotropy rotation allows the roughness direction to differ from the tangent of the model.
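One common way to turn these two parameters into shading inputs is the formula in the KHR_materials_anisotropy specification, which stretches the GGX roughness along the anisotropy direction while leaving the perpendicular roughness unchanged. The sketch below follows that formula as we understand it; whether Bevy's shader uses exactly this mapping is an assumption, and the function names are hypothetical.

```rust
/// Splits GGX roughness into a tangent-direction value `at` and a
/// bitangent-direction value `ab`, assuming the KHR_materials_anisotropy
/// mapping: alpha = roughness^2, at = mix(alpha, 1, strength^2), ab = alpha.
pub fn anisotropic_alphas(perceptual_roughness: f32, strength: f32) -> (f32, f32) {
    let alpha = perceptual_roughness * perceptual_roughness;
    let s = strength.clamp(0.0, 1.0);
    // Linear interpolation from alpha toward 1.0 by strength squared.
    let at = alpha + (1.0 - alpha) * s * s;
    (at, alpha)
}

/// Tangent-space direction of the stretched highlight: the mesh tangent
/// rotated by `rotation` radians (counter-clockwise) around the normal.
pub fn anisotropy_direction(rotation: f32) -> [f32; 2] {
    [rotation.cos(), rotation.sin()]
}
```

With a strength of 0 both values are equal and the material behaves isotropically; at full strength the tangent roughness reaches 1, producing the stretched, streak-like highlight typical of brushed metal.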

In addition to these two fixed parameters, an anisotropy texture can be supplied, in the linear texture format specified by KHR_materials_anisotropy.

Like always, give it a spin at the corresponding anisotropy example.

Percentage-Closer Filtering (PCF) for Point Lights

- Authors: @pcwalton

- PR #12910

Percentage-closer filtering is a standard anti-aliasing technique used to get softer, less jagged shadows. To do so, we sample from the shadow map near the pixel of interest using a Gaussian kernel, averaging the results to reduce sudden transitions as we move in / out of the shadow.
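A minimal CPU sketch of the idea, assuming a single square 2D shadow map (point lights actually use cube maps) and a Gaussian kernel of configurable radius. The key detail is that each texel's depth comparison is made first and only the lit/shadowed results are averaged; blurring the depth values themselves would not produce soft shadows. `ShadowMap`, `pcf`, and the bias parameter are hypothetical, not Bevy APIs.

```rust
/// Hypothetical square shadow map: `depth[y * size + x]` is the distance from
/// the light to the nearest occluder seen through that texel.
pub struct ShadowMap {
    pub size: usize,
    pub depth: Vec<f32>,
}

/// Percentage-closer filtering over a (2r+1) x (2r+1) window around (x, y).
/// Returns the Gaussian-weighted fraction of samples that are lit
/// (1.0 = fully lit, 0.0 = fully shadowed).
pub fn pcf(map: &ShadowMap, x: usize, y: usize, receiver_depth: f32, radius: i32, sigma: f32, bias: f32) -> f32 {
    let (mut lit, mut total) = (0.0, 0.0);
    let last = map.size as i32 - 1;
    for dy in -radius..=radius {
        for dx in -radius..=radius {
            // Clamp to the map's edge, like a clamp-to-edge sampler.
            let sx = (x as i32 + dx).clamp(0, last) as usize;
            let sy = (y as i32 + dy).clamp(0, last) as usize;
            let weight = (-((dx * dx + dy * dy) as f32) / (2.0 * sigma * sigma)).exp();
            // Compare first, average afterwards: the essence of PCF.
            if receiver_depth - bias <= map.depth[sy * map.size + sx] {
                lit += weight;
            }
            total += weight;
        }
    }
    lit / total
}
```

Pixels well inside or outside the shadow return exactly 0 or 1; only pixels near the occluder's edge get fractional values, which is what softens the jagged boundary.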

As a result, Bevy's point lights now look softer and more natural, without any changes to end user code. As before, you can configure the exact strategy used to anti-alias your shadows by setting the ShadowFilteringMethod component on your 3D cameras.


Full support for percentage-closer shadows is in the works: testing and reviews for this are, like always, extremely welcome.

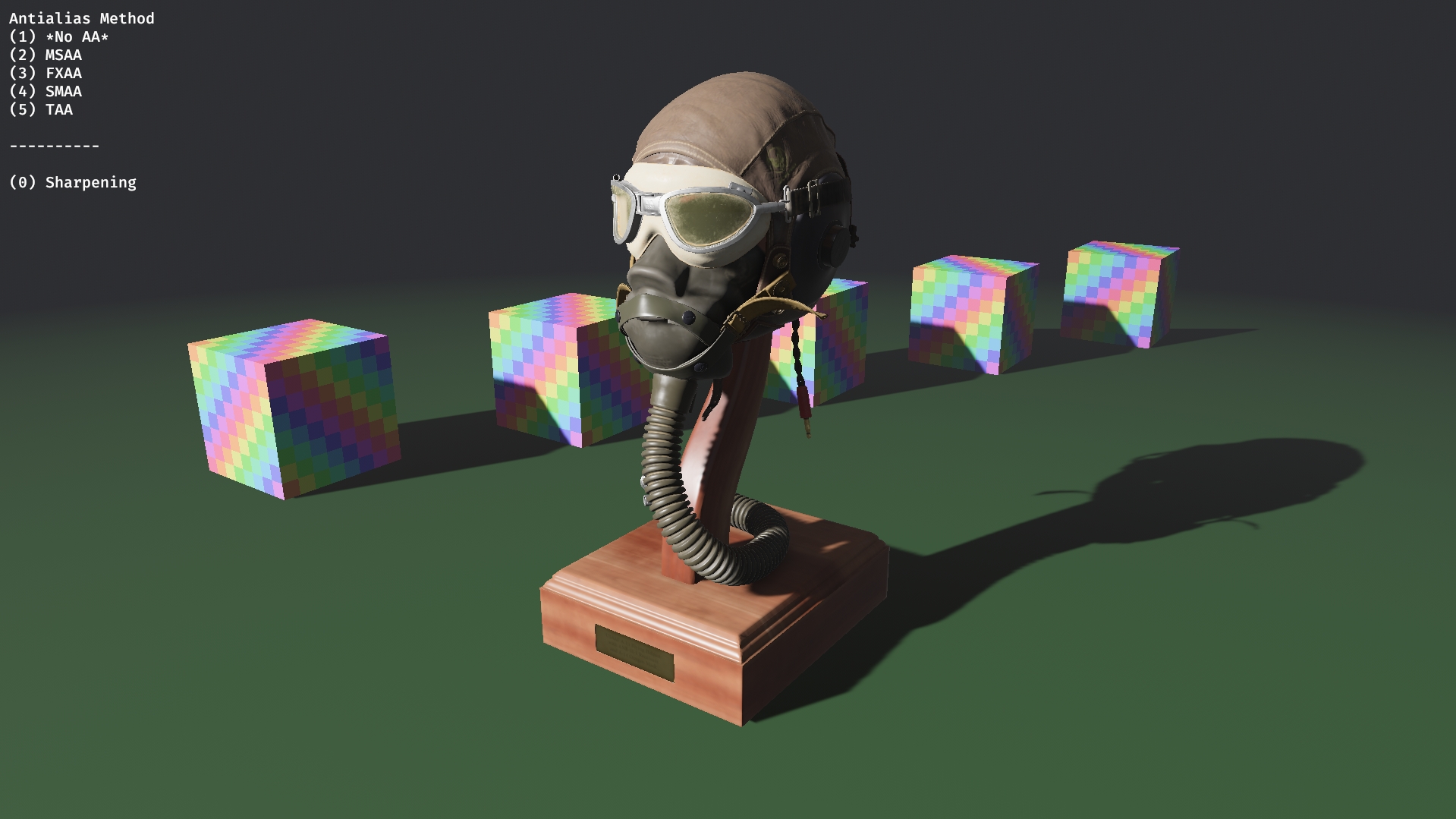

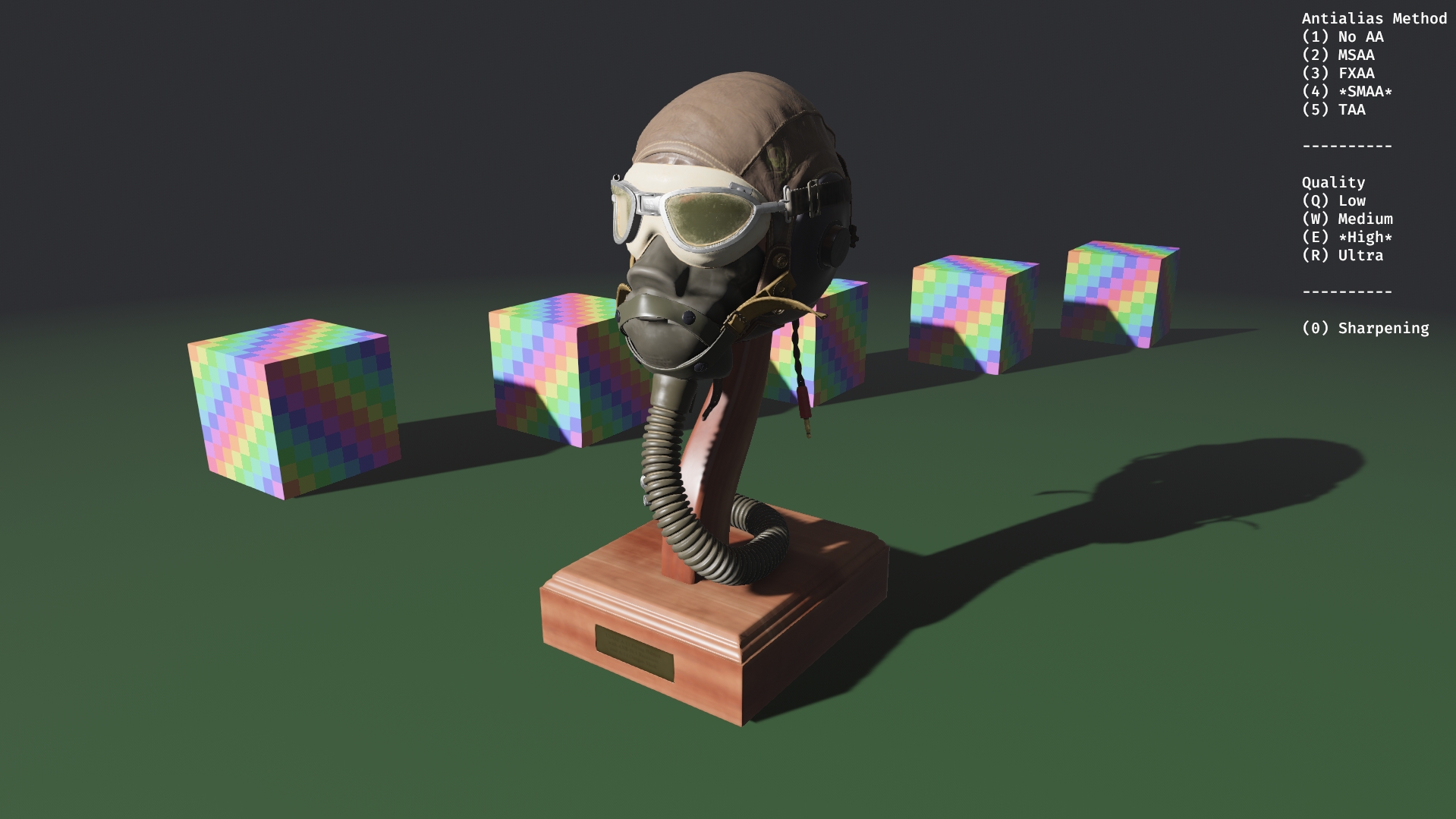

Subpixel Morphological Antialiasing (SMAA)

- Authors: @pcwalton, @alice-i-cecile

- PR #13423

Jagged edges are the bane of game developers' existence: a wide variety of anti-aliasing techniques have been invented and are still in use to fix them without degrading image quality. In addition to MSAA, FXAA, and TAA, Bevy now implements SMAA: subpixel morphological antialiasing.

SMAA is a 2011 antialiasing technique that detects borders in the image, then averages nearby border pixels, eliminating the dreaded jaggies. Despite its age, it's been a continual staple of games for over a decade. Four quality presets are available: low, medium, high, and ultra. Due to advancements in consumer hardware, Bevy's default is high.

You can see how it compares to no anti-aliasing in the pair of images below:


The best way to get a sense for the tradeoffs of the various anti-aliasing methods is to experiment with a test scene using the anti_aliasing example or by simply trying it out in your own game.

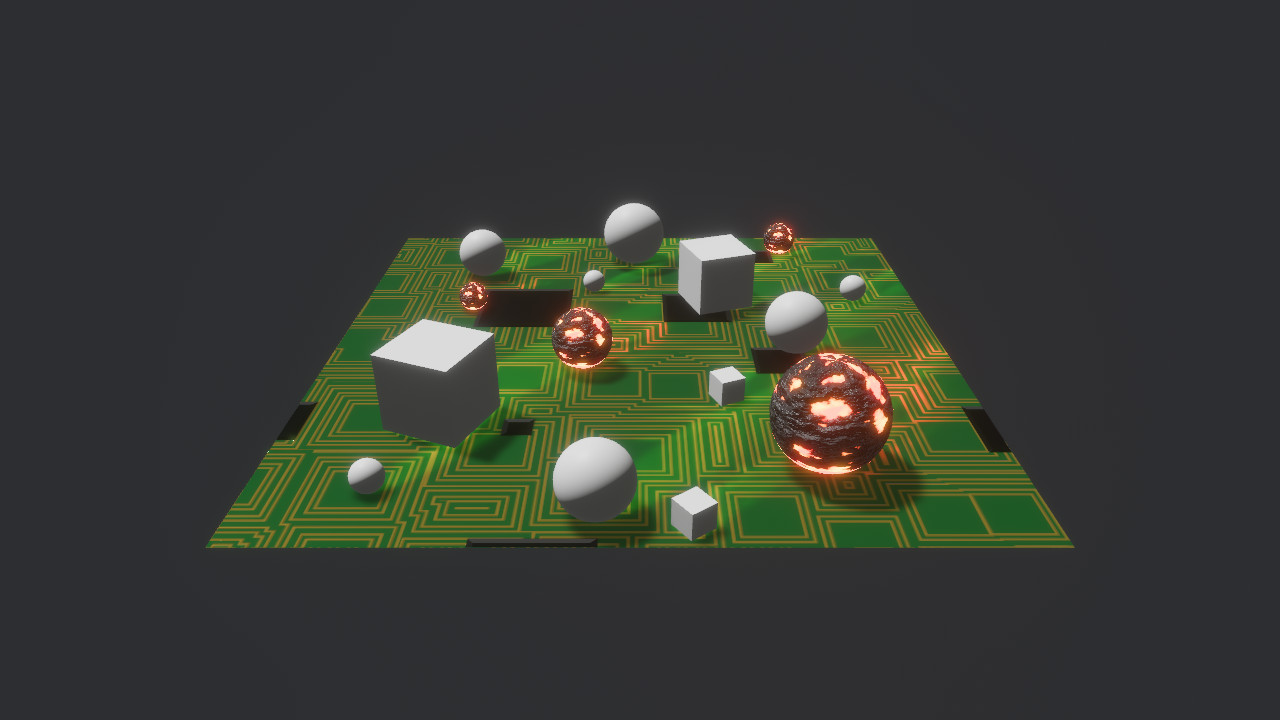

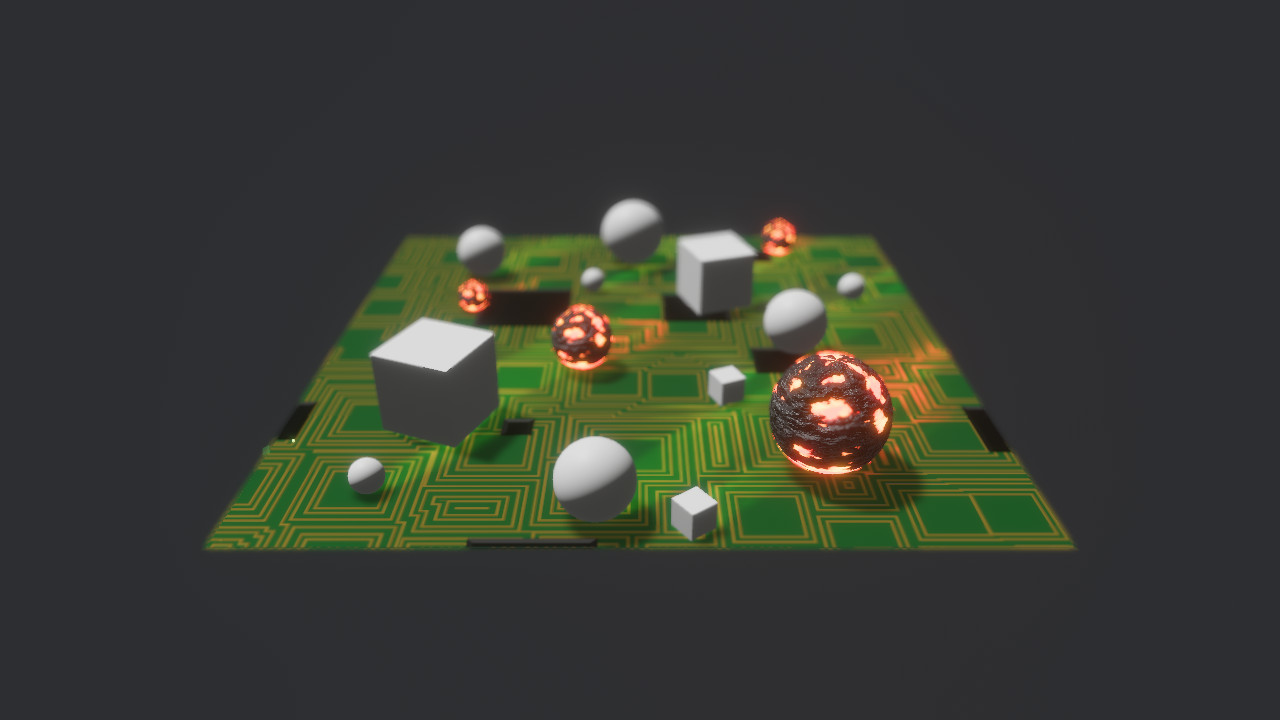

Visibility Ranges (hierarchical levels of detail / HLODs)

- Authors: @pcwalton, @cart

- PR #12916

When looking at objects far away, it's hard to make out the details! This obvious fact is just as true in rendering as it is in real life. As a result, using complex, high-fidelity models for distant objects is a waste: we can replace their meshes with simplified equivalents.

By automatically varying the level-of-detail (LOD) of our models in this way, we can render much larger scenes (or the same open world with a higher draw distance), swapping out meshes on the fly based on their proximity to the player. Bevy now supports one of the most foundational tools for this: visibility ranges (sometimes called hierarchical levels of detail, as it allows users to replace multiple meshes with a single object).

By setting the VisibilityRange component on your mesh entities, developers can automatically control the range from the camera at which their meshes will appear and disappear, automatically fading between the two options using dithering. Hiding meshes happens early in the rendering pipeline, so this feature can be efficiently used for level of detail optimization. As a bonus, this feature is properly evaluated per-view, so different views can show different levels of detail.
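Here is a sketch of the fade logic in plain Rust. The `FadeRange` struct is a hypothetical stand-in whose start and end margins are meant to echo the shape of VisibilityRange (treat the exact correspondence as an assumption): visibility ramps from 0 to 1 across the start margin and back to 0 across the end margin. The dithered cross-fade then draws a pixel only when its visibility exceeds a threshold taken from a 4x4 ordered-dither (Bayer) matrix, so a mesh at 50% visibility covers about half of its pixels instead of being alpha-blended.

```rust
use std::ops::Range;

/// Hypothetical stand-in: the mesh fades in across `start_margin` and fades
/// out across `end_margin` (distances from the camera).
pub struct FadeRange {
    pub start_margin: Range<f32>,
    pub end_margin: Range<f32>,
}

impl FadeRange {
    /// 0.0 = invisible, 1.0 = fully visible, linear in between.
    pub fn visibility(&self, distance: f32) -> f32 {
        let fade_in = ramp(distance, &self.start_margin);
        let fade_out = 1.0 - ramp(distance, &self.end_margin);
        fade_in.min(fade_out)
    }
}

/// 0 below `r.start`, 1 above `r.end`, linear in between.
fn ramp(x: f32, r: &Range<f32>) -> f32 {
    if r.end <= r.start {
        // Zero-width margin: a hard cut.
        return if x >= r.start { 1.0 } else { 0.0 };
    }
    ((x - r.start) / (r.end - r.start)).clamp(0.0, 1.0)
}

/// 4x4 Bayer matrix: each rank 0..16 appears exactly once.
const BAYER_4X4: [[f32; 4]; 4] = [
    [0.0, 8.0, 2.0, 10.0],
    [12.0, 4.0, 14.0, 6.0],
    [3.0, 11.0, 1.0, 9.0],
    [15.0, 7.0, 13.0, 5.0],
];

/// Dithered fade: draw the pixel only if visibility beats its threshold.
pub fn draw_pixel(visibility: f32, px: usize, py: usize) -> bool {
    let threshold = (BAYER_4X4[py % 4][px % 4] + 0.5) / 16.0;
    visibility > threshold
}
```

Because the threshold depends only on screen position, the pattern stays stable from frame to frame instead of flickering.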

Note that this feature differs from proper mesh LODs (where the geometry itself is simplified automatically), which will come later. While mesh LODs are useful for optimization and don't require any additional setup, they're less flexible than visibility ranges. Games often want to use objects other than meshes to replace distant models, such as octahedral or billboard imposters: implementing visibility ranges first gives users the flexibility to start implementing these solutions today.

You can see how this feature is used in the visibility_range example.

ECS Hooks and Observers

- Authors: @james-j-obrien, @cart

- PR #10839

As much as we love churning through homogeneous blocks of data in a tight loop here at Bevy, not every task is a perfect fit for the straightforward ECS model. Responding to changes and/or processing events are vital tasks in any application, and games are no exception.

Bevy already has a number of distinct tools to handle this:

- Buffered Events: Multiple-producer, multiple-consumer queues. Flexible and efficient, but requires regular polling as part of a schedule. Events are dropped after two frames.

- Change detection via Added and Changed: Enable writing queries that can respond to added or changed components. These queries linearly scan the change state of components that match the query to see if they have been added or changed.

- RemovedComponents: A special form of event that is triggered when a component is removed from an entity, or an entity with that component is despawned.

All of these (and systems themselves!) use a "pull"-style mechanism: events are sent regardless of whether or not anyone is listening, and listeners must periodically poll to ask if anything has changed. This is a useful pattern, and one we intend to keep around! By polling, we can process events in batch, getting more context and improving data locality (which makes the CPU go brr).

But it comes with some limitations:

- There is an unavoidable delay between an event being triggered and the response being processed

- Polling introduces a small (but non-zero) overhead every frame

This delay is the critical problem:

- Data (like indexes or hierarchies) can exist, even momentarily, in an invalid state

- We can't process arbitrary chains of events or recursive logic within a single cycle

To overcome these limitations, Bevy 0.14 introduces Component Lifecycle Hooks and Observers: two complementary "push"-style mechanisms inspired by the ever-wonderful flecs ECS.

Component Lifecycle Hooks

Component Hooks are functions (capable of interacting with the ECS World) registered for a specific component type (as part of the Component trait impl), which are run automatically in response to "component lifecycle events", such as when that component is added, overwritten, or removed.

For a given component type, only one hook can be registered for a given lifecycle event, and it cannot be overwritten.

Hooks exist to enforce invariants tied to that component (ex: maintaining indices or hierarchy correctness). Hooks cannot be removed and always take priority over observers: they run before any on-add / on-insert observers, but after any on-remove observers. As a result, they can be thought of as something closer to constructors & destructors, and are more suitable for maintaining critical safety or correctness invariants. Hooks are also somewhat faster than observers, as their reduced flexibility means that fewer lookups are involved.

Let's examine a simple example where we care about maintaining invariants: one entity (with a Target component) targeting another entity (with a Targetable component).

```rust
#[derive(Component)]
struct Target(Option<Entity>);

// `Component` is implemented by hand for `Targetable` (see below),
// so that we can register hooks for it
struct Targetable {
    targeted_by: Vec<Entity>,
}
```

We want to automatically clear the Target when the target entity is despawned: how do we do this?

If we were to use the pull-based approach (RemovedComponents in this case), there could be a delay between the entity being despawned and the Target component being updated. We can remove that delay with hooks!

Let's see what this looks like with a hook on Targetable:

```rust
use bevy::ecs::component::{ComponentHooks, StorageType};

// Rather than a derive, let's configure the hooks with a custom
// implementation of Component
impl Component for Targetable {
    const STORAGE_TYPE: StorageType = StorageType::Table;

    fn register_component_hooks(hooks: &mut ComponentHooks) {
        // Whenever this component is removed, or an entity with
        // this component is despawned...
        hooks.on_remove(|mut world, targeted_entity, _component_id| {
            // Grab (a copy of) the data that's about to be removed, so that
            // `world` can be borrowed mutably again below
            let targeted_by = world
                .get::<Targetable>(targeted_entity)
                .unwrap()
                .targeted_by
                .clone();
            for targeting_entity in targeted_by {
                // Track down the entity that's targeting us
                if let Some(mut targeting) = world.get_mut::<Target>(targeting_entity) {
                    // And clear its target, cleaning up any dangling references
                    targeting.0 = None;
                }
            }
        });
    }
}
```
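To see why running the cleanup synchronously matters, here is an engine-agnostic toy in plain Rust (none of these types are Bevy's): `despawn` invokes the registered hook before it returns, so no other code can ever observe a `Target` pointing at a despawned entity. One simplification: the toy hands the hook the removed `targeted_by` list, while Bevy's hook reads the component from the world just before it is removed.

```rust
use std::collections::HashMap;

type Entity = u32;

/// Signature of the toy on-remove hook for `Targetable`.
pub type RemoveHook = fn(&mut ToyWorld, Entity, Vec<Entity>);

/// A toy world (not Bevy's) storing the two components from the example.
#[derive(Default)]
pub struct ToyWorld {
    /// The `Target` component: who each entity is targeting.
    pub targets: HashMap<Entity, Option<Entity>>,
    /// The `Targetable` component: who is targeting each entity.
    pub targetables: HashMap<Entity, Vec<Entity>>,
    on_remove_targetable: Option<RemoveHook>,
}

impl ToyWorld {
    /// Registers the single on-remove hook for `Targetable`.
    pub fn set_on_remove_targetable(&mut self, hook: RemoveHook) {
        self.on_remove_targetable = Some(hook);
    }

    /// Despawning runs the hook immediately, before this call returns.
    pub fn despawn(&mut self, entity: Entity) {
        self.targets.remove(&entity);
        if let Some(targeted_by) = self.targetables.remove(&entity) {
            if let Some(hook) = self.on_remove_targetable {
                hook(self, entity, targeted_by);
            }
        }
    }
}

/// The hook from the example: clear every `Target` that pointed at the
/// despawned entity.
pub fn clear_dangling_targets(world: &mut ToyWorld, _despawned: Entity, targeted_by: Vec<Entity>) {
    for targeting in targeted_by {
        if let Some(target) = world.targets.get_mut(&targeting) {
            *target = None;
        }
    }
}
```

With the pull-based approach, the same cleanup would wait until some system next polled for removals, leaving a window in which `Target` is stale.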

Observers

Observers are on-demand systems that listen to "triggered" events. These events can be triggered for specific entities or they can be triggered "globally" (no entity target).

In contrast to hooks, observers are a flexible tool intended for higher level application logic. They can watch for when user-defined events are triggered.

```rust
#[derive(Event)]
struct Message {
    text: String,
}

world.observe(|trigger: Trigger<Message>| {
    println!("{}", trigger.event().text);
});
```

Observers are run immediately when an event they are watching for is triggered:

```rust
// All registered `Message` observers are immediately run here
world.trigger(Message { text: "Hello".to_string() });
```

If an event is triggered via a Command, the observers will run when the Command is flushed:

```rust
fn send_message(mut commands: Commands) {
    // This will trigger all `Message` observers when this system's commands are flushed
    commands.trigger(Message { text: "Hello".to_string() });
}
```

Events can also be triggered with an entity target:

```rust
#[derive(Event)]
struct Resize {
    size: usize,
}

commands.trigger_targets(Resize { size: 10 }, some_entity);
```

You can trigger an event for more than one entity at the same time:

```rust
commands.trigger_targets(Resize { size: 10 }, [e1, e2]);
```

A "global" observer will be executed when any target is triggered:

```rust
fn main() {
    App::new()
        .observe(on_resize)
        .run();
}

fn on_resize(trigger: Trigger<Resize>, mut query: Query<&mut Size>) {
    let mut size = query.get_mut(trigger.entity()).unwrap();
    size.value = trigger.event().size;
}
```

Notice that observers can use system parameters like Query, just like a normal system.

You can also add observers that only run for specific entities:

```rust
commands
    .spawn(Widget)
    .observe(|trigger: Trigger<Resize>| {
        println!("This specific widget entity was resized!");
    });
```

Observers are actually just entities with the Observer component. All of the observe() methods used above are shorthand for spawning a new observer entity. This is what a "global" observer entity looks like:

```rust
commands.spawn(Observer::new(|trigger: Trigger<Message>| {}));
```

Likewise, an observer watching a specific entity looks like this:

```rust
commands.spawn(
    Observer::new(|trigger: Trigger<Resize>| {})
        .with_entity(some_entity)
);
```

This API makes it easy to manage and clean up observers. It also enables advanced use cases, such as sharing observers across multiple targets!

Now that we know a bit about observers, let's examine the API through a simple gameplay-flavored example:

```rust
use bevy::prelude::*;

#[derive(Event)]
struct DealDamage {
    damage: u8,
}

#[derive(Event)]
struct LoseLife {
    life_lost: u8,
}

#[derive(Event)]
struct PlayerDeath;

#[derive(Component)]
struct Player;

#[derive(Component)]
struct Life(u8);

#[derive(Component)]
struct Defense(u8);

#[derive(Component, Deref, DerefMut)]
struct Damage(u8);

#[derive(Component)]
struct Monster;

fn main() {
    App::new()
        .add_systems(Startup, spawn_player)
        .add_systems(Update, attack_player)
        .observe(on_player_death)
        // Without `run()`, the app would be built but never started
        .run();
}

fn spawn_player(mut commands: Commands) {
    commands
        .spawn((Player, Life(10), Defense(2)))
        .observe(on_damage_taken)
        .observe(on_losing_life);
}

fn attack_player(
    mut commands: Commands,
    monster_query: Query<&Damage, With<Monster>>,
    player_query: Query<Entity, With<Player>>,
) {
    let player_entity = player_query.single();
    for damage in &monster_query {
        commands.trigger_targets(DealDamage { damage: damage.0 }, player_entity);
    }
}

fn on_damage_taken(
    trigger: Trigger<DealDamage>,
    mut commands: Commands,
    query: Query<&Defense>,
) {
    let defense = query.get(trigger.entity()).unwrap();
    let damage = trigger.event().damage;
    let life_lost = damage.saturating_sub(defense.0);
    // Observers can be chained into each other by sending more triggers using commands.
    // This is what makes observers so powerful ... this chain of events is evaluated
    // as a single transaction when the first event is triggered.
    commands.trigger_targets(LoseLife { life_lost }, trigger.entity());
}

fn on_losing_life(
    trigger: Trigger<LoseLife>,
    mut commands: Commands,
    mut life_query: Query<&mut Life>,
    player_query: Query<Entity, With<Player>>,
) {
    let mut life = life_query.get_mut(trigger.entity()).unwrap();
    let life_lost = trigger.event().life_lost;
    life.0 = life.0.saturating_sub(life_lost);

    if life.0 == 0 && player_query.contains(trigger.entity()) {
        commands.trigger(PlayerDeath);
    }
}

fn on_player_death(_trigger: Trigger<PlayerDeath>, mut app_exit: EventWriter<AppExit>) {
    println!("You died. Game over!");
    app_exit.send_default();
}
```
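The chaining behavior can be modelled outside Bevy with a queue: triggering an event runs every observer, and any events they emit are processed before the trigger call returns. The plain-Rust toy below mirrors the DealDamage → LoseLife → PlayerDeath chain; the `Event` enum, `Player` struct, and `trigger` function are hypothetical, and Bevy's real dispatch rules (observers per event type, entity targets, command flushing) are richer than this.

```rust
use std::collections::VecDeque;

/// Toy events mirroring the example above (not Bevy types).
#[derive(Debug, Clone, PartialEq)]
pub enum Event {
    DealDamage(u8),
    LoseLife(u8),
    PlayerDeath,
}

/// Toy player state that the observers read and modify.
pub struct Player {
    pub life: u8,
    pub defense: u8,
    pub dead: bool,
}

pub type Observer = fn(&Event, &mut Player, &mut VecDeque<Event>);

/// Runs every observer on `event`. Events pushed onto the queue by observers
/// are handled before this returns, so the whole chain resolves in one call.
pub fn trigger(observers: &[Observer], player: &mut Player, event: Event) {
    let mut queue = VecDeque::from([event]);
    while let Some(event) = queue.pop_front() {
        for observer in observers {
            observer(&event, player, &mut queue);
        }
    }
}

pub fn on_damage_taken(event: &Event, player: &mut Player, queue: &mut VecDeque<Event>) {
    if let Event::DealDamage(damage) = *event {
        // Chain into the next event instead of changing life directly.
        queue.push_back(Event::LoseLife(damage.saturating_sub(player.defense)));
    }
}

pub fn on_losing_life(event: &Event, player: &mut Player, queue: &mut VecDeque<Event>) {
    if let Event::LoseLife(lost) = *event {
        player.life = player.life.saturating_sub(lost);
        if player.life == 0 {
            queue.push_back(Event::PlayerDeath);
        }
    }
}

pub fn on_player_death(event: &Event, player: &mut Player, _queue: &mut VecDeque<Event>) {
    if matches!(event, Event::PlayerDeath) {
        player.dead = true;
    }
}
```

After a single `trigger` call that deals lethal damage, the player is already marked dead: there is no frame in which life is 0 but the death has not been handled.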

In the future, we intend to use hooks and observers to replace RemovedComponents, make our hierarchy management more robust, create a first-party replacement for bevy_eventlistener as part of our UI work, and build out relations. These are powerful, general-purpose tools: we can't wait to see the mad science the community cooks up with them!

When you're ready to get started, check out the component hooks and observers examples for more API details.

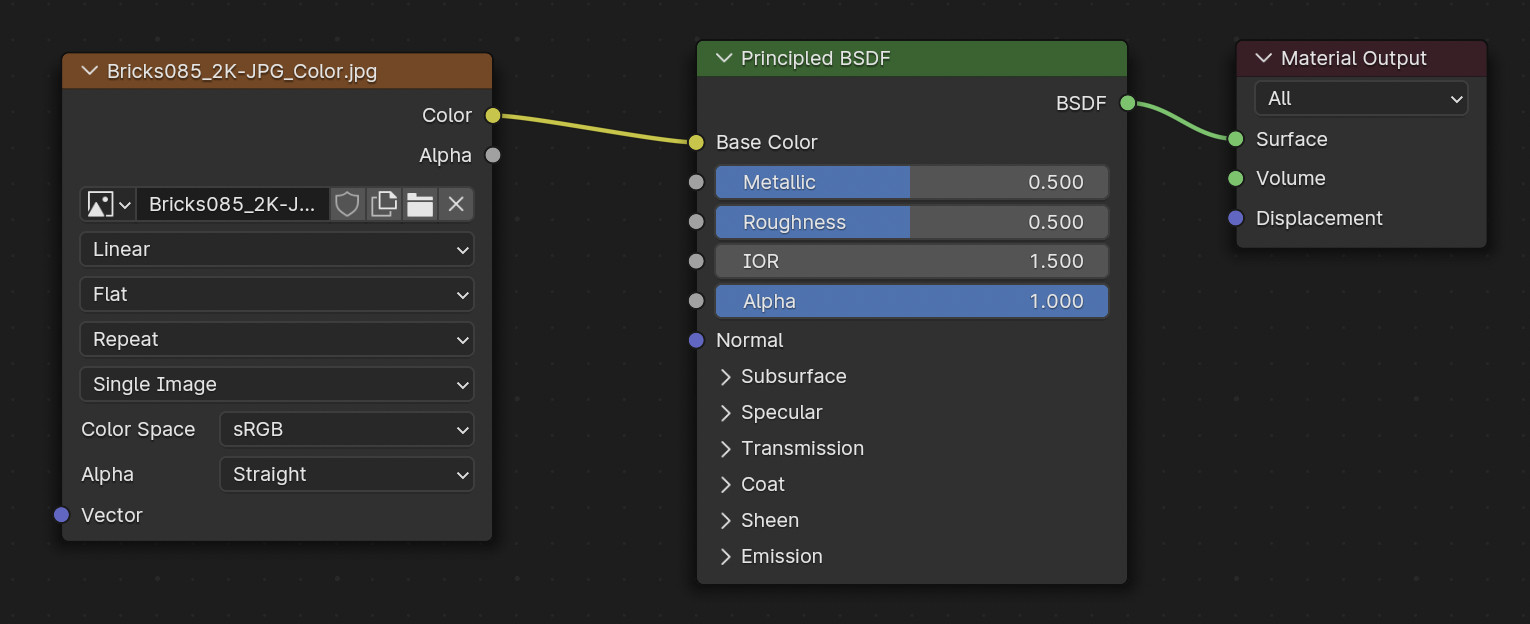

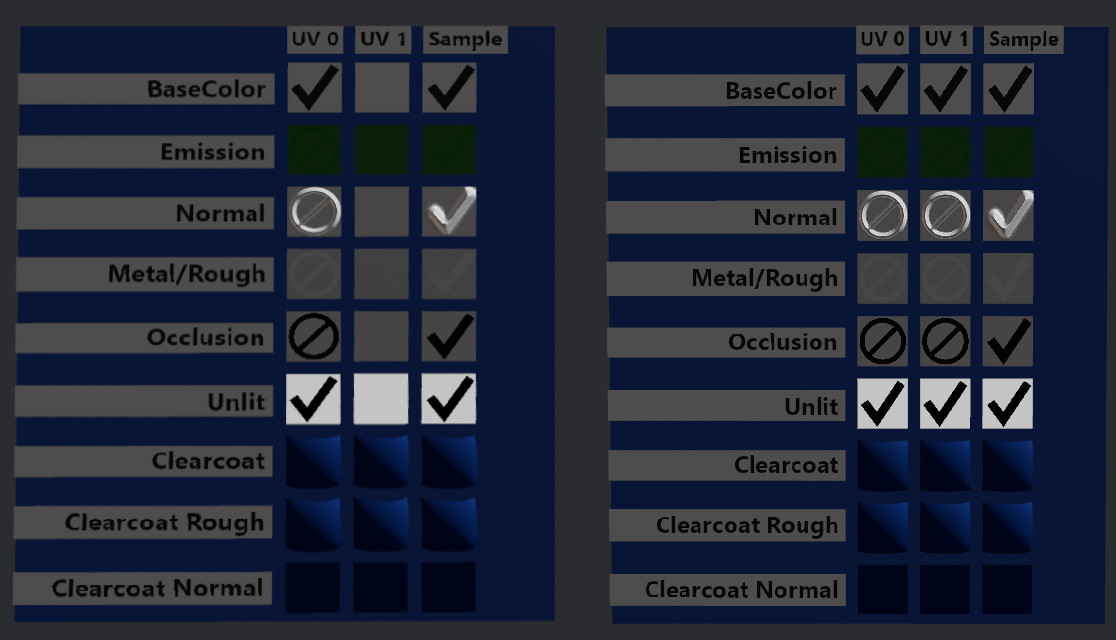

glTF KHR_texture_transform Support

- Authors: @janhohenheim, @yrns, @Kanabenki

- PR #11904

The glTF extension KHR_texture_transform is used to transform a texture before applying it. By reading this extension, Bevy can now support a variety of new workflows. The one we want to highlight here is the ability to easily repeat textures a set number of times. This is useful for creating textures that are meant to be tiled across a surface. We will show how to do this using Blender, but the same principles apply to any 3D modeling software.

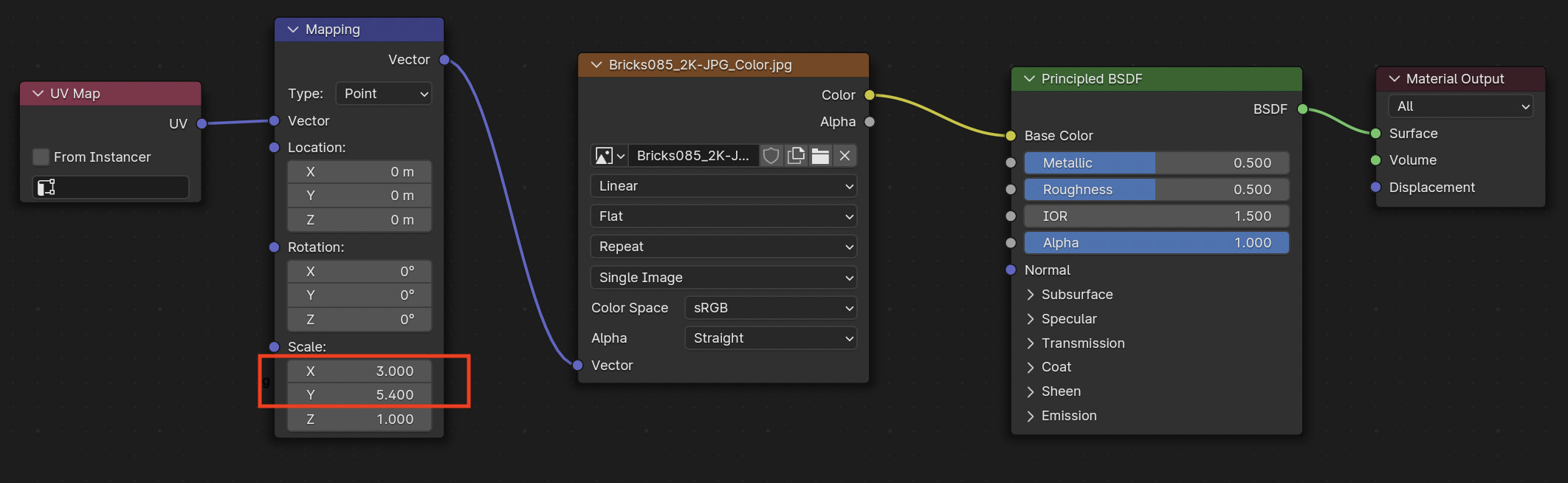

Let's look at an example scene that we've prepared in Blender, exported as a glTF file and loaded into Bevy. We will first use the most basic shader node setup available in Blender:

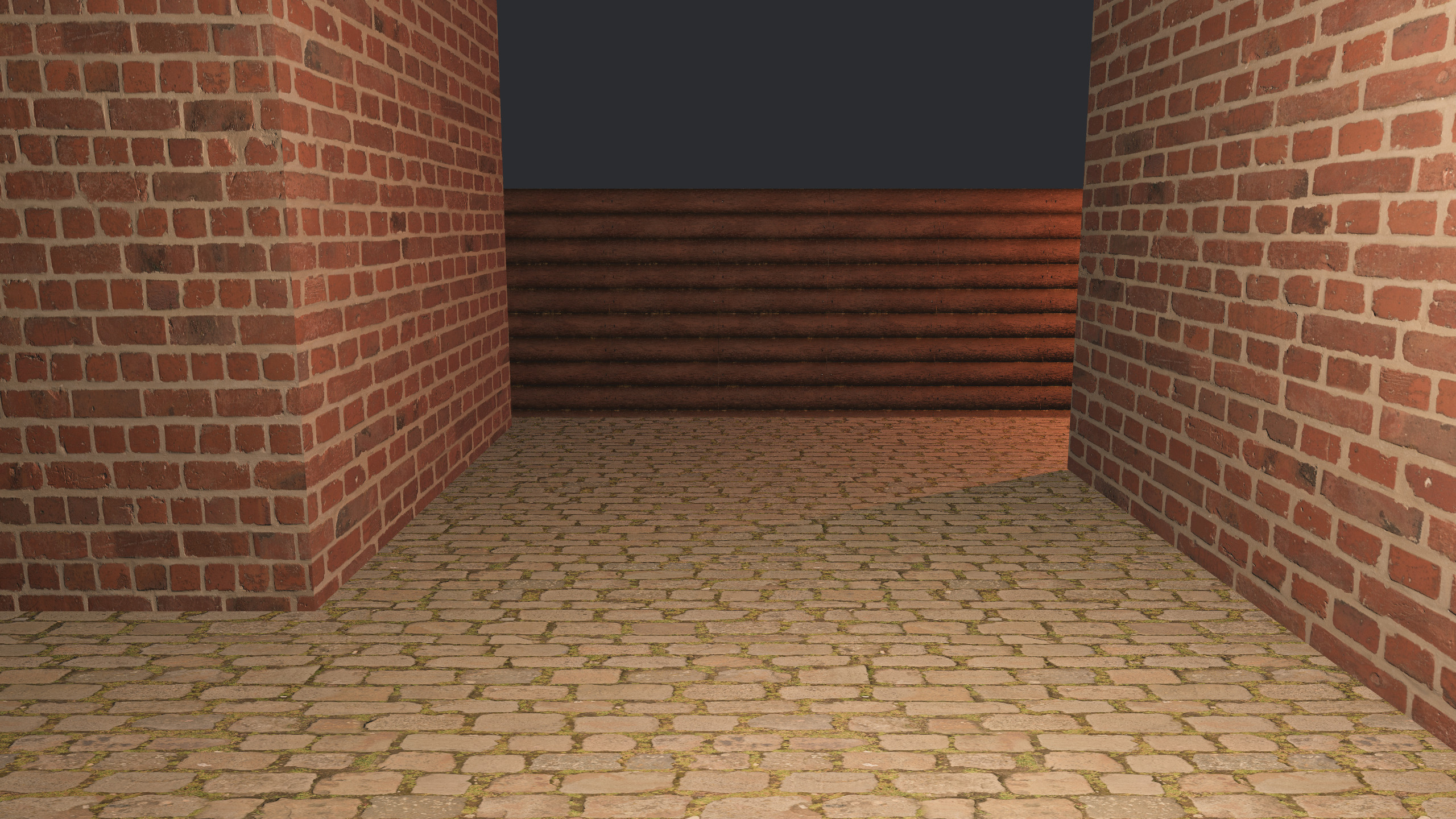

The result is the following scene in Bevy:

Oh no! Everything is stretched! This is because we have set up our UVs in a way that maps the texture exactly once onto the mesh. There are a few ways to deal with this, but the most convenient is to add shader nodes that scale the texture so that it repeats:

The Mapping node's data is what gets exported to KHR_texture_transform. Look at the part in red: these scaling factors determine how often the texture is repeated in the material. Tweaking this value for all textures results in a much nicer render:
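For the case shown here (no rotation), the transform reduces to scaling and offsetting the UV coordinates, and the tiling itself comes from sampling with a repeating wrap mode (glTF's default). A plain-Rust sketch of that arithmetic follows; the function names are hypothetical, and rotation is deliberately omitted.

```rust
/// Offset and scale from KHR_texture_transform applied to one UV coordinate.
/// With rotation = 0, the extension's transform is uv * scale + offset.
pub fn transform_uv(uv: [f32; 2], offset: [f32; 2], scale: [f32; 2]) -> [f32; 2] {
    [uv[0] * scale[0] + offset[0], uv[1] * scale[1] + offset[1]]
}

/// What a "repeat" sampler effectively does with coordinates outside [0, 1):
/// keep only the fractional part, so the texture tiles.
pub fn wrap_repeat(uv: [f32; 2]) -> [f32; 2] {
    [uv[0] - uv[0].floor(), uv[1] - uv[1].floor()]
}
```

With a scale of 4, UVs that originally spanned the mesh once now span 0 to 4, so the texture repeats four times along each axis instead of stretching.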

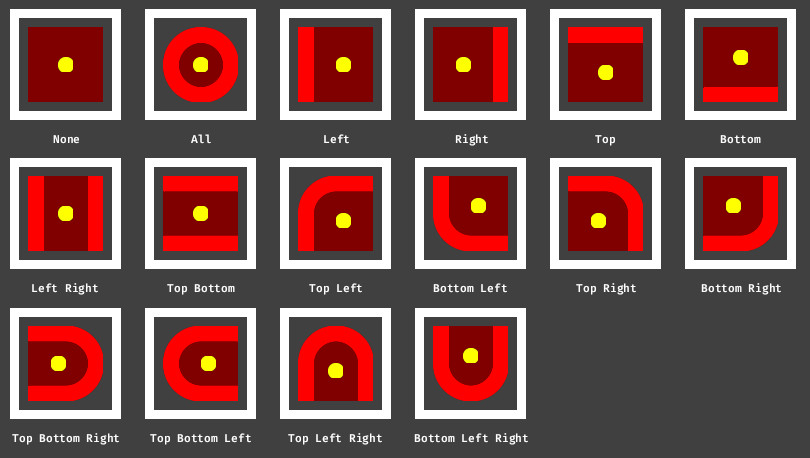
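If you're curious what those scaling factors actually do to a texture coordinate, here is a minimal sketch. It assumes zero rotation. Under that assumption, KHR_texture_transform scales the UV and then adds the offset. A "repeat" sampler then keeps only the fractional part of the result, so a scale of 4 tiles the texture four times. The helper names are hypothetical, and this is not Bevy's shader code.

```rust
/// Hypothetical helper: apply a KHR_texture_transform-style scale and offset
/// to a UV coordinate. The extension's rotation step is assumed to be zero here.
fn transform_uv(uv: [f32; 2], offset: [f32; 2], scale: [f32; 2]) -> [f32; 2] {
    // Scale first, then translate.
    [uv[0] * scale[0] + offset[0], uv[1] * scale[1] + offset[1]]
}

/// Hypothetical helper: emulate a "repeat" sampler by keeping only the
/// fractional part of each coordinate.
fn wrap_repeat(uv: [f32; 2]) -> [f32; 2] {
    [uv[0] - uv[0].floor(), uv[1] - uv[1].floor()]
}
```

With a scale of 4, the mesh's UV range [0, 1] becomes [0, 4]. Every whole unit of that range maps back onto the same texture, which is exactly the tiling seen in the render above.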

UI Node Border Radius #

- Authors: @chompaa, @pablo-lua, @alice-i-cecile, @bushrat011899

- PR #12500

Border radius for UI nodes has been a long-requested feature for Bevy. Now it's supported!

To apply border radius to a UI node, there is a new component BorderRadius. NodeBundle and ButtonBundle now have a field for this component called border_radius:

```rust
commands.spawn(NodeBundle {
    style: Style {
        width: Val::Px(50.0),
        height: Val::Px(50.0),
        // We need a border to round a border, after all!
        border: UiRect::all(Val::Px(5.0)),
        ..default()
    },
    border_color: BorderColor(Color::BLACK),
    // Apply the radius to all corners.
    // Optionally, you could use `BorderRadius::all`.
    border_radius: BorderRadius {
        top_left: Val::Px(50.0),
        top_right: Val::Px(50.0),
        bottom_right: Val::Px(50.0),
        bottom_left: Val::Px(50.0),
    },
    ..default()
});
```
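Rounded corners like these are commonly drawn with a signed distance function (SDF) for a rounded rectangle. Below is a sketch of the widely used rounded-box SDF. It illustrates the technique and is not necessarily Bevy's exact UI shader. It also assumes, for illustration, that the radius is clamped to half of the shorter side. Under that assumption, the 50x50 node above, with 50 px radii, renders as a circle.

```rust
/// Signed distance from point `p` (relative to the box center) to a rectangle with
/// half-extents `half_size` and a uniform corner `radius`.
/// Negative inside, zero on the edge, positive outside.
fn sd_rounded_box(p: [f32; 2], half_size: [f32; 2], radius: f32) -> f32 {
    // Assumption for this sketch: clamp the radius so the corners never overlap.
    let r = radius.min(half_size[0]).min(half_size[1]).max(0.0);
    // Fold into the first quadrant and shrink the box by the radius.
    let qx = p[0].abs() - half_size[0] + r;
    let qy = p[1].abs() - half_size[1] + r;
    // Distance to the shrunken box (outside part plus inside part), then inflate by `r`.
    let outside = (qx.max(0.0).powi(2) + qy.max(0.0).powi(2)).sqrt();
    let inside = qx.max(qy).min(0.0);
    outside + inside - r
}
```

A fragment shader would typically turn this distance into coverage (with a little anti-aliasing) to decide how opaque each pixel of the node is.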

There's a new example showcasing this new API, a screenshot of which can be seen below:

Animation Blending with the AnimationGraph #

- Authors: @pcwalton, @rparrett, @james7132

- PR #11989

Through the eyes of a beginner, handling animation seems simple enough: define a series of keyframes that transform the various bits of your model into the desired poses, slap some interpolation on there to move smoothly between them, and start and stop the animation when the user tells you to. Easy!

But modern animation pipelines (especially in 3D!) are substantially more complex: animators expect to be able to smoothly blend and programmatically alter different animations dynamically in response to gameplay. In order to capture this richness, the industry has developed the notion of an animation graph, which is used to couple the underlying state machine of a game object to the animations that should be playing, and the transitions that should occur between each of the various states.

A player character may be walking, running, slashing a sword, defending with a sword... to create a polished effect, animators need to be able to change between these animations smoothly, change the speed of the walk cycle to match the movement speed along the ground and even perform multiple animations at once!

In Bevy 0.14, we've implemented the Animation Composition RFC, providing a low-level API that brings code- and asset-driven animation blending to Bevy.

```rust
#[derive(Resource)]
struct ExampleAnimationGraph(Handle<AnimationGraph>);

fn programmatic_animation_graph(
    mut commands: Commands,
    asset_server: Res<AssetServer>,
    mut animation_graphs: ResMut<Assets<AnimationGraph>>,
) {
    // Create the nodes.
    let mut animation_graph = AnimationGraph::new();
    let blend_node = animation_graph.add_blend(0.5, animation_graph.root);
    animation_graph.add_clip(
        asset_server.load(GltfAssetLabel::Animation(0).from_asset("models/animated/Fox.glb")),
        1.0,
        animation_graph.root,
    );
    animation_graph.add_clip(
        asset_server.load(GltfAssetLabel::Animation(1).from_asset("models/animated/Fox.glb")),
        1.0,
        blend_node,
    );
    animation_graph.add_clip(
        asset_server.load(GltfAssetLabel::Animation(2).from_asset("models/animated/Fox.glb")),
        1.0,
        blend_node,
    );

    // Add the graph to our collection of assets.
    let handle = animation_graphs.add(animation_graph);

    // Hold onto the handle.
    commands.insert_resource(ExampleAnimationGraph(handle));
}
```

While it can be used to great effect today, most animators will ultimately prefer editing these graphs with a GUI. We plan to build a GUI on top of this API as part of the fabled Bevy Editor. Today, there are also third-party solutions like bevy_animation_graph.

To learn more and see what the asset-driven approach looks like, take a look at the new animation_graph example.
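To build some intuition for what the weights in the example above mean, here is a simplified, hypothetical blend tree. Each node's effective weight is the product of the weights on its path to the root. The output is the weighted average of what each clip samples. Bevy's real evaluation works per animation curve and may differ in the details, so treat `BlendTree` and friends as illustrative only.

```rust
/// A hypothetical, simplified blend tree (not Bevy's `AnimationGraph`).
/// Each clip is represented by the single value it samples this frame.
enum NodeKind {
    Blend,
    Clip(f32),
}

struct Node {
    kind: NodeKind,
    weight: f32,
    parent: Option<usize>,
}

struct BlendTree {
    nodes: Vec<Node>,
}

impl BlendTree {
    /// Index of the root blend node.
    const ROOT: usize = 0;

    fn new() -> Self {
        let root = Node { kind: NodeKind::Blend, weight: 1.0, parent: None };
        Self { nodes: vec![root] }
    }

    fn add_node(&mut self, kind: NodeKind, weight: f32, parent: usize) -> usize {
        self.nodes.push(Node { kind, weight, parent: Some(parent) });
        self.nodes.len() - 1
    }

    fn add_blend(&mut self, weight: f32, parent: usize) -> usize {
        self.add_node(NodeKind::Blend, weight, parent)
    }

    fn add_clip(&mut self, sampled_value: f32, weight: f32, parent: usize) -> usize {
        self.add_node(NodeKind::Clip(sampled_value), weight, parent)
    }

    /// Multiply the weights along the path from `index` up to the root.
    fn effective_weight(&self, mut index: usize) -> f32 {
        let mut weight = 1.0;
        loop {
            let node = &self.nodes[index];
            weight *= node.weight;
            match node.parent {
                Some(parent) => index = parent,
                None => return weight,
            }
        }
    }

    /// Normalized weighted average of every clip's sampled value.
    fn evaluate(&self) -> f32 {
        let (mut sum, mut total) = (0.0, 0.0);
        for (index, node) in self.nodes.iter().enumerate() {
            if let NodeKind::Clip(value) = node.kind {
                let w = self.effective_weight(index);
                sum += value * w;
                total += w;
            }
        }
        if total > 0.0 { sum / total } else { 0.0 }
    }
}
```

Mirroring the Fox graph, a clip under the root gets weight 1.0, while each clip under the 0.5 blend node ends up with an effective weight of 0.5.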

Improved Color API #

- Authors: @viridia, @mockersf

- PR #12013

Colors are a huge part of building a good game: UI, effects, shaders and more all need fully-featured, correct and convenient color tools.

Bevy now supports a broad selection of color spaces, each with their own type (e.g. LinearRgba, Hsla, Oklaba), and offers a wide range of fully documented operations on and conversions between them.

The new API is more error-resistant, more idiomatic and allows us to save work by storing the LinearRgba type in our rendering internals. This solid foundation has allowed us to implement a wide range of useful operations, clustered into traits like Hue or Alpha, allowing you to operate over any color space with the required property. Critically, color mixing / blending is now supported: perfect for procedurally generating color palettes and working with animations.

```rust
use bevy_color::palettes::tailwind;
use bevy_color::prelude::*;

// Each color space now corresponds to a specific type
let red_srgb = Srgba::rgb(1., 0., 0.);

// All non-standard color space conversions are done through the shortest path between
// the source and target color spaces to avoid a quadratic explosion of generated code.
// This conversion...
let red = Oklcha::from(red_srgb);
// ...is implemented using
let same_red = Oklcha::from(Oklaba::from(LinearRgba::from(red_srgb)));

// We've added the `tailwind` palette colors: perfect for quick-but-pretty prototyping!
// And the existing CSS palette is now actually consistent with the industry standard :p
let blue = tailwind::BLUE_500;

// The color space that you're mixing your colors in has a huge impact!
// Consider using the scientifically-motivated `Oklcha` or `Oklaba` for a perceptually uniform effect.
// `mix` expects both colors in the same color space, so convert `blue` first.
let purple = red.mix(&Oklcha::from(blue), 0.5);
```

Most of the user-facing APIs still accept a colorspace-agnostic Color (which now wraps our color-space types), while rendering internals use the physically-based LinearRgba type. For an overview of the different color spaces, and what they're each good for, please check out our color space usage documentation.

bevy_color offers a solid, type-safe foundation, but it's just getting started. If you'd like another color space or there are more things you'd like to do to your colors, please open an issue or PR and we'd be happy to help!

Also note that bevy_color is intended to operate effectively as a stand-alone crate: feel free to take a dependency on it for your non-Bevy projects as well.

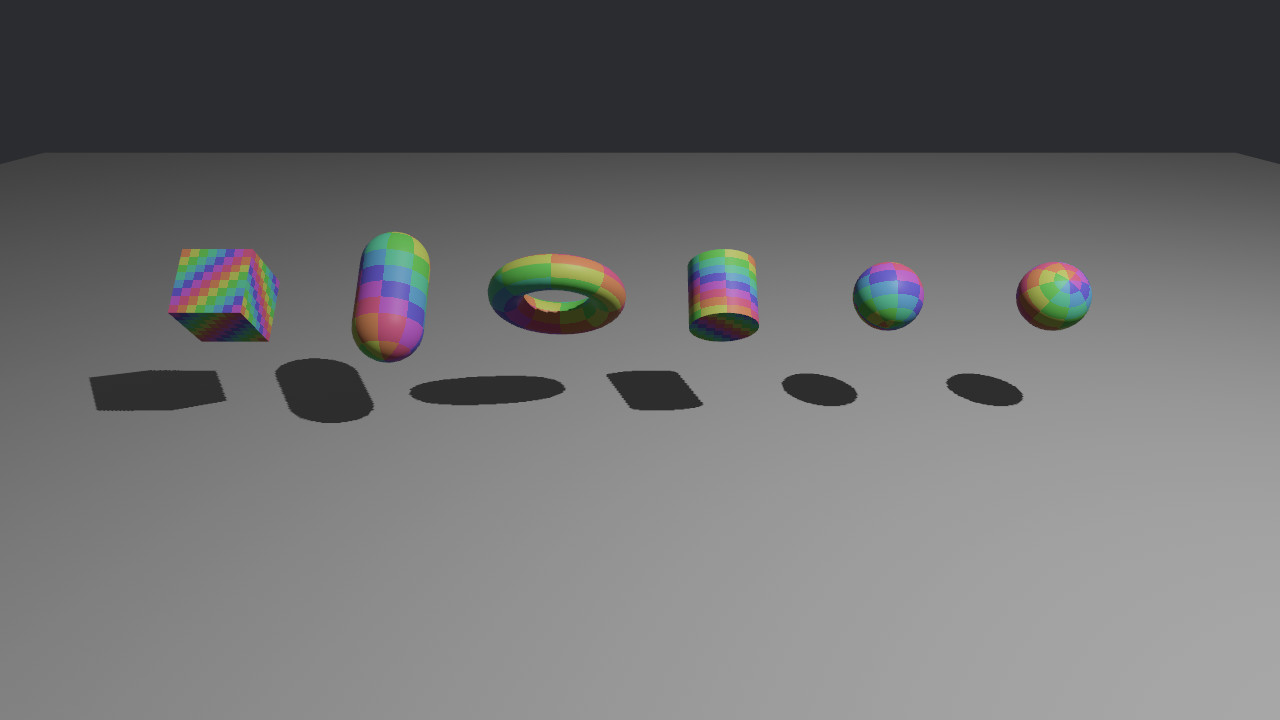

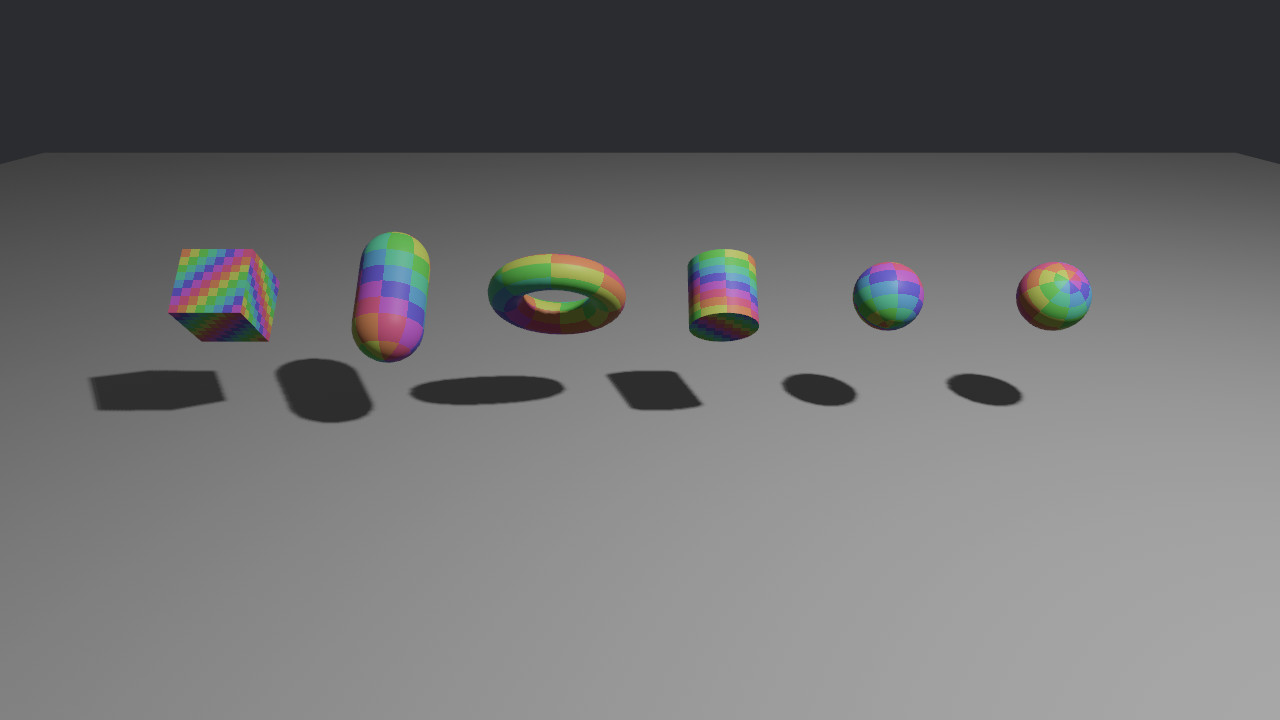
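To see why the mixing space matters so much, here is a small std-only sketch built on the standard sRGB transfer functions. The helper names are hypothetical and are not bevy_color's API. Mixing pure red and pure blue halfway in gamma-encoded sRGB gives 0.5 in the red and blue channels. Mixing them in linear RGB and encoding the result back to sRGB gives roughly 0.735 instead: a noticeably brighter purple.

```rust
/// Standard sRGB transfer function: encoded [0, 1] -> linear [0, 1].
fn srgb_to_linear(c: f32) -> f32 {
    if c <= 0.04045 {
        c / 12.92
    } else {
        ((c + 0.055) / 1.055).powf(2.4)
    }
}

/// Inverse transfer function: linear [0, 1] -> encoded sRGB [0, 1].
fn linear_to_srgb(c: f32) -> f32 {
    if c <= 0.0031308 {
        c * 12.92
    } else {
        1.055 * c.powf(1.0 / 2.4) - 0.055
    }
}

/// Per-channel linear interpolation between two RGB triples.
fn mix(a: [f32; 3], b: [f32; 3], t: f32) -> [f32; 3] {
    [0, 1, 2].map(|i| a[i] + (b[i] - a[i]) * t)
}

/// Mix two sRGB-encoded colors in linear space, then re-encode the result as sRGB.
fn mix_in_linear(a: [f32; 3], b: [f32; 3], t: f32) -> [f32; 3] {
    let linear = mix(a.map(srgb_to_linear), b.map(srgb_to_linear), t);
    linear.map(linear_to_srgb)
}
```

Perceptual spaces like Oklab go one step further by trying to make equal numeric steps look like equal visual steps. That is why the comments above recommend Oklcha or Oklaba for blending.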

Extruded Shapes #

- Authors: @lynn-lumen

- PR #13270

Bevy 0.14 introduces an entirely new group of primitives: extrusions!

An extrusion is a 2D primitive (the base shape) that is extruded into a third dimension by some depth. The resulting shape is a prism (or in the special case of the circle, a cylinder).

```rust
// Create an ellipse with width 2 and height 1.
let my_ellipse = Ellipse::from_size(Vec2::new(2.0, 1.0));

// Create an extrusion of this ellipse with a depth of 1.
let my_extrusion = Extrusion::new(my_ellipse, 1.);
```

All extrusions are extruded along the Z-axis. This guarantees that an extrusion of depth 0 and the corresponding base shape are identical, just as one would expect.

Measuring and Sampling

Since all extrusions with base shapes that implement Measured2d implement Measured3d, you can easily get the surface area or volume of an extrusion. If you have an extrusion of a custom 2D primitive, you can simply implement Measured2d for your primitive and Measured3d will be implemented automatically for the extrusion.

Likewise, you can sample the boundary and interior of any extrusion if the base shape of the extrusion implements ShapeSample<Output = Vec2> and Measured2d.

```rust
use bevy::math::prelude::*;
use rand::{rngs::StdRng, SeedableRng};

// Create a 2D capsule with radius 1 and length 2, extruded to a depth of 3
let extrusion = Extrusion::new(Capsule2d::new(1.0, 2.0), 3.0);

// Get the volume of the extrusion
let volume = extrusion.volume();

// Get the surface area of the extrusion
let surface_area = extrusion.area();

// Create a random number generator
let mut rng = StdRng::seed_from_u64(4);

// Sample a random point inside the extrusion
let interior_sample = extrusion.sample_interior(&mut rng);

// Sample a random point on the surface of the extrusion
let boundary_sample = extrusion.sample_boundary(&mut rng);
```
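The measurements follow from simple geometry. Take an extrusion of depth d whose base has area A and perimeter P. Its volume is A·d, and its surface area is 2A + P·d: two caps plus the side walls. The hypothetical std-only sketch below (not Bevy's types) applies this to the capsule above. It assumes that a capsule's length is the length of its straight middle section. That gives a volume of 3π + 12 and a surface area of 8π + 20.

```rust
use std::f32::consts::PI;

/// Hypothetical stand-in for a measurable 2D primitive.
trait Measured2dSketch {
    fn area(&self) -> f32;
    fn perimeter(&self) -> f32;
}

/// A 2D capsule: a `length` x `2 * radius` rectangle capped by two semicircles.
struct Capsule2dSketch {
    radius: f32,
    length: f32,
}

impl Measured2dSketch for Capsule2dSketch {
    fn area(&self) -> f32 {
        PI * self.radius * self.radius + 2.0 * self.radius * self.length
    }
    fn perimeter(&self) -> f32 {
        2.0 * PI * self.radius + 2.0 * self.length
    }
}

/// Hypothetical extrusion of any measurable base shape along Z.
struct ExtrusionSketch<T> {
    base: T,
    depth: f32,
}

impl<T: Measured2dSketch> ExtrusionSketch<T> {
    /// Base area times depth.
    fn volume(&self) -> f32 {
        self.base.area() * self.depth
    }

    /// Two caps plus the side walls (perimeter times depth).
    fn area(&self) -> f32 {
        2.0 * self.base.area() + self.base.perimeter() * self.depth
    }
}
```

Because everything is expressed through the base shape's area and perimeter, any base shape that can be measured gets extrusion measurements for free. This mirrors the blanket-implementation idea described above.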

Bounding

You can also get bounding spheres and Axis-Aligned Bounding Boxes (AABBs) for extrusions. If you have a custom 2D primitive that implements Bounded2d, you can simply implement BoundedExtrusion for your primitive. The default implementation will give optimal results but may be slower than a solution fitted to your primitive.
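Take an extrusion that is centered on the origin and extruded along Z. Simple conservative bounds follow directly from the base shape's 2D bounds. The AABB keeps the base's X/Y extents and spans ±depth/2 along Z. A bounding sphere can be built from the base's bounding circle with radius sqrt(r² + (depth/2)²), assuming that circle is centered at the origin. This hypothetical sketch illustrates that idea; it is not BoundedExtrusion's actual default implementation.

```rust
/// AABB (min, max) of an extrusion along Z, given the base shape's 2D AABB.
fn extrusion_aabb(base_min: [f32; 2], base_max: [f32; 2], depth: f32) -> ([f32; 3], [f32; 3]) {
    let half_depth = depth / 2.0;
    (
        [base_min[0], base_min[1], -half_depth],
        [base_max[0], base_max[1], half_depth],
    )
}

/// Radius of an origin-centered bounding sphere, given the radius of the base
/// shape's bounding circle (assumed to be centered at the origin as well).
fn extrusion_bounding_sphere_radius(base_circle_radius: f32, depth: f32) -> f32 {
    let half_depth = depth / 2.0;
    (base_circle_radius * base_circle_radius + half_depth * half_depth).sqrt()
}
```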

Meshing

Extrusions don't only exist in the world of maths, though: they can also be meshed and displayed on the screen!

And again, adding meshing support for your own primitives is made easy by Bevy! You simply need to implement meshing for your 2D primitive and then implement Extrudable for your 2D primitive's MeshBuilder.

When implementing Extrudable, you have to provide information about whether segments of the perimeter of the base shape are to be shaded smooth or flat, and what vertices belong to each of these perimeter segments.

The Extrudable trait allows you to easily implement meshing for extrusions of custom primitives. Of course, you could also implement meshing manually for your extrusion.

If you want to see a full implementation of this, you can check out the custom primitives example.

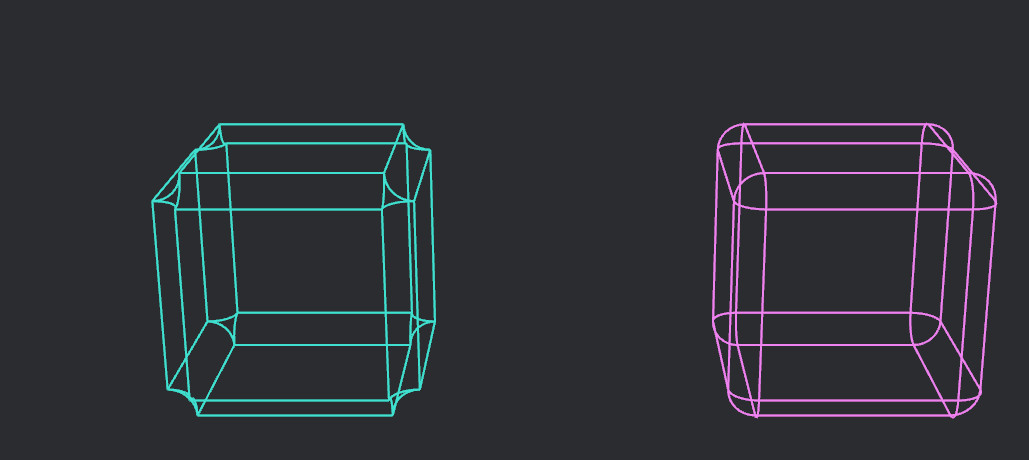

More Gizmos #

- Authors: @mweatherley, @Kanabenki, @MrGVSV, @solis-lumine-vorago, @alice-i-cecile

- PR #12211

Gizmos in Bevy allow developers to easily draw arbitrary shapes to help with debugging or authoring content, but also to visualize specific properties of your scene, such as the AABB of your meshes.

In 0.14, several new gizmos have been added to bevy::gizmos:

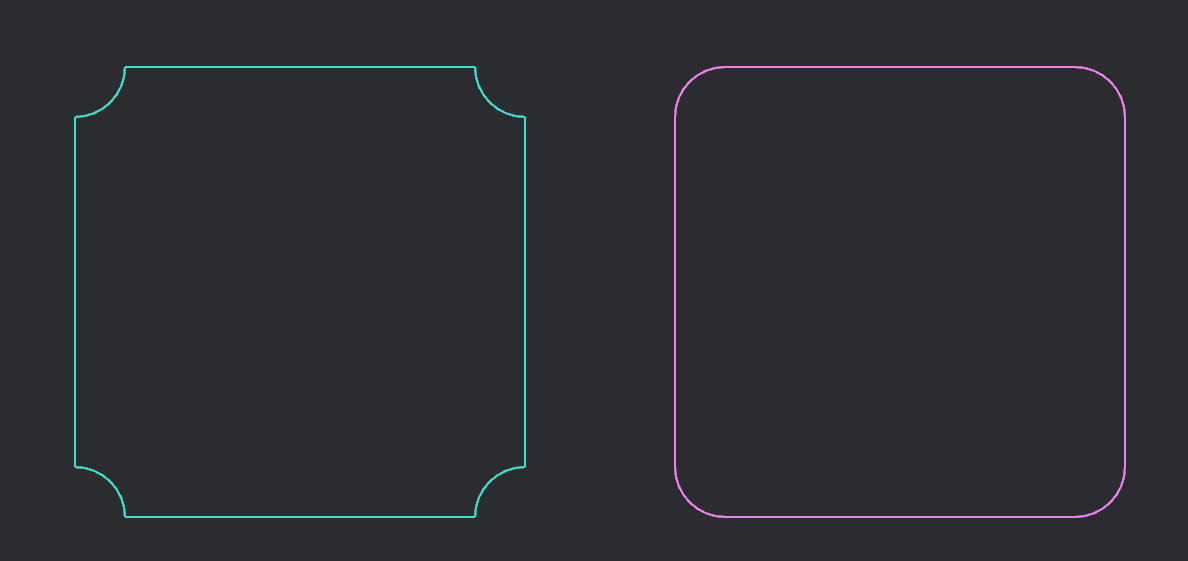

Rounded box gizmos

Rounded boxes and cubes are great for visualizing regions and colliders.

If you set the corner_radius or edge_radius to a positive value, the corners will be rounded outwards. However, if you provide a negative value, the corners will flip and curve inwards.

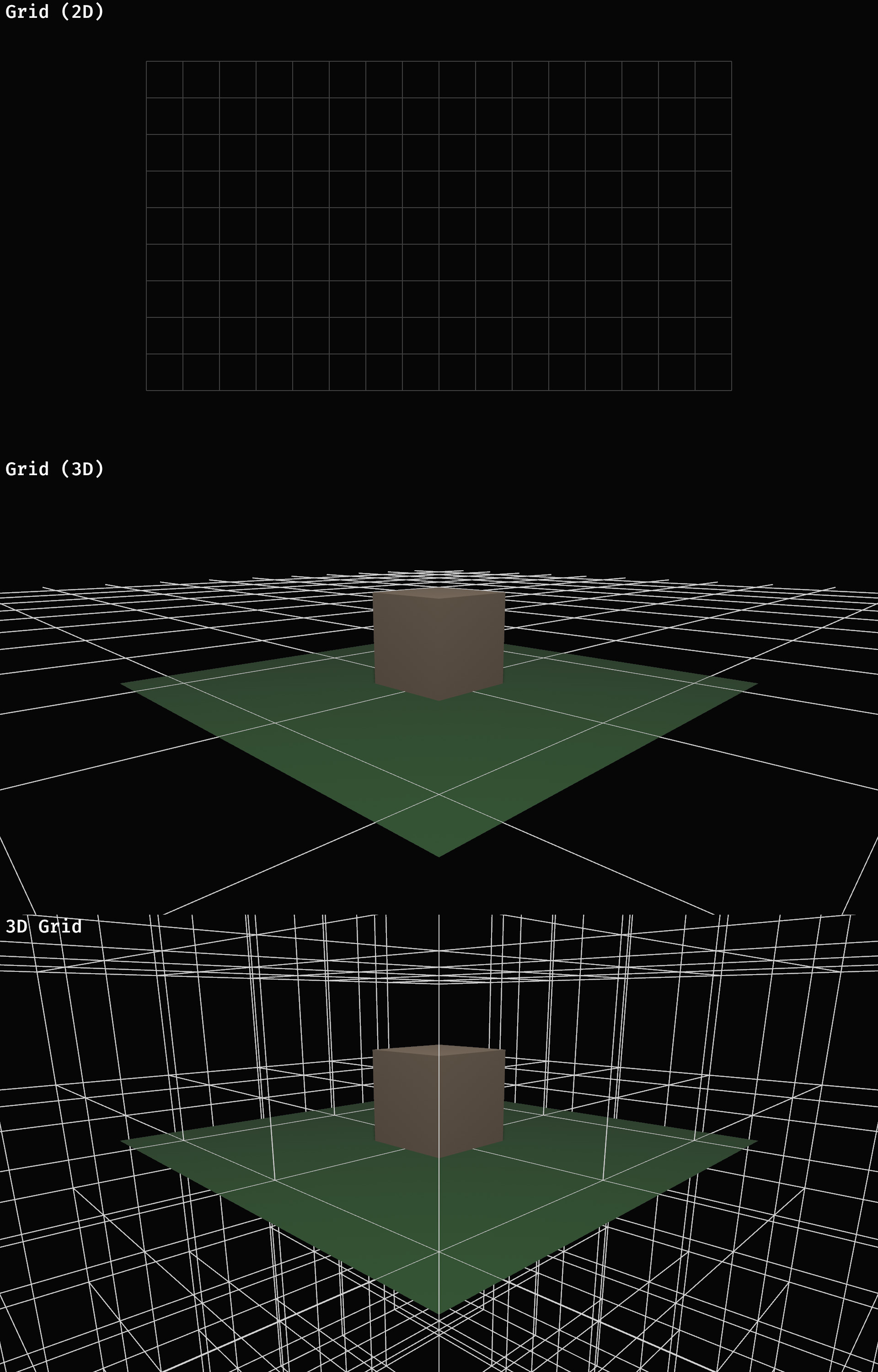

Grid Gizmos

New grid gizmo types were added with Gizmos::grid_2d and Gizmos::grid to draw a plane grid in either 2D or 3D, alongside Gizmos::grid_3d to draw a 3D grid.

Each grid type can be skewed, scaled and subdivided along its axes, and you can separately control which outer edges to draw.

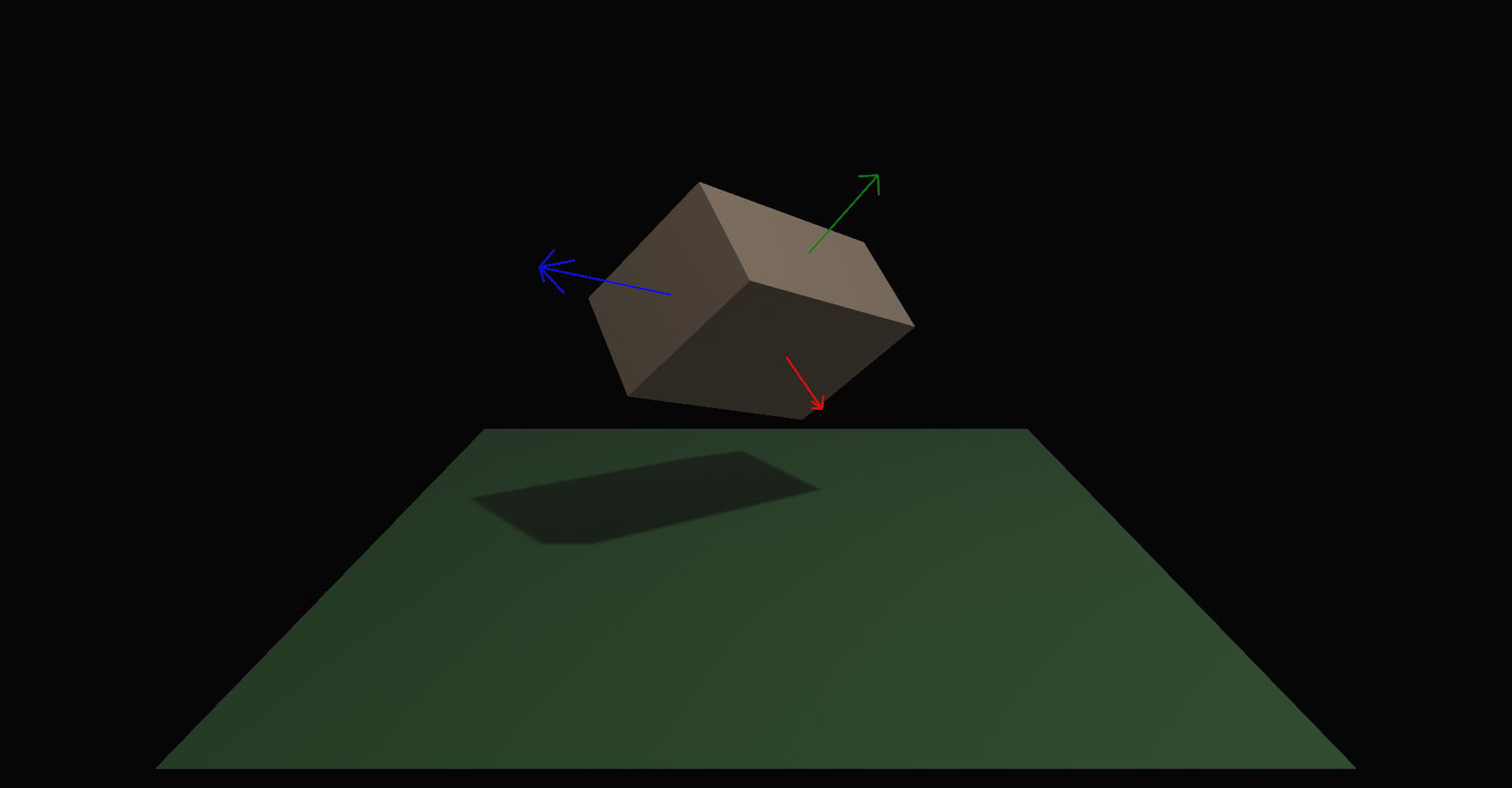

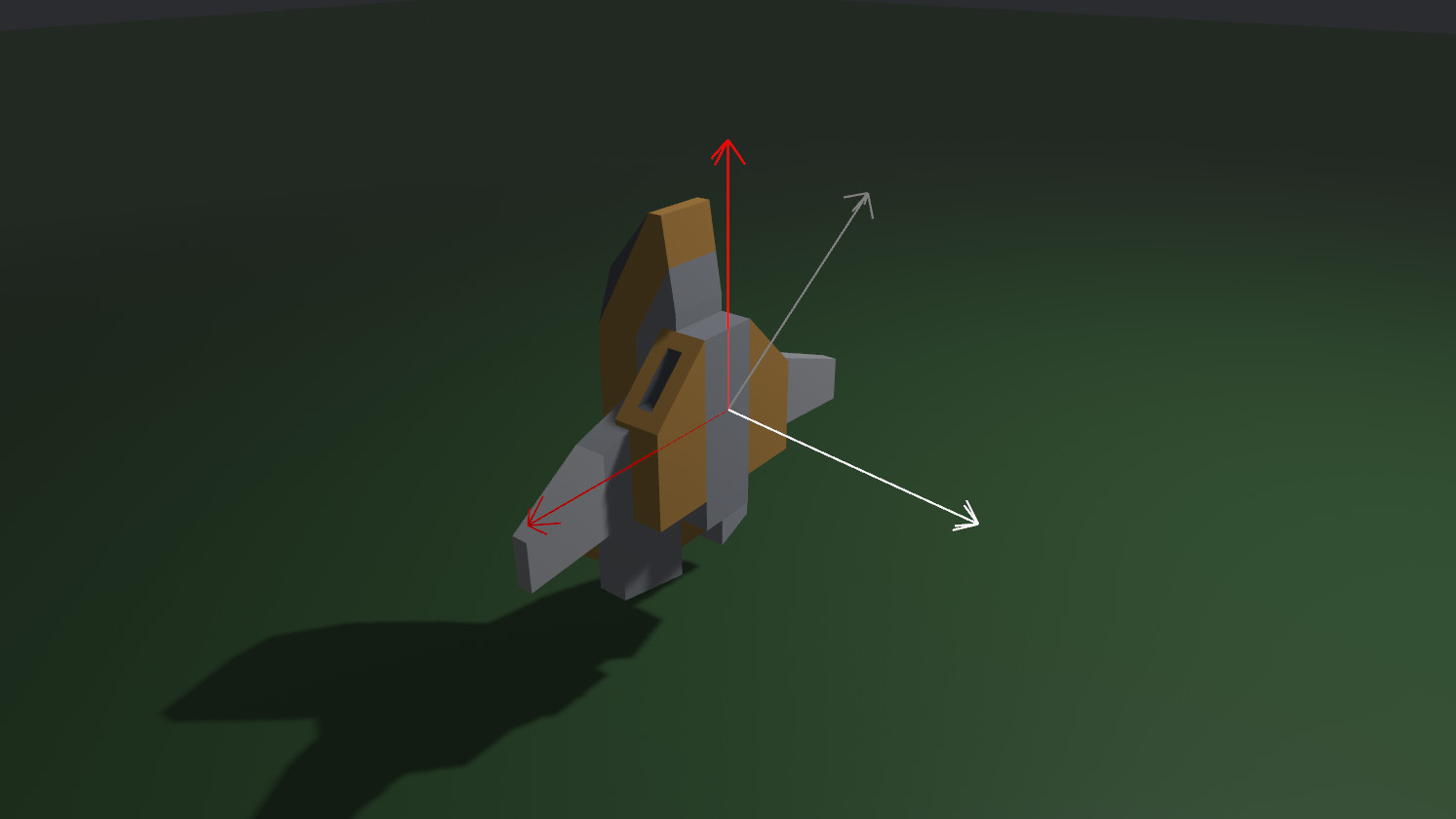

Coordinate Axes Gizmo

The new Gizmos::axes adds a simple way to show the position, orientation and scale of any object from its Transform plus a base size. The size of each axis arrow is proportional to the corresponding axis scale in the provided Transform.

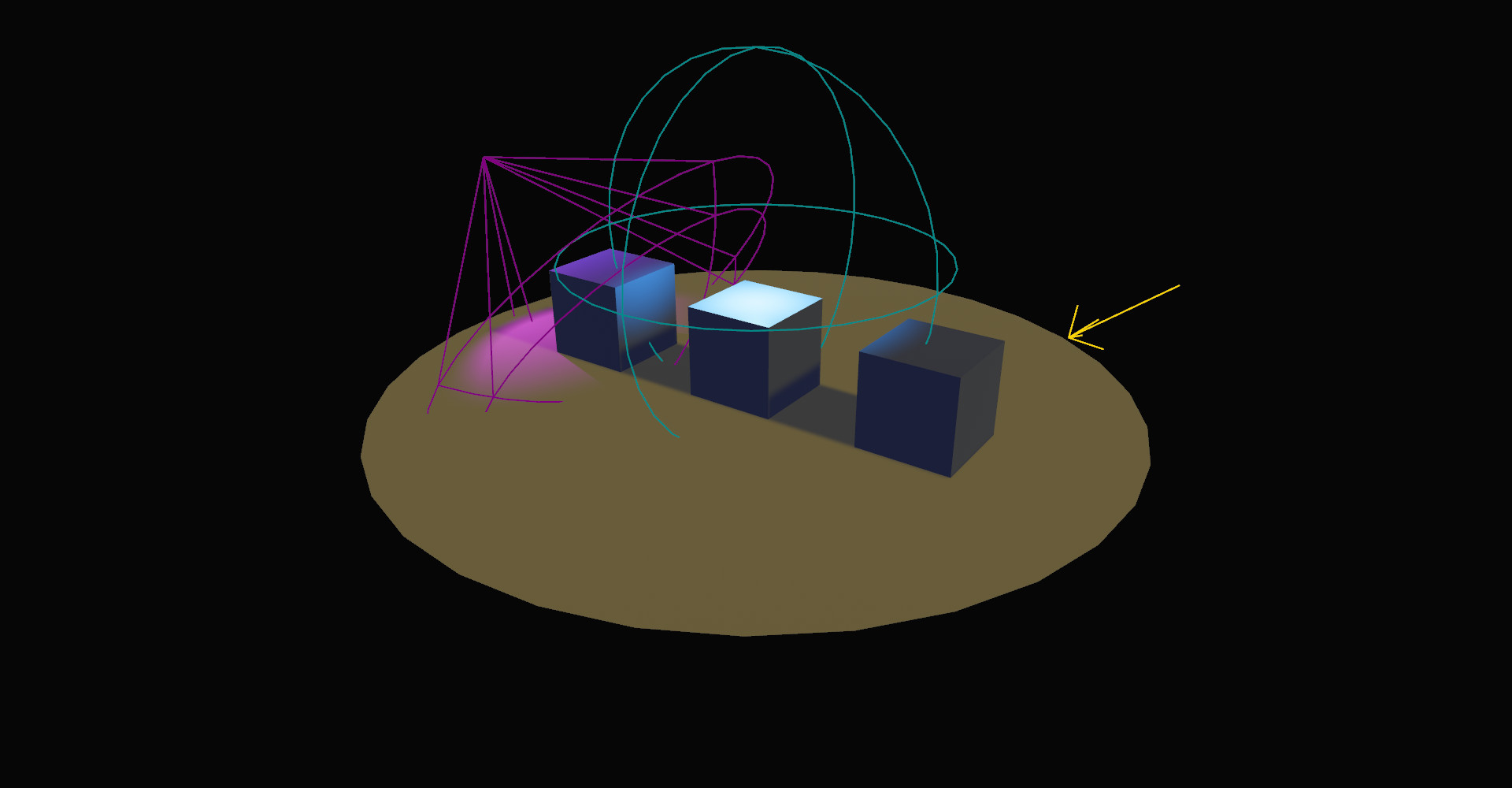

Light Gizmos

The new ShowLightGizmo component implements a retained gizmo to visualize lights for SpotLight, PointLight and DirectionalLight. Most light properties are visually represented by the gizmos, and the gizmo color can be set to match the light instance or use a variety of other behaviors.

Similar to other retained gizmos, ShowLightGizmo can be configured per-instance or globally with LightGizmoConfigGroup.

Gizmo Line Styles and Joints #

- Authors: @lynn-lumen

- PR #12394

Previous versions of Bevy supported drawing line gizmos:

```rust
use bevy::color::palettes::css::RED;
use bevy::prelude::*;

fn draw_gizmos(mut gizmos: Gizmos) {
    gizmos.line_2d(Vec2::ZERO, Vec2::splat(-80.), RED);
}
```

However, the only way to customize gizmos was to change their color, which may be limiting for some use cases. Additionally, the meeting points of two lines in a line strip (their joints) had small gaps.

As of Bevy 0.14, you can change the style of the lines and their joints for each gizmo config group:

```rust
fn draw_gizmos(mut gizmos: Gizmos) {
    gizmos.line_2d(Vec2::ZERO, Vec2::splat(-80.), RED);
}

fn setup(mut config_store: ResMut<GizmoConfigStore>) {
    // Get the config for your gizmo config group
    let (config, _) = config_store.config_mut::<DefaultGizmoConfigGroup>();
    // Set the line style and joints for this config group
    config.line_style = GizmoLineStyle::Dotted;
    config.line_joints = GizmoLineJoint::Bevel;
}
```

The new line styles can be used in both 2D and 3D and respect the line_perspective option of their config groups.

Available line styles are:

- GizmoLineStyle::Dotted: draws a dotted line with each dot being a square
- GizmoLineStyle::Solid: draws a solid line; this is the default behavior and the only one available before Bevy 0.14

Similarly, the new line joints offer a variety of options:

- GizmoLineJoint::Miter, which extends both lines until they meet at a common miter point,
- GizmoLineJoint::Round(resolution), which approximates an arc filling the gap between the two lines. The resolution determines the number of triangles used to approximate the geometry of the arc.
- GizmoLineJoint::Bevel, which connects the ends of the two joining lines with a straight segment, and
- GizmoLineJoint::None, which uses no joints and leaves small gaps: this is the default behavior and the only one available before Bevy 0.14.

You can check out the 2D gizmos example, which demonstrates the use of line styles and joints!

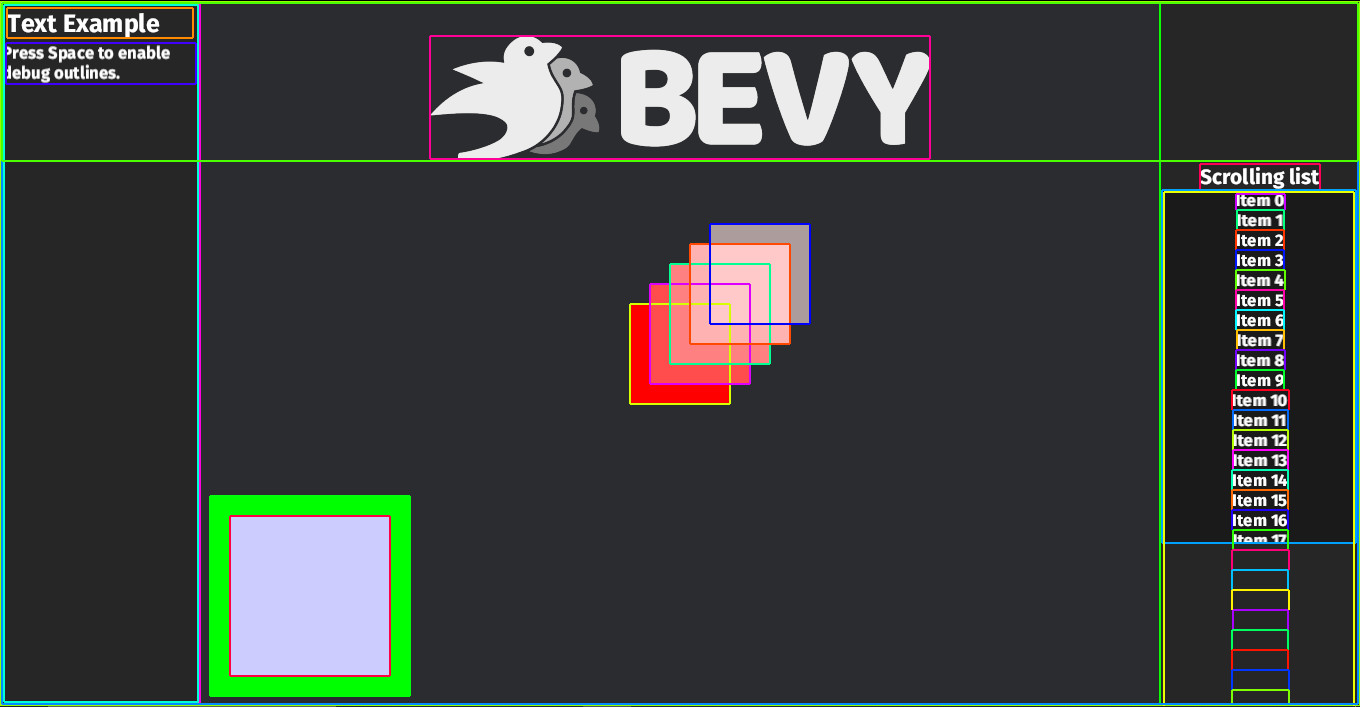

UI Node Outline Gizmos #

- Authors: @pablo-lua, @nicopap, @alice-i-cecile

- PR #11237

When working with UI on the web, being able to quickly debug the size of all your boxes is wildly useful. We now have a native layout tool that adds gizmo outlines to all Nodes.

An example of what the tool looks like once enabled

```rust
use bevy::dev_tools::ui_debug_overlay::{DebugUiPlugin, UiDebugOptions};
use bevy::prelude::*;

// You first have to add the DebugUiPlugin to your app
let mut app = App::new();
app.add_plugins(DebugUiPlugin);

// In order to enable the tool at runtime, you can add a system to toggle it
fn toggle_overlay(
    input: Res<ButtonInput<KeyCode>>,
    mut options: ResMut<UiDebugOptions>,
) {
    info_once!("The debug outlines are enabled, press Space to turn them on/off");
    if input.just_pressed(KeyCode::Space) {
        // The toggle method enables the debug overlay if disabled and disables it if enabled
        options.toggle();
    }
}

// And add the system to the app
app.add_systems(Update, toggle_overlay);
```

Contextually Clearing Gizmos #

- Authors: @Aceeri

- PR #10973

Gizmos are drawn via an immediate-mode API. This means that every update you draw all the gizmos you want to display, and only those will be shown. Previously, "update" meant "once every time the Main schedule runs". This matches the frame rate, so it usually works great! But FixedMain can run zero or several times in a single frame, so gizmos drawn there would flicker or be rendered multiple times. In Bevy 0.14, this now just works!

This can be extended for use with custom schedules. Instead of a single storage, there are now multiple storages, differentiated by a context type parameter. You can also set a type parameter on the Gizmos system param to choose which storage to write to. You choose when the storages you add get drawn or cleared: any gizmos in the default storage (the () context) during the Last schedule will be shown.

Query Joins #

- Authors: @hymm

- PR #11535

ECS Queries can now be combined, returning the data for entities that are contained in both queries.

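```rust
fn helper_function(a: &mut Query<&A>, b: &mut Query<&B>) {
    let a_count = a.iter().count();
    let b_count = b.iter().count();
    // Only entities that match both queries are returned.
    let mut a_and_b: QueryLens<(Entity, &A, &B)> = a.join(b);
    let joined_count = a_and_b.query().iter().count();
    assert!(joined_count <= a_count);
    assert!(joined_count <= b_count);
}
```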

In most cases, you should continue to simply add more parameters to your original query. Query<(&A, &B)> will generally be clearer than joining them later. But when a complex system or helper function backs you into a corner, query joins are there if you need them.

If you're familiar with database terminology, this is an "inner join". Other types of query joins are being considered. Maybe you could take a crack at the follow-up issue?
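Conceptually, an inner join keeps only the entities that appear in both inputs. The std-only sketch below models each query's results as a map from a hypothetical entity ID to that entity's component. It illustrates the join semantics only; it is not how Bevy's QueryLens works internally.

```rust
use std::collections::HashMap;

/// Hypothetical entity identifier for this sketch.
type EntityId = u32;

/// Inner join: (entity, a, b) for every entity present in both maps,
/// sorted by entity so the output order is deterministic.
fn inner_join<'a, A, B>(
    a: &'a HashMap<EntityId, A>,
    b: &'a HashMap<EntityId, B>,
) -> Vec<(EntityId, &'a A, &'a B)> {
    let mut joined: Vec<_> = a
        .iter()
        .filter_map(|(id, a_value)| b.get(id).map(|b_value| (*id, a_value, b_value)))
        .collect();
    joined.sort_by_key(|(id, _, _)| *id);
    joined
}
```

As in the Bevy example, the joined result can never be longer than either input.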

Computed States & Sub-States #

- Authors: @lee-orr, @marcelchampagne, @MiniaczQ, @alice-i-cecile

- PR #11426

Bevy's States are a simple but powerful abstraction for managing the control flow of your app.

But as users' games (and non-game applications!) grew in complexity, their limitations became more apparent. What happens if we want to capture the notion of "in a menu", but then have different states corresponding to which submenu should be open? What if we want to ask questions like "is the game paused", but that question only makes sense while we're within a game?

Finding a good abstraction for this required several attempts and a great deal of both experimentation and discussion.

While your existing States code will work exactly as before, there are now two additional tools you can reach for if you're looking for more expressiveness: computed states and sub-states.

Let's begin with a simple state declaration:

```rust
#[derive(States, Clone, PartialEq, Eq, Hash, Debug, Default)]
enum GameState {
    #[default]
    Menu,
    InGame {
        paused: bool,
    },
}
```

The addition of the paused field means that simply checking for GameState::InGame doesn't work: the states differ depending on its value, and we may want to distinguish between game systems that run when the game is paused and those that run when it isn't!

Computed States

While we can simply do OnEnter(GameState::InGame{paused: true}), we need to be able to reason about "while we're in the game, paused or not". To this end, we define the InGame computed state:

```rust
#[derive(Clone, PartialEq, Eq, Hash, Debug)]
struct InGame;

impl ComputedStates for InGame {
    // Computed states can be calculated from one or many source states.
    type SourceStates = GameState;

    // Now, we define the rule that determines the value of our computed state.
    fn compute(sources: GameState) -> Option<InGame> {
        match sources {
            // We can use pattern matching to express the
            // "I don't care whether or not the game is paused" logic!
            GameState::InGame { .. } => Some(InGame),
            _ => None,
        }
    }
}
```
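Computed states are derived, so it helps to see which source transitions actually change them. The std-only sketch below mirrors the GameState/InGame example with plain functions; it is hypothetical and not Bevy's scheduling code. A transition counts as exiting or entering InGame only when the computed value changes. Toggling `paused` therefore fires neither.

```rust
#[derive(Clone, Copy, PartialEq, Eq, Debug)]
enum GameState {
    Menu,
    InGame { paused: bool },
}

#[derive(Clone, Copy, PartialEq, Eq, Debug)]
struct InGame;

/// Same rule as the `compute` example above: ignore the `paused` field.
fn compute_in_game(source: GameState) -> Option<InGame> {
    match source {
        GameState::InGame { .. } => Some(InGame),
        _ => None,
    }
}

/// Which of "exit InGame" / "enter InGame" a source transition would trigger,
/// returned as (exited, entered).
fn in_game_transition(from: GameState, to: GameState) -> (bool, bool) {
    let (before, after) = (compute_in_game(from), compute_in_game(to));
    if before == after {
        // The computed value did not change, so nothing is exited or entered.
        return (false, false);
    }
    (before.is_some(), after.is_some())
}
```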

Sub-States

In contrast, sub-states should be used when you want to keep manual control over the value through NextState, but still bind their existence to some parent state.

```rust
#[derive(SubStates, Clone, PartialEq, Eq, Hash, Debug, Default)]
// This macro means that `GamePhase` will only exist when we're in the `InGame` computed state.
// The intermediate computed state is helpful for clarity here, but isn't required:
// you can manually `impl SubStates` for more control, multiple parent states and non-default initial value!
#[source(InGame = InGame)]
enum GamePhase {
    #[default]
    Setup,
    Battle,
    Conclusion,
}
```

Initialization

Initializing our states is easy: just call the appropriate method on App and all of the required machinery will be set up for you.

```rust
App::new()
    .init_state::<GameState>()
    .add_computed_state::<InGame>()
    .add_sub_state::<GamePhase>();
```

Just like any other state, computed states and substates work with all of the tools you're used to: the State and NextState resources, OnEnter, OnExit and OnTransition schedules and the in_state run condition. Make sure to visit both examples for more information!

The only exception is that, for correctness, computed states cannot be mutated through NextState. Instead, they are strictly derived from their parent states; added, removed and updated automatically during state transitions based on the provided compute method.

All of Bevy's state tools are now found in a dedicated bevy_state crate, which can be controlled via a feature flag. Yearning for the days of state stacks? Wish that there was a method for re-entering states? All of the state machinery relies only on public ECS tools (resources, schedules, and run conditions), making it easy to build on top of. We know that state machines are very much a matter of taste, so if our design isn't to your liking, consider taking advantage of Bevy's modularity and writing your own abstraction or using one supplied by the community!

State Scoped Entities #

- Authors: @MiniaczQ, @alice-i-cecile, @mockersf

- PR #13649

State scoped entities is a pattern that naturally emerged in community projects. Bevy 0.14 has embraced it!

```rust
#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug, Default, States)]
enum GameState {
    #[default]
    Menu,
    InGame,
}

fn spawn_player(mut commands: Commands) {
    commands.spawn((
        // We mark this entity with the `StateScoped` component.
        // When the provided state is exited, the entity will be
        // despawned recursively with all its children.
        StateScoped(GameState::InGame),
        // Fill in your player's sprite here.
        SpriteBundle::default(),
    ));
}

App::new()
    .init_state::<GameState>()
    // We need to install the appropriate machinery for the cleanup code
    // to run, once for each state type.
    .enable_state_scoped_entities::<GameState>()
    .add_systems(OnEnter(GameState::InGame), spawn_player);
```

By binding entity lifetime to a state during setup, we can dramatically reduce the amount of cleanup code we have to write!
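The underlying idea is simple: when a state is exited, despawn every entity scoped to it, together with all of its descendants. The std-only sketch below models that cleanup with a hypothetical flat entity list and parent links. It illustrates the pattern and is not Bevy's implementation.

```rust
use std::collections::HashSet;

#[derive(Clone, Copy, PartialEq, Eq, Debug)]
enum GameState {
    Menu,
    InGame,
}

/// Hypothetical entity record: an ID, an optional parent and an optional scope.
struct EntityRecord {
    id: u32,
    parent: Option<u32>,
    scope: Option<GameState>,
}

/// Despawn every entity scoped to `exited`, plus all of its descendants.
fn despawn_scoped(entities: &mut Vec<EntityRecord>, exited: GameState) {
    // Start with the entities that are directly scoped to the exited state...
    let mut doomed: HashSet<u32> = entities
        .iter()
        .filter(|e| e.scope == Some(exited))
        .map(|e| e.id)
        .collect();
    // ...then keep adding children of doomed entities until nothing changes.
    loop {
        let before = doomed.len();
        for e in entities.iter() {
            if let Some(parent) = e.parent {
                if doomed.contains(&parent) {
                    doomed.insert(e.id);
                }
            }
        }
        if doomed.len() == before {
            break;
        }
    }
    entities.retain(|e| !doomed.contains(&e.id));
}
```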

State Identity Transitions #

- Authors: @MiniaczQ, @alice-i-cecile

- PR #13579

Users have sometimes asked us to trigger exit and entry steps when moving from a state to itself. While this has its uses (refreshing is the core idea), it can be surprising and unwanted in other cases. We've found a compromise that lets users hook into this type of transition if it's something they need.

StateTransitionEvent events will now include transitions from a state to itself, which will also propagate to all dependent ComputedStates and SubStates.

Because it is a niche feature, OnExit and OnEnter schedules will ignore the new identity transitions by default, but you can visit the new custom_transitions example to see how you can bypass or change that behavior!

GPU Frustum Culling #

- Authors: @pcwalton

- PR #12889

Bevy's rendering stack is often CPU-bound: by shifting more work onto the GPU, we can better balance the load and render more shiny things faster. Frustum culling is an optimization technique that automatically hides objects that are outside of a camera's view (its frustum). In Bevy 0.14, users can choose to have this work performed on the GPU, depending on the performance characteristics of their project.

Two new components are available to control frustum culling: GpuCulling and NoCpuCulling. Attach the appropriate combination of these components to a camera, and you're set.

```rust
commands.spawn((
    Camera3dBundle::default(),
    // Enable GPU frustum culling (does not automatically disable CPU frustum culling).
    GpuCulling,
    // Disable CPU frustum culling.
    NoCpuCulling,
));
```
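Whether it runs on the CPU or the GPU, frustum culling boils down to testing each object's bounds against the planes of the camera frustum. Here is a minimal std-only sketch of the common bounding-sphere test. It assumes each plane is stored as an inward-facing unit normal plus a distance. It illustrates the technique and is not Bevy's culling code.

```rust
/// A plane with a unit `normal` pointing into the frustum: points `p` with
/// dot(normal, p) + distance >= 0 are on the inside.
#[derive(Clone, Copy)]
struct Plane {
    normal: [f32; 3],
    distance: f32,
}

fn dot(a: [f32; 3], b: [f32; 3]) -> f32 {
    a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
}

/// Returns false only if the sphere lies entirely outside at least one plane.
/// The test is conservative: it may keep a few objects that aren't actually visible.
fn sphere_in_frustum(planes: &[Plane], center: [f32; 3], radius: f32) -> bool {
    planes
        .iter()
        .all(|plane| dot(plane.normal, center) + plane.distance >= -radius)
}
```

A real camera frustum has six planes derived from the view-projection matrix. For testing, a simple box [-1, 1]³ works just as well.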

World Command Queue #

- Authors: @james7132, @james-j-obrien

- PR #11823

Working with Commands when you have exclusive world access has always been a pain. Create a CommandQueue, generate a Commands out of that, send your commands and then apply it? Not exactly the most intuitive solution.

Now, you can access the World's own command queue:

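```rust
#[derive(Component)]
struct TestComponent;

let mut world = World::new();
let mut commands = world.commands();
commands.spawn(TestComponent);
// Apply everything queued on the world's command queue.
world.flush_commands();
```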

While this isn't the most performant approach (just apply the mutations directly to the world and skip the indirection), this API can be great for quickly prototyping with or easily testing your custom commands. It is also used internally to power component lifecycle hooks and observers.
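At its core, a command queue records mutations as boxed closures and applies them later, all at once, with exclusive access. Here is a minimal std-only sketch using a hypothetical `World` type (not Bevy's). In this sketch, commands that queue further commands are applied in the same flush.

```rust
/// Hypothetical world: a list of spawned entity names plus a command queue.
#[derive(Default)]
struct World {
    entities: Vec<String>,
    queue: Vec<Box<dyn FnOnce(&mut World)>>,
}

impl World {
    /// Record a command without applying it yet.
    fn push_command(&mut self, command: impl FnOnce(&mut World) + 'static) {
        self.queue.push(Box::new(command));
    }

    /// Apply queued commands in order until the queue is empty.
    fn flush_commands(&mut self) {
        while !self.queue.is_empty() {
            // Take the pending commands so they can mutate `self` (and queue more).
            let pending = std::mem::take(&mut self.queue);
            for command in pending {
                command(&mut *self);
            }
        }
    }
}
```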

As a bonus, one-shot systems now apply their commands (and other deferred system params) immediately when run! We already have exclusive world access: why introduce delays and subtle bugs?

Reduced Multi-Threaded Execution Overhead #

- Authors: @chescock, @james7132

- PR #11906

The largest source of overhead in Bevy's multithreaded system executor is thread context switching, i.e. starting and stopping threads. Each time a thread is woken up, it can take up to 30 µs if the thread's cache is cold. Minimizing these switches is an important optimization for the executor. In this cycle we landed two changes that reduce them:

Run the multi-threaded executor at the end of each system task

The system executor is responsible for checking that the dependencies for a system have run already and evaluating the run criteria and then running a task for that system. The old version of the multithreaded executor ran as a separate task that was woken up after each task completed. This would sometimes cause a new thread to be woken up for the executor to process the system completing.

By changing it so the system task tries to run the multithreaded executor after each system completes, we ensure that the multithreaded executor always runs on a thread that is already awake. This removes one source of context switches. In practice, it eliminates one to three context switches per Schedule run, for an improvement of around 30 µs per schedule. When an app has many schedules, this can add up!

Combined Event update system

There used to be one instance of the "event update system" for each event type. With just the DefaultPlugins, that results in 20+ instances of the system.

Each instance ran very quickly, so the overhead of spawning the system tasks and waking up threads to run all these systems dominated the time it took for the First schedule to run. Combining them all into one system avoids this overhead and makes the First schedule run much faster: in testing, it went from 140 µs to 25 µs. Again, not a huge win, but we're all about saving every microsecond we can!

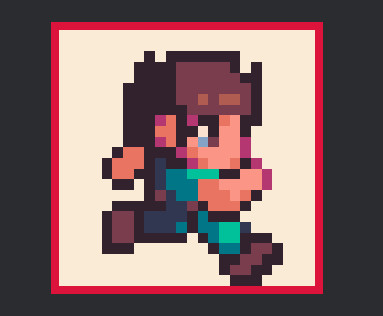

Decouple BackgroundColor from UiImage #

- Authors: @benfrankel

- PR #11165

UI images can now be given solid background colors:

The BackgroundColor component now works for UI images instead of applying a color tint on the image itself. You can still apply a color tint by setting UiImage::color. For example:

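```rust
use bevy::color::palettes::css::{ANTIQUE_WHITE, CRIMSON, DARK_RED};

commands.spawn((
    ImageBundle {
        image: UiImage {
            texture: assets.load("logo.png"),
            // Tint the image itself.
            color: DARK_RED.into(),
            ..default()
        },
        ..default()
    },
    // Fill the node behind the image with a solid color.
    BackgroundColor(ANTIQUE_WHITE.into()),
    Outline::new(Val::Px(8.0), Val::ZERO, CRIMSON.into()),
));
```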

Combined WinitEvent #

- Authors: @UkoeHB

- PR #12100

When handling inputs, the exact ordering of the events received is often very significant, even when the events are not the same type! Consider a simple drag-and-drop operation. When, exactly, did the user release the mouse button relative to the many tiny movements that they performed? Getting these details right goes a long way toward a responsive, precise user experience.

We now expose the blanket WinitEvent event stream, in addition to the existing separated event streams, which can be read and matched on directly whenever these problems arise.
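To see why a single ordered stream helps, consider finding where a drag ended. With one combined stream, the answer is simply the last cursor position seen before the release. With separate per-type streams, that relative ordering is lost. The enum below is a hypothetical stand-in, not Bevy's WinitEvent.

```rust
/// Hypothetical combined input event stream, in the order the events arrived.
#[derive(Clone, Copy, Debug)]
enum InputEvent {
    CursorMoved { x: f32, y: f32 },
    MouseButtonPressed,
    MouseButtonReleased,
}

/// Cursor position at the moment the mouse button was released, using only
/// the cursor movements that arrived before the release.
fn drop_position(events: &[InputEvent]) -> Option<(f32, f32)> {
    let mut last_position = None;
    for event in events {
        match *event {
            InputEvent::CursorMoved { x, y } => last_position = Some((x, y)),
            InputEvent::MouseButtonReleased => return last_position,
            InputEvent::MouseButtonPressed => {}
        }
    }
    // No release seen yet: the drag is still in progress.
    None
}
```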

Recursive Reflect Registration #

- Authors: @MrGVSV, @soqb, @cart, @james7132

- PR #5781

Bevy uses reflection in order to dynamically process data for things like serialization and deserialization. A Bevy app has a TypeRegistry to keep track of which types exist. Users can register their custom types when initializing the app or plugin.

```rust
#[derive(Reflect)]
struct Data<T> {
    value: T,
}

#[derive(Reflect)]
struct Blob {
    contents: Vec<u8>,
}

app
    .register_type::<Data<Blob>>()
    .register_type::<Blob>()
    .register_type::<Vec<u8>>();
```

In the code above, Data<Blob> depends on Blob, which depends on Vec<u8>, so all three types need to be registered manually, even if we only care about Data<Blob>.

This is both tedious and error-prone, especially when these type dependencies are only used in the context of other types (i.e. they aren't used as standalone types).

In 0.14, any type that derives Reflect will automatically register all of its type dependencies. So when we register Data<Blob>, Blob will be registered as well (which will register Vec<u8>), thus simplifying our registration down to a single line:

```rust
app.register_type::<Data<Blob>>();
```

Note that removing the registration for Data<Blob> now also means that Blob and Vec<u8> may not be registered either, unless they were registered some other way. If those types are needed as standalone types, they should be registered separately.
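Conceptually, recursive registration is a depth-first walk over a type's dependencies that stops at types which are already registered. In the sketch below, that check also prevents infinite loops on recursive types. The sketch uses a hypothetical `Registrable` trait and a set of `TypeId`s. In Bevy, `#[derive(Reflect)]` generates the equivalent logic, so this is an illustration rather than its API.

```rust
use std::any::TypeId;
use std::collections::HashSet;

/// Hypothetical registry that only records which types were registered.
#[derive(Default)]
struct Registry {
    types: HashSet<TypeId>,
}

/// Hypothetical stand-in for what `#[derive(Reflect)]` generates:
/// each type knows how to register the types it depends on.
trait Registrable: 'static {
    fn register_dependencies(_registry: &mut Registry) {}
}

impl Registry {
    fn register<T: Registrable>(&mut self) {
        // `insert` returns false if the type was already registered,
        // which stops the recursion (and guards against cycles).
        if self.types.insert(TypeId::of::<T>()) {
            T::register_dependencies(self);
        }
    }

    fn contains<T: 'static>(&self) -> bool {
        self.types.contains(&TypeId::of::<T>())
    }
}

impl Registrable for u8 {}

impl<T: Registrable> Registrable for Vec<T> {
    fn register_dependencies(registry: &mut Registry) {
        registry.register::<T>();
    }
}

#[allow(dead_code)]
struct Data<T> {
    value: T,
}

impl<T: Registrable> Registrable for Data<T> {
    fn register_dependencies(registry: &mut Registry) {
        registry.register::<T>();
    }
}

#[allow(dead_code)]
struct Blob {
    contents: Vec<u8>,
}

impl Registrable for Blob {
    fn register_dependencies(registry: &mut Registry) {
        registry.register::<Vec<u8>>();
    }
}
```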

Rot2 Type for 2D Rotations #

- Authors: @Jondolf, @IQuick143, @tguichaoua

- PR #11658

Ever wanted to work with rotations in 2D and get frustrated with having to choose between quaternions and a raw f32? Us too!

We've added a convenient Rot2 type for you, with plenty of helper methods. Feel free to replace that helper type you wrote, and submit little PRs for any useful functionality we're missing.

Rot2 is a great complement to the Dir2 type (formerly Direction2d). The former represents an angle, while the latter is a unit vector. These types are similar but not interchangeable, and the choice of representation depends heavily on the task at hand. You can rotate a direction using direction = rotation * Dir2::X. To recover the rotation, use Dir2::X.rotation_to(direction) or, in this case, the helper Dir2::rotation_from_x(direction).
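A 2D rotation can be stored as the cosine and sine of its angle (a unit complex number). This makes composing rotations and rotating vectors cheap. The sketch below is a hypothetical, std-only `Rot2Sketch` that illustrates this representation; it is not Bevy's Rot2 API.

```rust
/// A 2D rotation stored as (cos, sin) of its angle.
#[derive(Clone, Copy, Debug)]
struct Rot2Sketch {
    cos: f32,
    sin: f32,
}

impl Rot2Sketch {
    fn radians(angle: f32) -> Self {
        let (sin, cos) = angle.sin_cos();
        Self { cos, sin }
    }

    fn as_radians(self) -> f32 {
        self.sin.atan2(self.cos)
    }

    /// Compose two rotations: the angles add (complex multiplication).
    fn then(self, other: Self) -> Self {
        Self {
            cos: self.cos * other.cos - self.sin * other.sin,
            sin: self.sin * other.cos + self.cos * other.sin,
        }
    }

    /// Rotate a vector counterclockwise by this rotation.
    fn rotate(self, v: [f32; 2]) -> [f32; 2] {
        [self.cos * v[0] - self.sin * v[1], self.sin * v[0] + self.cos * v[1]]
    }

    /// The rotation taking unit vector `from` to unit vector `to`:
    /// cos is their dot product, sin is their 2D cross product.
    fn between(from: [f32; 2], to: [f32; 2]) -> Self {
        Self {
            cos: from[0] * to[0] + from[1] * to[1],
            sin: from[0] * to[1] - from[1] * to[0],
        }
    }
}
```

The `between` helper plays the same role as recovering a rotation from two directions in the paragraph above.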

While these types aren't used widely within the engine yet, we are aware of your pain and are evaluating proposals for how we can make working with transforms in 2D more straightforward and pleasant.

Alignment API for Transforms #

- Authors: @mweatherley

- PR #12187

Bevy 0.14 adds a new Transform::align function: a more general form of Transform::look_to that lets you specify which local axes to use as the main and secondary axes.

This allows you to do things like point the front of a spaceship at a planet you're heading toward while keeping the right wing pointed in the direction of another ship, or point the top of a ship in the direction of the tractor beam pulling it in while the front rotates to match the bigger ship's direction.

Let's consider a ship where we're going to use the front of the ship and the right wing as local axes:

```rust
// Point the local negative-Z axis (the front of the ship) in the global Y direction,
// and the local X axis (the right wing) in the global Z direction.
transform.align(Vec3::NEG_Z, Vec3::Y, Vec3::X, Vec3::Z);
```

align will move it to match the desired positions as closely as possible:

Note that not all rotations can be constructed and the documentation explains what happens in such scenarios.
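One way to build such a rotation is to orthonormalize each (main, secondary) pair into a right-handed basis and then map the local basis onto the target basis. The std-only sketch below is a hypothetical illustration of that construction, not Bevy's implementation. When the two axes of a pair are parallel, it simply returns None instead of reproducing Bevy's documented fallback behavior.

```rust
type V3 = [f32; 3];

fn dot(a: V3, b: V3) -> f32 {
    a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
}

fn cross(a: V3, b: V3) -> V3 {
    [
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    ]
}

fn normalize(v: V3) -> Option<V3> {
    let len = dot(v, v).sqrt();
    (len > 1e-6).then(|| [v[0] / len, v[1] / len, v[2] / len])
}

/// Right-handed orthonormal basis from a main and a secondary axis (Gram-Schmidt).
/// None if either axis is zero or the two axes are (nearly) parallel.
fn basis(main: V3, secondary: V3) -> Option<[V3; 3]> {
    let a = normalize(main)?;
    let d = dot(secondary, a);
    let b = normalize([secondary[0] - d * a[0], secondary[1] - d * a[1], secondary[2] - d * a[2]])?;
    Some([a, b, cross(a, b)])
}

/// A rotation that points `main_axis` along `main_direction`, and `secondary_axis`
/// as closely as possible along `secondary_direction`.
fn align(main_axis: V3, main_direction: V3, secondary_axis: V3, secondary_direction: V3) -> Option<impl Fn(V3) -> V3> {
    let local = basis(main_axis, secondary_axis)?;
    let target = basis(main_direction, secondary_direction)?;
    Some(move |v: V3| {
        // Express `v` in the local basis, then rebuild it from the target basis.
        let coords = [dot(local[0], v), dot(local[1], v), dot(local[2], v)];
        let mut out = [0.0; 3];
        for (c, axis) in coords.iter().zip(target.iter()) {
            for i in 0..3 {
                out[i] += c * axis[i];
            }
        }
        out
    })
}
```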

Random Sampling of Shapes and Directions #

- Authors: @13ros27, @mweatherley, @lynn-lumen

- PR #12484

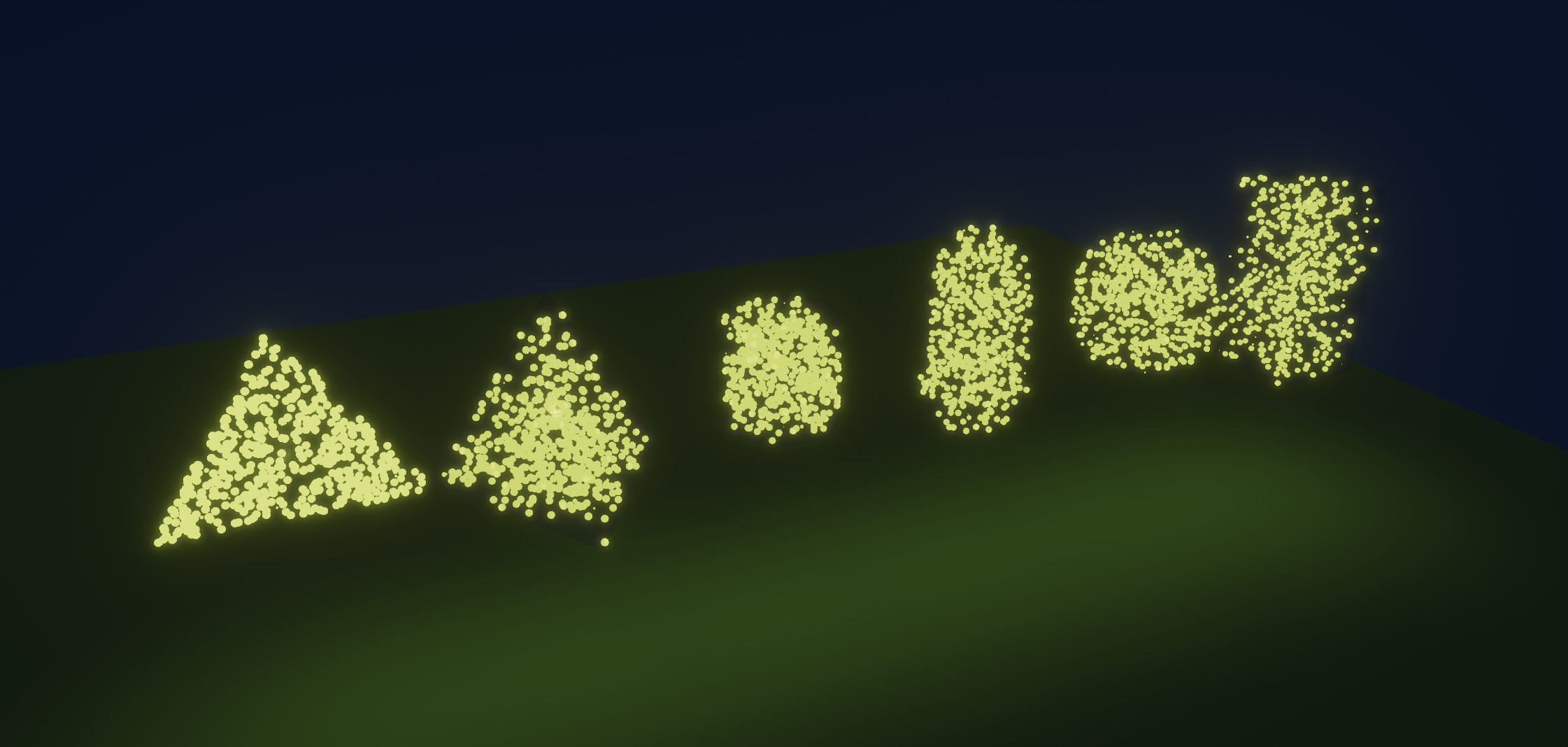

In the context of game development, it's often helpful to have access to random values, whether that's in the interest of driving behavior for NPCs, creating effects, or just trying to create variety. To help support this, a few random sampling features have been added to bevy_math, gated behind the rand feature. These are primarily geometric in nature, and they come in a couple of flavors.

First, one can sample random points from the boundaries and interiors of a variety of mathematical primitives:

In code, this can be performed in a couple different ways, using either the sample_interior/sample_boundary or interior_dist/boundary_dist APIs:

```rust
use bevy::math::prelude::*;
use rand::{distributions::Distribution, SeedableRng};
use rand_chacha::ChaCha8Rng;

let sphere = Sphere::new(1.5);

// Instantiate an Rng:
let rng = &mut ChaCha8Rng::seed_from_u64(7355608);

// Using these, sample a random point from the interior of this sphere:
let interior_pt: Vec3 = sphere.sample_interior(rng);
// or from the boundary:
let boundary_pt: Vec3 = sphere.boundary_dist().sample(rng);
let boundary_pt: Vec3 = sphere.sample_boundary(rng);

// Or, if we want a lot of points, we can use a Distribution instead...
// to sample 100000 random points from the interior
// (`&mut *rng` reborrows the generator so it can be used again below):
let interior_pts: Vec<Vec3> = sphere.interior_dist().sample_iter(&mut *rng).take(100000).collect();
// or 100000 random points from the boundary:
let boundary_pts: Vec<Vec3> = sphere.boundary_dist().sample_iter(rng).take(100000).collect();
```

Note that these methods explicitly require an Rng object, giving you control over the randomization strategy and seed.
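If you're curious what sampling uniformly from a shape involves, here is a std-only sketch for a sphere. It uses a tiny hypothetical xorshift generator; in practice, use a real Rng like the ones above. Interior points come from rejection sampling in the bounding cube. Boundary points rely on the fact that the height of a uniform point on a sphere is itself uniformly distributed (Archimedes' hat-box theorem). Bevy's own implementations may use different methods.

```rust
use std::f32::consts::TAU;

/// Tiny xorshift64 generator, for illustration only (seed must be nonzero).
struct XorShift(u64);

impl XorShift {
    /// Uniform f32 in [0, 1).
    fn next_f32(&mut self) -> f32 {
        self.0 ^= self.0 << 13;
        self.0 ^= self.0 >> 7;
        self.0 ^= self.0 << 17;
        // Keep the top 24 bits so the result is exactly representable in f32.
        (self.0 >> 40) as f32 / (1u64 << 24) as f32
    }
}

/// Uniform point inside a sphere of `radius`, by rejection sampling the bounding cube.
fn sample_interior(radius: f32, rng: &mut XorShift) -> [f32; 3] {
    loop {
        let p = [0; 3].map(|_| (rng.next_f32() * 2.0 - 1.0) * radius);
        if p[0] * p[0] + p[1] * p[1] + p[2] * p[2] <= radius * radius {
            return p;
        }
    }
}

/// Uniform point on the sphere's surface: height uniform in [-r, r], angle uniform.
fn sample_boundary(radius: f32, rng: &mut XorShift) -> [f32; 3] {
    let z = rng.next_f32() * 2.0 - 1.0;
    let phi = rng.next_f32() * TAU;
    let ring = (1.0 - z * z).max(0.0).sqrt();
    [radius * ring * phi.cos(), radius * ring * phi.sin(), radius * z]
}
```

A uniformly random 3D direction is simply a boundary sample of the unit sphere.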

The currently supported shapes are as follows:

2D: Circle, Rectangle, Triangle2d, Annulus, Capsule2d.

3D: Sphere, Cuboid, Triangle3d, Tetrahedron, Cylinder, Capsule3d, and extrusions of sampleable 2D shapes (Extrusion).

Similarly, the direction types (Dir2, Dir3, Dir3A) and quaternions (Quat) can now be constructed randomly using from_rng:

```rust
use bevy::math::prelude::*;
use rand::{random, Rng, SeedableRng};
use rand_chacha::ChaCha8Rng;

// Instantiate an Rng:
let rng = &mut ChaCha8Rng::seed_from_u64(7355608);

// Get a random direction:
let direction = Dir3::from_rng(rng);

// Similar, but requires left-hand type annotations or inference:
let another_direction: Dir3 = rng.gen();

// Using `random` to grab a value using implicit thread-local rng:
let yet_another_direction: Dir3 = random();
```
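Random rotations are less obvious than random directions. One well-known recipe is Shoemake's method, which turns three independent uniform samples in [0, 1) into a uniformly distributed unit quaternion. The sketch below assumes you already have those samples from some RNG. It makes no claim that `Quat::from_rng` is implemented this way, and the `[x, y, z, w]` array is a hypothetical stand-in for `Quat`.

```rust
use std::f32::consts::TAU;

/// Uniformly distributed unit quaternion `[x, y, z, w]` from three independent
/// uniform samples `u1, u2, u3` in [0, 1) (Shoemake's method).
fn random_unit_quat(u1: f32, u2: f32, u3: f32) -> [f32; 4] {
    let a = (1.0 - u1).sqrt();
    let b = u1.sqrt();
    let (s2, c2) = (TAU * u2).sin_cos();
    let (s3, c3) = (TAU * u3).sin_cos();
    // Squared norm = (1 - u1)(s2² + c2²) + u1(s3² + c3²) = 1.
    [a * s2, a * c2, b * s3, b * c3]
}
```

Simply normalizing four independent uniform values would not produce uniform rotations, which is why a dedicated construction like this is used.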

Tools for Profiling GPU Performance

- Authors: @LeshaInc

- PR #9135

While Tracy already lets us measure CPU time per system, our GPU diagnostics are much weaker. In Bevy 0.14 we've added support for two classes of rendering-focused statistics via the RenderDiagnosticsPlugin:

- Timestamp queries: how long did specific bits of work take on the GPU?

- Pipeline statistics: information about the quantity of work sent to the GPU.

While it may sound like timestamp queries are the ultimate diagnostic tool, they come with several caveats. Firstly, they vary quite heavily from frame to frame, as GPUs dynamically ramp their clock speeds up and down depending on the workload (idle gaps in GPU work, e.g. a run of consecutive barriers or the tail end of a large dispatch) and on the physical temperature of the GPU. To get an accurate measurement, you need to look at summary statistics: mean, median, 75th percentile and so on.

Secondly, while timestamp queries tell you how long something takes, they do not tell you why it is slow. For finding bottlenecks, you want to use a GPU profiler from your GPU vendor (NVIDIA's Nsight, AMD's RGP, Intel's GPA or Apple's Xcode). These tools give you much more detailed stats, such as cache hit rate and warp occupancy. On the other hand, they lock your GPU's clock to base speeds for stable results, so they won't give you a good indicator of real-world performance.
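The summary statistics recommended above are cheap to compute once you have collected per-frame timings. This sketch uses a hypothetical `summarize` helper, a nearest-rank percentile, and times in milliseconds. It shows why the median and percentiles are worth reading alongside the mean: a single slow frame drags the mean up while barely moving the median.

```rust
/// Summary of a set of per-frame GPU timings (milliseconds).
#[derive(Debug, PartialEq)]
struct Summary {
    mean: f64,
    median: f64,
    p75: f64,
}

/// Nearest-rank percentile of an already sorted, non-empty slice (`p` in 0..=100).
fn percentile(sorted: &[f64], p: f64) -> f64 {
    let rank = ((p / 100.0) * sorted.len() as f64).ceil() as usize;
    sorted[rank.saturating_sub(1).min(sorted.len() - 1)]
}

/// Returns `None` for an empty set of samples.
fn summarize(samples: &[f64]) -> Option<Summary> {
    if samples.is_empty() {
        return None;
    }
    let mut sorted = samples.to_vec();
    sorted.sort_by(|a, b| a.total_cmp(b));
    let mean = sorted.iter().sum::<f64>() / sorted.len() as f64;
    let mid = sorted.len() / 2;
    let median = if sorted.len() % 2 == 0 {
        (sorted[mid - 1] + sorted[mid]) / 2.0
    } else {
        sorted[mid]
    };
    Some(Summary { mean, median, p75: percentile(&sorted, 75.0) })
}
```

For example, the samples `[1.0, 1.0, 1.0, 1.0, 10.0]` have a mean of 2.8, but a median and 75th percentile of 1.0.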

RenderDiagnosticsPlugin tracks the following statistics, recorded in Bevy's DiagnosticsStore: elapsed CPU time, elapsed GPU time, vertex shader invocations, fragment shader invocations, compute shader invocations, clipper invocations, and clipper primitives.

You can also track individual render/compute passes, groups of passes (e.g. all shadow passes), and individual commands inside passes (like draw calls). To do so, instrument them using methods from the RecordDiagnostics trait.
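Named spans that cover a region of work are the core idea behind instrumenting passes. The following is only an analogy measured with CPU wall-clock time. `Diagnostics` and `SpanGuard` are hypothetical types, not Bevy's `RecordDiagnostics` API, which deals with GPU-side timings. The sketch shows a span that starts timing when it is created and records its duration under a name when it goes out of scope. Repeated frames therefore accumulate samples you could later summarize.

```rust
use std::collections::HashMap;
use std::time::{Duration, Instant};

/// Hypothetical diagnostics store: span name -> recorded durations.
#[derive(Default)]
struct Diagnostics {
    timings: HashMap<String, Vec<Duration>>,
}

/// Measures from creation until drop, then records the elapsed time.
struct SpanGuard<'a> {
    diagnostics: &'a mut Diagnostics,
    name: String,
    start: Instant,
}

impl Diagnostics {
    /// Open a named span; it closes automatically at the end of the guard's scope.
    fn span(&mut self, name: &str) -> SpanGuard<'_> {
        SpanGuard { diagnostics: self, name: name.to_owned(), start: Instant::now() }
    }
}

impl Drop for SpanGuard<'_> {
    fn drop(&mut self) {
        let elapsed = self.start.elapsed();
        let name = std::mem::take(&mut self.name);
        self.diagnostics.timings.entry(name).or_default().push(elapsed);
    }
}
```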

New Geometric Primitives

- Authors: @vitorfhc, @Chubercik, @andristarr, @spectria-limina, @salvadorcarvalhinho, @aristaeus, @mweatherley

- PR #12508

Geometric shapes find a variety of applications in game development, ranging from rendering simple items to the screen for display / debugging to use in colliders, physics, raycasting, and more.

For this, geometric shape primitives were introduced in Bevy 0.13, and work on this area has continued with Bevy 0.14, which brings the addition of Triangle3d and Tetrahedron 3D primitives, along with Rhombus, Annulus, Arc2d, CircularSegment, and CircularSector 2D primitives. As usual, these each have methods for querying geometric information like perimeter, area, and volume, and they all support meshing (where applicable) as well as gizmo display.
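As a reminder of the kind of geometric queries these primitives answer, here are the textbook formulas for a few of the new shapes. The structs and field names are hypothetical stand-ins chosen for readability, not Bevy's definitions, which may parameterize the shapes differently.

```rust
use std::f32::consts::PI;

/// Hypothetical ring between two concentric circles.
struct Annulus { inner_radius: f32, outer_radius: f32 }

impl Annulus {
    fn area(&self) -> f32 {
        PI * (self.outer_radius.powi(2) - self.inner_radius.powi(2))
    }
    /// Both the inner and the outer circle count toward the perimeter.
    fn perimeter(&self) -> f32 {
        2.0 * PI * (self.outer_radius + self.inner_radius)
    }
}

/// Hypothetical rhombus described by half the length of each diagonal.
struct Rhombus { half_diagonals: [f32; 2] }

impl Rhombus {
    /// Diagonals d1, d2 give area d1 * d2 / 2 = 2 * h1 * h2.
    fn area(&self) -> f32 { 2.0 * self.half_diagonals[0] * self.half_diagonals[1] }
    /// Each side is the hypotenuse of the two half-diagonals.
    fn perimeter(&self) -> f32 { 4.0 * self.half_diagonals[0].hypot(self.half_diagonals[1]) }
}

/// Hypothetical circular sector with a radius and a half-angle in radians.
struct CircularSector { radius: f32, half_angle: f32 }

impl CircularSector {
    fn arc_length(&self) -> f32 { 2.0 * self.half_angle * self.radius }
    fn area(&self) -> f32 { self.half_angle * self.radius.powi(2) }
}

/// Volume of a tetrahedron: |scalar triple product of its edge vectors| / 6.
fn tetrahedron_volume(a: [f32; 3], b: [f32; 3], c: [f32; 3], d: [f32; 3]) -> f32 {
    let sub = |p: [f32; 3], q: [f32; 3]| [p[0] - q[0], p[1] - q[1], p[2] - q[2]];
    let (u, v, w) = (sub(b, a), sub(c, a), sub(d, a));
    let cross = [
        v[1] * w[2] - v[2] * w[1],
        v[2] * w[0] - v[0] * w[2],
        v[0] * w[1] - v[1] * w[0],
    ];
    (u[0] * cross[0] + u[1] * cross[1] + u[2] * cross[2]).abs() / 6.0
}
```

Because the sector is described by its half-angle here, a half-angle of π/2 gives a semicircle.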

Improve Point and rename it to VectorSpace

- Authors: @mweatherley, @bushrat011899, @JohnTheCoolingFan, @NthTensor, @IQuick143, @alice-i-cecile

- PR #12747

Linear algebra is used everywhere in games, and we want to make sure it's easy to get right. That's why we've added a new VectorSpace trait, as part of our work to make bevy_math more general, expressive, and mathematically sound. Anything that implements VectorSpace behaves like a vector. More formally, the trait requires that implementations satisfy the vector space axioms for vector addition and scalar multiplication. We've also added a NormedVectorSpace trait, which includes an API for distance and magnitude.

These traits underpin the new curve and shape sampling APIs. VectorSpace is implemented for f32, the glam vector types, and several of the new color-space types. It completely replaces bevy_math::Point.
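To see why such a trait is useful, here is a simplified sketch loosely modeled on the description above. Anything that can be added, negated and scaled by an `f32` gets `lerp` for free. Generic helpers like the hypothetical `average` then work for scalars and vectors alike. The trait's exact bounds and the `Vec2` type here are assumptions for illustration; bevy_math's `VectorSpace` may require more.

```rust
use std::ops::{Add, Mul, Neg, Sub};

/// Simplified vector-space trait: add, subtract, negate, and scale by f32.
trait VectorSpace:
    Copy + Add<Output = Self> + Sub<Output = Self> + Neg<Output = Self> + Mul<f32, Output = Self>
{
    /// The additive identity.
    const ZERO: Self;

    /// Linear interpolation written purely in terms of the vector-space operations.
    fn lerp(self, rhs: Self, t: f32) -> Self {
        self * (1.0 - t) + rhs * t
    }
}

impl VectorSpace for f32 {
    const ZERO: Self = 0.0;
}

/// Hypothetical 2D vector type.
#[derive(Clone, Copy, Debug, PartialEq)]
struct Vec2 { x: f32, y: f32 }

impl Add for Vec2 {
    type Output = Vec2;
    fn add(self, o: Vec2) -> Vec2 { Vec2 { x: self.x + o.x, y: self.y + o.y } }
}
impl Sub for Vec2 {
    type Output = Vec2;
    fn sub(self, o: Vec2) -> Vec2 { Vec2 { x: self.x - o.x, y: self.y - o.y } }
}
impl Neg for Vec2 {
    type Output = Vec2;
    fn neg(self) -> Vec2 { Vec2 { x: -self.x, y: -self.y } }
}
impl Mul<f32> for Vec2 {
    type Output = Vec2;
    fn mul(self, s: f32) -> Vec2 { Vec2 { x: self.x * s, y: self.y * s } }
}

impl VectorSpace for Vec2 {
    const ZERO: Self = Vec2 { x: 0.0, y: 0.0 };
}

/// Written once against the trait, usable with every implementor.
/// `points` must be non-empty.
fn average<V: VectorSpace>(points: &[V]) -> V {
    let sum = points.iter().fold(V::ZERO, |acc, &p| acc + p);
    sum * (1.0 / points.len() as f32)
}
```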

The splines module in Bevy has been lacking some features for a long time. Splines are extremely useful in game development, so improving them would improve everything that uses them.

The biggest addition is NURBS support! NURBS (non-uniform rational B-splines) are a generalization of B-splines with many more parameters that can be tweaked to create specific curve shapes. We also added a LinearSpline, which can be used to put straight line segments in a curve. CubicCurve now acts as a sequence of curve segments to which you can add new pieces, so you can mix various spline types together to form a single path.

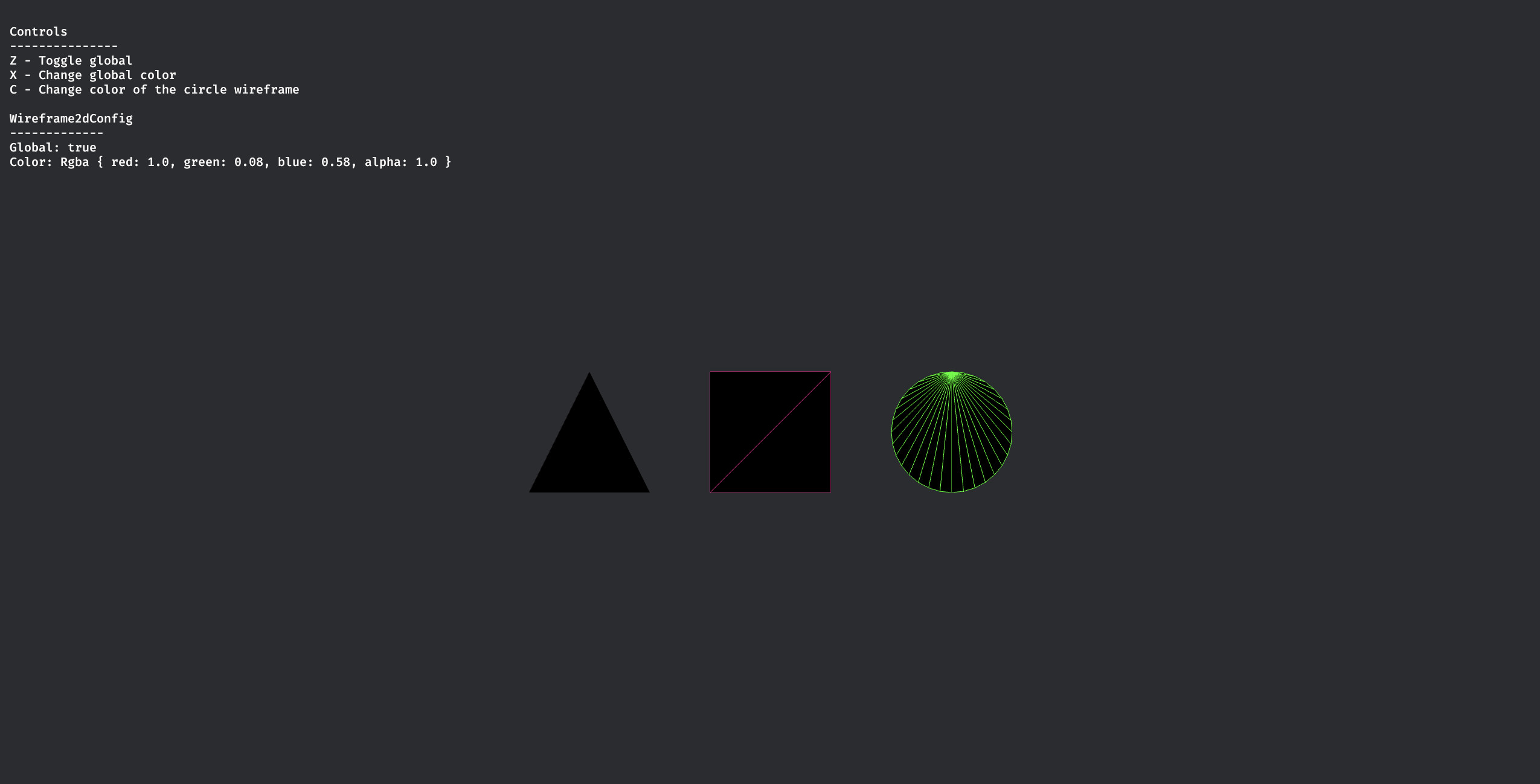
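Mixing spline types in one path becomes simple if every segment is converted to a common representation. The sketch below uses hypothetical `Segment` and `Curve` types and stores each segment as cubic polynomial coefficients. A straight line is just a cubic whose higher-order terms are zero, and a Bezier segment is converted from its control points. It illustrates the idea only and does not reproduce bevy_math's `CubicCurve` or its NURBS support.

```rust
use std::ops::{Add, Mul, Sub};

#[derive(Clone, Copy, Debug, PartialEq)]
struct P2 { x: f32, y: f32 }

impl Add for P2 { type Output = P2; fn add(self, o: P2) -> P2 { P2 { x: self.x + o.x, y: self.y + o.y } } }
impl Sub for P2 { type Output = P2; fn sub(self, o: P2) -> P2 { P2 { x: self.x - o.x, y: self.y - o.y } } }
impl Mul<f32> for P2 { type Output = P2; fn mul(self, s: f32) -> P2 { P2 { x: self.x * s, y: self.y * s } } }

/// One segment as coefficients of p(t) = c0 + c1 t + c2 t^2 + c3 t^3, t in [0, 1].
#[derive(Clone, Copy)]
struct Segment { coeff: [P2; 4] }

impl Segment {
    /// Straight line from `a` to `b`: the quadratic and cubic terms are zero.
    fn linear(a: P2, b: P2) -> Self {
        let zero = P2 { x: 0.0, y: 0.0 };
        Segment { coeff: [a, b - a, zero, zero] }
    }

    /// Cubic Bezier, expanded from control points into power-basis coefficients.
    fn bezier(p0: P2, p1: P2, p2: P2, p3: P2) -> Self {
        Segment {
            coeff: [
                p0,
                (p1 - p0) * 3.0,
                (p0 - p1 * 2.0 + p2) * 3.0,
                p3 - p0 + (p1 - p2) * 3.0,
            ],
        }
    }

    fn position(&self, t: f32) -> P2 {
        let [c0, c1, c2, c3] = self.coeff;
        // Horner's rule: c0 + t(c1 + t(c2 + t c3)).
        c0 + (c1 + (c2 + c3 * t) * t) * t
    }
}

/// A path of segments of any kind; segment `i` covers t in [i, i + 1].
/// Must contain at least one segment.
struct Curve { segments: Vec<Segment> }

impl Curve {
    fn position(&self, t: f32) -> P2 {
        let n = self.segments.len();
        let t = t.clamp(0.0, n as f32);
        let i = (t.floor() as usize).min(n - 1);
        self.segments[i].position(t - i as f32)
    }
}
```

Because each segment covers one unit of the parameter, a curve made of one line followed by one Bezier segment is evaluated over `t` in `[0, 2]`.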

2D Mesh Wireframes

- Authors: @msvbg, @IceSentry

- PR #12135

Wireframe materials are used to render the individual edges and faces of a mesh. They are often used as a debugging tool to visualize geometry, but can also be used for various stylized effects. Bevy supports displaying 3D meshes as wireframes, but lacked the ability to do this for 2D meshes until now.

To render your 2D mesh as a wireframe, add Wireframe2dPlugin to your app and a Wireframe2d component to the entity holding your 2D mesh. The color of the wireframe can be configured per-object by adding the Wireframe2dColor component, or globally by inserting a Wireframe2dConfig resource.

For an example of how to use the feature, have a look at the new wireframe_2d example:

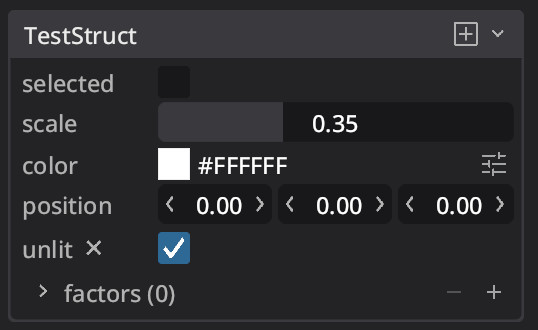
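Conceptually, a wireframe draws each edge of a mesh's triangles once. The sketch below uses a hypothetical `wireframe_edges` helper to derive that edge set from an indexed triangle list, deduplicating edges shared by neighboring triangles. It only illustrates what ends up on screen. Bevy's renderer may draw wireframes quite differently, for example by rasterizing triangles as lines on the GPU.

```rust
use std::collections::BTreeSet;

/// Unique edges of an indexed triangle list, each as (smaller, larger) index.
fn wireframe_edges(indices: &[u32]) -> Vec<(u32, u32)> {
    let mut edges = BTreeSet::new();
    for tri in indices.chunks_exact(3) {
        for (a, b) in [(tri[0], tri[1]), (tri[1], tri[2]), (tri[2], tri[0])] {
            // Normalize orientation so an edge shared by two triangles is stored once.
            edges.insert((a.min(b), a.max(b)));
        }
    }
    edges.into_iter().collect()
}
```

For a quad made of two triangles, `[0, 1, 2, 0, 2, 3]`, this yields five edges: the four sides plus the shared diagonal `(0, 2)`.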

Custom Reflect Field Attributes

- Authors: @MrGVSV

- PR #11659

One of the features of Bevy's reflection system is the ability to attach arbitrary "type data" to a type. This is most often used to allow trait methods to be called dynamically. However, some users saw it as an opportunity to do other awesome things.

The amazing bevy-inspector-egui used type data to great effect in order to allow users to configure their inspector UI per field:

```rust
use bevy_inspector_egui::prelude::*;
use bevy_reflect::Reflect;

#[derive(Reflect, Default, InspectorOptions)]
#[reflect(InspectorOptions)]
struct Slider {
    #[inspector(min = 0.0, max = 1.0)]
    value: f32,
}
```

Taking inspiration from this, Bevy 0.14 adds proper support for custom attributes when deriving Reflect, so users and third-party crates should no longer need to create custom type data specifically for this purpose. These attributes can be attached to structs, enums, fields, and variants using the #[reflect(@...)] syntax, where the ... can be any expression that resolves to a type implementing Reflect.

For example, we can use Rust's built-in RangeInclusive type to specify our own range for a field:

```rust
use std::ops::RangeInclusive;
use bevy_reflect::Reflect;

#[derive(Reflect, Default)]
struct Slider {
    // In expression position, generic arguments need the turbofish (`::<f32>`).
    #[reflect(@RangeInclusive::<f32>::new(0.0, 1.0))]
    // Since this accepts any expression,
    // we could have also used Rust's shorthand syntax:
    // #[reflect(@0.0..=1.0_f32)]
    value: f32,
}
```

Attributes can then be accessed dynamically using TypeInfo:

```rust
// `Typed` provides `Slider::type_info()`.
use bevy_reflect::{TypeInfo, Typed};

let TypeInfo::Struct(type_info) = Slider::type_info() else {
    panic!("expected struct");
};

let field = type_info.field("value").unwrap();

let range = field.get_attribute::<RangeInclusive<f32>>().unwrap();
assert_eq!(*range, 0.0..=1.0);
```
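The lookup above is keyed by type: you ask for an attribute by the type you stored. A minimal sketch of that idea uses a hypothetical `CustomAttributes` container built on `TypeId` and `Any`. bevy_reflect's actual storage may differ.

```rust
use std::any::{Any, TypeId};
use std::collections::HashMap;
use std::ops::RangeInclusive;

/// Hypothetical container mapping each attribute's type to its value.
#[derive(Default)]
struct CustomAttributes {
    values: HashMap<TypeId, Box<dyn Any>>,
}

impl CustomAttributes {
    /// Store `value` under its own type; a second value of the same type replaces it.
    fn with_attribute<T: Any>(mut self, value: T) -> Self {
        self.values.insert(TypeId::of::<T>(), Box::new(value));
        self
    }

    /// Look an attribute up by type, downcasting it back to `T`.
    fn get_attribute<T: Any>(&self) -> Option<&T> {
        self.values.get(&TypeId::of::<T>())?.downcast_ref::<T>()
    }
}
```

In this sketch the key is the attribute's type, so each type can appear at most once per field.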

This feature opens up a lot of possibilities for things built on top of Bevy's reflection system. And by making it agnostic to any particular usage, it allows for a wide range of use cases, including aiding editor work down the road.

In fact, this feature has already been put to use by bevy_reactor to power their custom inspector UI:

```rust
#[derive(Resource, Debug, Reflect, Clone, Default)]
pub struct TestStruct {
    pub selected: bool,
    #[reflect(@ValueRange::<f32>(0.0..1.0))]
    pub scale: f32,
    pub color: Srgba,
    pub position: Vec3,
    pub unlit: Option<bool>,
    #[reflect(@ValueRange::<f32>(0.0..10.0))]
    pub roughness: Option<f32>,
    #[reflect(@Precision(2))]
    pub metalness: Option<f32>,
    #[reflect(@ValueRange::<f32>(0.0..1000.0))]
    pub factors: Vec<f32>,
}
```

Query Iteration Sorting

- Authors: @Victoronz

- PR #13417

Bevy does not make any guarantees about the order in which a query returns its items. So if we wish to work with our query items in a certain order, we need to sort them! We might want to display the scores of the players in order, or ensure a consistent iteration order for the sake of networking stability. In 0.13 a sort could look like this:

```rust
#[derive(Component, Copy, Clone, Deref)]
pub struct Attack(pub usize);

fn handle_enemies(enemies: Query<(&Health, &Attack, &Defense)>) {
    // An allocation!
    let mut enemies: Vec<_> = enemies.iter().collect();
    // `Attack` itself is not `Ord` here, so sort by its inner value.
    enemies.sort_by_key(|(_, atk, _)| atk.0);
    for enemy in enemies {
        work_with(enemy);
    }
}
```

This can get especially unwieldy and repetitive when sorting within multiple systems. Even if we always want the same sort, different Query types make it unreasonably difficult to abstract away as a user! To solve this, we implemented new sort methods on the QueryIter type, turning the example into:

```rust
// To be used as a sort key, `Attack` now implements Ord.
#[derive(Component, Copy, Clone, Deref, PartialEq, Eq, PartialOrd, Ord)]
pub struct Attack(pub usize);

fn handle_enemies(enemies: Query<(&Health, &Attack, &Defense)>) {
    // Still an allocation, but undercover.
    for enemy in enemies.iter().sort::<&Attack>() {
        work_with(enemy);
    }
}
```

To sort our query with the Attack component, we specify it as the generic parameter to sort. To sort by more than one Component, we can do so, independent of Component order in the original Query type: enemies.iter().sort::<(&Defense, &Attack)>()

The generic parameter can be thought of as being a lens or "subset" of the original query, on which the underlying sort is actually performed. The result is then internally used to return a new sorted query iterator over the original query items. With the default sort, the lens has to be fully Ord, like with slice::sort. If this is not enough, we also have the counterparts to the remaining 6 sort methods from slice!
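The lens idea can be sketched over an ordinary slice. Compute a key for each item, then sort the keys; that key buffer is the "undercover" allocation. Finally, yield the original items in key order. The `sorted_by_lens` function below is a hypothetical illustration, not how `QueryIter::sort` is implemented. Here the "lens" is just a closure that picks out part of each item.

```rust
/// Yield `items` ordered by the key `lens` extracts from each one.
fn sorted_by_lens<'a, T, K: Ord>(
    items: &'a [T],
    lens: impl Fn(&T) -> K,
) -> impl Iterator<Item = &'a T> {
    // The hidden allocation: one (key, original index) pair per item.
    let mut keyed: Vec<(K, usize)> =
        items.iter().enumerate().map(|(i, item)| (lens(item), i)).collect();
    keyed.sort();
    // Map the sorted keys back to the original items.
    keyed.into_iter().map(move |(_, i)| &items[i])
}
```

Sorting `(key, index)` pairs breaks ties by original position, so this behaves like a stable sort.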

The generic lens argument works the same way as with Query::transmute_lens. Filters are not part of the lens; they are inherited from the original query. The transmute_lens infrastructure has some nice additional features, which allow for this:

```rust
fn handle_enemies(enemies: Query<(&Health, &Attack, &Defense, &Rarity)>) {
    for enemy in enemies.iter().sort_unstable::<Entity>() {
        work_with(enemy);
    }
}
```

Because we can add Entity to any lens, we can sort by it without including it in the original query!

These sort methods work with both Query::iter and Query::iter_mut! The rest of the iterator methods on Query do not currently support sorting. The sorts return QuerySortedIter, itself an iterator, enabling the use of further iterator adapters on it.

Keep in mind that the lensing does add some overhead, so on average these query iterator sorts do not perform as well as a manual sort. However, this strongly depends on the workload, so it's best to measure it yourself if performance matters!

SystemBuilder

- Authors: @james-j-obrien

- PR #13123

Bevy users love systems, so we made a builder for their systems so they can build systems from within systems. At runtime, using dynamically-defined component and resource types!

While you can use SystemBuilder as an ergonomic alternative to the SystemState API for splitting the World into disjoint borrows, its true value lies in its dynamic usage.

You can choose to create a different system based on runtime branches or, more intriguingly, the queries and so on can use runtime-defined component IDs. This is another vital step towards creating an ergonomic and safe API to work with dynamic queries, laying the groundwork for the devs who want to integrate scripting languages or bake in sophisticated modding support for their game.

// Start by creating a builder from the world
let system = SystemBuilder::<()>::new(&mut world)
    // Various helper methods exist to add `SystemParam`.
    .resource::<R>()
    .query::<&A>()
    // Alternatively use `.param::<T>()` for any other `SystemParam` types.
    .param::<MyParam>()
    // Finish it all up with a call to `.build`
    .build(my_system);

// The parameters the builder is initialized with will appear first in the arguments.
let system = SystemBuilder::<(Res<R>, Query<&A>)>::new(&mut world)
    .param::<MyParam>()